Maxima and Minima Problems

| (5 intermediate revisions by 2 users not shown) | |||

| Line 54: | Line 54: | ||

==Saddle Point== | ==Saddle Point== | ||

| − | [[File:Saddle point.svg|thumb|right|300px|A saddle point (in red) on the graph of z=x<sup>2</sup>−y<sup>2</sup> ( | + | [[File:Saddle point.svg|thumb|right|300px|A saddle point (in red) on the graph of z=x<sup>2</sup>−y<sup>2</sup> (hyperbolic paraboloid)]] |

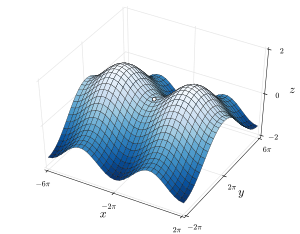

[[File:Saddle_Point_between_maxima.svg|thumb|300px|right|Saddle point between two hills (the intersection of the figure-eight <math>z</math>-contour)]] | [[File:Saddle_Point_between_maxima.svg|thumb|300px|right|Saddle point between two hills (the intersection of the figure-eight <math>z</math>-contour)]] | ||

| Line 70: | Line 70: | ||

\end{bmatrix} | \end{bmatrix} | ||

</math> | </math> | ||

| − | which is indefinite. Therefore, this point is a saddle point. This criterion gives only a sufficient condition. For example, the point <math>(0, 0, 0)</math> is a saddle point for the function <math>z=x^4-y^4,</math> but the Hessian matrix of this function at the origin is the | + | which is indefinite. Therefore, this point is a saddle point. This criterion gives only a sufficient condition. For example, the point <math>(0, 0, 0)</math> is a saddle point for the function <math>z=x^4-y^4,</math> but the Hessian matrix of this function at the origin is the null matrix, which is not indefinite. |

In the most general terms, a '''saddle point''' for a smooth function (whose graph is a curve, surface or hypersurface) is a stationary point such that the curve/surface/etc. in the neighborhood of that point is not entirely on any side of the tangent space at that point. | In the most general terms, a '''saddle point''' for a smooth function (whose graph is a curve, surface or hypersurface) is a stationary point such that the curve/surface/etc. in the neighborhood of that point is not entirely on any side of the tangent space at that point. | ||

| Line 101: | Line 101: | ||

A saddle point of a matrix is an element which is both the largest element in its column and the smallest element in its row. | A saddle point of a matrix is an element which is both the largest element in its column and the smallest element in its row. | ||

| + | |||

| + | ==Extrema for functions of two variables== | ||

| + | |||

| + | Suppose that {{math|''f''(''x'', ''y'')}} is a differentiable real function of two variables whose second partial derivatives exist and are continuous. The Hessian matrix {{mvar|H}} of {{mvar|f}} is the 2 × 2 matrix of partial derivatives of {{mvar|f}}: | ||

| + | <math display="block">H(x,y) = \begin{bmatrix} | ||

| + | f_{xx}(x,y) &f_{xy}(x,y)\\ | ||

| + | f_{yx}(x,y) &f_{yy}(x,y) | ||

| + | \end{bmatrix}.</math> | ||

| + | |||

| + | Define {{math|''D''(''x'', ''y'')}} to be the determinant | ||

| + | <math display="block">D(x,y)=\det(H(x,y)) = f_{xx}(x,y)f_{yy}(x,y) - \left( f_{xy}(x,y) \right)^2. </math> | ||

| + | of {{mvar|H}}. Finally, suppose that {{math|(''a'', ''b'')}} is a critical point of {{mvar|f}} (that is, {{math|1=''f''<sub>''x''</sub>(''a'', ''b'') = ''f''<sub>''y''</sub>(''a'', ''b'') =}} 0). Then the second partial derivative test asserts the following: | ||

| + | |||

| + | #If {{math|''D''(''a'', ''b'') > 0}} and {{math|''f<sub>xx</sub>''(''a'', ''b'') > 0}} then {{math|(''a'', ''b'')}} is a local minimum of {{mvar|f}}. | ||

| + | #If {{math|''D''(''a'', ''b'') > 0}} and {{math|''f<sub>xx</sub>''(''a'', ''b'') < 0}} then {{math|(''a'', ''b'')}} is a local maximum of {{mvar|f}}. | ||

| + | #If {{math|''D''(''a'', ''b'') < 0}} then {{math|(''a'', ''b'')}} is a saddle point of {{mvar|f}}. | ||

| + | #If {{math|1=''D''(''a'', ''b'') = 0}} then the second derivative test is inconclusive, and the point {{math|(''a'', ''b'')}} could be any of a minimum, maximum or saddle point. | ||

| + | |||

| + | Sometimes other equivalent versions of the test are used. Note that in cases 1 and 2, the requirement that {{math|''f<sub>xx</sub>'' ''f<sub>yy</sub>'' − ''f<sub>xy</sub>''<sup>2</sup>}} is positive at {{math|(''x'', ''y'')}} implies that {{mvar|f<sub>xx</sub>}} and {{mvar|f<sub>yy</sub>}} have the same sign there. Therefore the second condition, that {{mvar|f<sub>xx</sub>}} be greater (or less) than zero, could equivalently be that {{mvar|f<sub>yy</sub>}} or {{math|1=tr(''H'') = ''f<sub>xx</sub>'' + ''f<sub>yy</sub>''}} be greater (or less) than zero at that point. | ||

| + | |||

| + | ===Functions of many variables=== | ||

| + | For a function ''f'' of three or more variables, there is a generalization of the rule above. In this context, instead of examining the determinant of the Hessian matrix, one must look at the eigenvalues of the Hessian matrix at the critical point. The following test can be applied at any critical point ''a'' for which the Hessian matrix is invertible: | ||

| + | |||

| + | # If the Hessian is positive definite (equivalently, has all eigenvalues positive) at ''a'', then ''f'' attains a local minimum at ''a''. | ||

| + | # If the Hessian is negative definite (equivalently, has all eigenvalues negative) at ''a'', then ''f'' attains a local maximum at ''a''. | ||

| + | # If the Hessian has both positive and negative eigenvalues then ''a'' is a saddle point for ''f'' (and in fact this is true even if ''a'' is degenerate). | ||

| + | |||

| + | In those cases not listed above, the test is inconclusive. | ||

| + | |||

| + | For functions of three or more variables, the ''determinant'' of the Hessian does not provide enough information to classify the critical point, because the number of jointly sufficient second-order conditions is equal to the number of variables, and the sign condition on the determinant of the Hessian is only one of the conditions. Note that in the one-variable case, the Hessian condition simply gives the usual second derivative test. | ||

| + | |||

| + | In the two variable case, <math>D(a, b)</math> and <math>f_{xx}(a,b)</math> are the principal minors of the Hessian. The first two conditions listed above on the signs of these minors are the conditions for the positive or negative definiteness of the Hessian. For the general case of an arbitrary number ''n'' of variables, there are ''n'' sign conditions on the ''n'' principal minors of the Hessian matrix that together are equivalent to positive or negative definiteness of the Hessian (Sylvester's criterion): for a local minimum, all the principal minors need to be positive, while for a local maximum, the minors with an odd number of rows and columns need to be negative and the minors with an even number of rows and columns need to be positive. | ||

==Resources== | ==Resources== | ||

| Line 106: | Line 138: | ||

*[https://www.youtube.com/watch?v=Hg38kfK5w4E Absolute Maximum and Minimum Values of Multivariable Functions - Calculus 3] Video by The Organic Chemistry Tutor 2019 | *[https://www.youtube.com/watch?v=Hg38kfK5w4E Absolute Maximum and Minimum Values of Multivariable Functions - Calculus 3] Video by The Organic Chemistry Tutor 2019 | ||

| + | |||

| + | ==Licensing== | ||

| + | Content obtained and/or adapted from: | ||

| + | * [https://en.wikibooks.org/wiki/Calculus/Extrema_and_Points_of_Inflection Extrema and Points of Inflection, Wikibooks: Calculus] under a CC BY-SA license | ||

| + | * [https://en.wikipedia.org/wiki/Second_partial_derivative_test Second partial derivative test, Wikipedia] under a CC BY-SA license | ||

| + | * [https://en.wikipedia.org/wiki/Saddle_point Saddle point, Wikipedia] under a CC BY-SA license | ||

Latest revision as of 15:49, 2 November 2021

Maxima and minima are points where a function reaches a highest or lowest value, respectively. There are two kinds of extrema (a word meaning maximum or minimum): global and local, sometimes referred to as "absolute" and "relative", respectively. A global maximum is a point where the function takes its largest value over its entire domain, while a global minimum is a point where it takes its smallest value over its entire domain. On the other hand, local extrema are points where the function is largest or smallest compared with its values in the immediate vicinity.

In many cases, extrema look like the crest of a hill or the bottom of a bowl on a graph of the function. A global extremum is always a local extremum too, because it is the largest or smallest value over the entire domain of the function, and therefore also in its vicinity. It is also possible to have a function with no extrema, global or local: $y = x$ is a simple example.

At any extremum in the interior of the domain, the slope of the graph is necessarily 0 (or is undefined, as in the case of $y = |x|$ at $x = 0$), as the graph must stop rising or falling at an extremum and begin to head in the opposite direction. Because of this, extrema are also commonly called stationary points or turning points. Therefore, wherever the first derivative exists, it is equal to 0 at an extremum. If the graph has one or more of these stationary points, they may be found by setting the first derivative equal to 0 and finding the roots of the resulting equation.
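For instance, with a hypothetical function chosen only for illustration, $y = x^2 - 4x + 1$, setting the first derivative equal to 0 gives a single stationary point:

```latex
y' = 2x - 4 = 0 \quad\Longrightarrow\quad x = 2, \qquad y(2) = 2^2 - 4 \cdot 2 + 1 = -3.
```

So this graph has exactly one stationary point, at $(2, -3)$; whether it is a maximum or a minimum still has to be decided separately.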

However, a slope of zero does not guarantee a maximum or minimum: there is a third class of stationary point called a saddle point. Consider the function

$$y = x^3.$$

The derivative is

$$y' = 3x^2.$$

The slope at $x = 0$ is 0. We have a slope of 0, but while this makes it a stationary point, it does not mean that it is a maximum or minimum. Looking at the graph of the function you will see that $x = 0$ is neither: it is just a spot at which the function flattens out (here $y' = 3x^2 \ge 0$ on both sides, so the function keeps rising). True extrema require a sign change in the first derivative. This makes sense: you have to rise (positive slope) to and fall (negative slope) from a maximum. In between rising and falling, on a smooth curve, there will be a point of zero slope: the maximum. A minimum would exhibit similar properties, just in reverse.

This leads to a simple method to classify a stationary point: plug $x$ values slightly to the left and right of it into the derivative of the function. If the results have opposite signs, then it is a true maximum or minimum. You can also use these slopes to figure out whether it is a maximum or a minimum: the left-side slope will be positive for a maximum and negative for a minimum. However, you must exercise caution with this method: if you pick a point too far from the stationary point, it could lie on the far side of another stationary point, and you could classify the point incorrectly.
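The sign-change check can be sketched in Python as below. The function name and the probe distance `h` are illustrative assumptions; the derivative is passed in as a callable, and a badly chosen `h` runs into exactly the pitfall just described, as the last call shows.

```python
from typing import Callable


def classify_by_sign_change(df: Callable[[float], float], x0: float, h: float = 1e-3) -> str:
    """Classify the stationary point x0 from the sign of the derivative df on each side.

    h is an assumed probe distance: if it is too large, a probe may land beyond
    another stationary point and the answer can be wrong.
    """
    left, right = df(x0 - h), df(x0 + h)
    if left > 0 and right < 0:
        return "maximum"  # rising before x0, falling after it
    if left < 0 and right > 0:
        return "minimum"  # falling before x0, rising after it
    return "not an extremum"  # no strict sign change


# y = x**3 has y' = 3x**2, which is positive on both sides of 0
print(classify_by_sign_change(lambda x: 3 * x**2, 0.0))
# y = x**2 has y' = 2x; y = -x**2 has y' = -2x
print(classify_by_sign_change(lambda x: 2 * x, 0.0))
print(classify_by_sign_change(lambda x: -2 * x, 0.0))
# y = x**4/4 - x**2/2 has a local maximum at 0 (y' = x**3 - x), but with h = 2
# the probes land beyond the minima at x = -1 and x = 1 and report "minimum"
print(classify_by_sign_change(lambda x: x**3 - x, 0.0, h=2.0))
```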


The Extremum Test

A more rigorous method to classify a stationary point is called the extremum test, or second derivative test. As we mentioned before, the sign of the first derivative must change for a stationary point to be a true extremum. Now, the second derivative of the function tells us the rate of change of the first derivative. It therefore follows that if the second derivative is positive at the stationary point, then the slope is increasing there, going from negative just before the point to positive just after it. The fact that it is a stationary point in the first place means that this can only be a minimum. Conversely, if the second derivative is negative at that point, then it is a maximum.
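A short worked instance of the test, using the hypothetical function $f(x) = x^3 - 3x$ (not taken from the original text):

```latex
\begin{aligned}
f'(x) &= 3x^2 - 3 = 0 \quad\Longrightarrow\quad x = \pm 1, \qquad f''(x) = 6x,\\
f''(1) &= 6 > 0 \quad\Longrightarrow\quad \text{local minimum at } x = 1,\ f(1) = -2,\\
f''(-1) &= -6 < 0 \quad\Longrightarrow\quad \text{local maximum at } x = -1,\ f(-1) = 2.
\end{aligned}
```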

Now, if the second derivative is 0, we have a problem. It could be a point of inflexion, or it could still be an extremum. Examples of each of these cases are below - all have a second derivative equal to 0 at the stationary point in question:

- $y = x^3$ has a point of inflexion at $x = 0$

- $y = x^4$ has a minimum at $x = 0$

- $y = -x^4$ has a maximum at $x = 0$

However, this is not an insoluble problem. What we must do is continue to differentiate until we get, at the $(n+1)$th derivative, a non-zero result at the stationary point $x_0$:

$$f'(x_0) = f''(x_0) = \cdots = f^{(n)}(x_0) = 0, \qquad f^{(n+1)}(x_0) \neq 0.$$

If $n$ is odd, then the stationary point is a true extremum. If the $(n+1)$th derivative is positive, it is a minimum; if the $(n+1)$th derivative is negative, it is a maximum. If $n$ is even, then the stationary point is a point of inflexion.
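For polynomials the higher-order test can be run exactly, since each derivative is again a polynomial. The sketch below assumes the polynomial is given as a coefficient list `[c0, c1, c2, ...]` (so `c_k` multiplies `x**k`) and that `x0` is an integer or a `fractions.Fraction`, so the zero checks are exact; the helper names are hypothetical, and general functions would need symbolic differentiation instead.

```python
def derivative(coeffs):
    """Differentiate a polynomial given as [c0, c1, c2, ...], where c_k multiplies x**k."""
    return [k * c for k, c in enumerate(coeffs)][1:]


def evaluate(coeffs, x):
    """Evaluate a coefficient-list polynomial at x with Horner's rule."""
    result = 0
    for c in reversed(coeffs):
        result = result * x + c
    return result


def higher_order_test(coeffs, x0):
    """Classify a stationary point x0 of a polynomial by the higher-order derivative test.

    Differentiates until the (n+1)th derivative is non-zero at x0, so that the
    first n derivatives vanish there: n odd gives an extremum (its kind set by the
    sign of that derivative), n even gives a point of inflexion.
    """
    d = derivative(coeffs)
    if evaluate(d, x0) != 0:
        raise ValueError("x0 is not a stationary point")
    order = 1  # order of the derivative currently stored in d
    while d:
        d = derivative(d)
        order += 1
        value = evaluate(d, x0)
        if value != 0:
            n = order - 1
            if n % 2 == 1:
                return "minimum" if value > 0 else "maximum"
            return "point of inflexion"
    return "constant function: the test does not apply"


print(higher_order_test([0, 0, 0, 1], 0))      # x**3
print(higher_order_test([0, 0, 0, 0, 1], 0))   # x**4
print(higher_order_test([0, 0, 0, 0, -1], 0))  # -x**4
```

For instance, `[0, 0, 0, 0, -1]` encodes $f(x) = -x^4$; its first non-zero derivative at $x = 0$ is the fourth, so $n = 3$ and the point is classified as a maximum.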

As an example, let us consider the function

$$f(x) = -x^4.$$

We now differentiate until we get a non-zero result at the stationary point at $x = 0$ (assume we have already found this point as usual):

$$f'(x) = -4x^3, \qquad f''(x) = -12x^2, \qquad f'''(x) = -24x, \qquad f^{(4)}(x) = -24.$$

At $x = 0$ the first three derivatives vanish and $f^{(4)}(0) = -24 \neq 0$. Therefore $n + 1$ is 4, so $n$ is 3. This is odd, and the fourth derivative is negative, so we have a maximum. Note that none of the methods given can tell you whether this is a global extremum or just a local one. To do this, you would have to set the function equal to the height of the extremum and look for other roots.

Critical Points

Critical points are the points where a function's derivative is 0 or not defined. Suppose we are interested in finding the maximum or minimum, on a given closed interval, of a function that is continuous on that interval. The extreme values of the function on that interval will occur at one or more of the critical points and/or at one or both of the endpoints. We can prove this by contradiction. Suppose that the function $f(x)$ has its maximum at a point $x_0$ in the interval $[a,b]$ that is not an endpoint and where the derivative of the function is defined and not $0$. If the derivative is positive, then values slightly greater than $x_0$ will cause the function to increase. Since $x_0$ is not an endpoint, at least some of these values are in $[a,b]$. But this contradicts the assumption that $f(x_0)$ is the maximum of $f(x)$ for $x$ in $[a,b]$. Similarly, if the derivative is negative, then values slightly less than $x_0$ will cause the function to increase. Since $x_0$ is not an endpoint, at least some of these values are in $[a,b]$. This again contradicts the assumption that $f(x_0)$ is the maximum of $f(x)$ for $x$ in $[a,b]$. A similar argument applies to the minimum.
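The closed-interval argument suggests a procedure: collect the endpoints and the interior critical points, evaluate the function at each, and compare. Below is a numerical sketch under stated assumptions: the derivative `df` is supplied by hand, its zeros are located by sampling for sign changes and refining with bisection, and points where the derivative is undefined are not handled. The function names and the sample count are illustrative choices.

```python
def critical_points(df, a, b, samples=1000, tol=1e-12):
    """Approximate the zeros of the derivative df strictly inside (a, b).

    Assumes each zero either shows up as a sign change between neighbouring
    sample points or lands exactly on a sample point; other zeros may be missed.
    """
    xs = [a + (b - a) * i / samples for i in range(samples + 1)]
    roots = []
    for left, right in zip(xs, xs[1:]):
        fl, fr = df(left), df(right)
        if fl == 0:
            roots.append(left)
        elif fl * fr < 0:
            lo, hi = left, right
            while hi - lo > tol:  # bisection keeps a sign change inside [lo, hi]
                mid = (lo + hi) / 2
                if df(lo) * df(mid) <= 0:
                    hi = mid
                else:
                    lo = mid
            roots.append((lo + hi) / 2)
    return [r for r in roots if a < r < b]


def extreme_values(f, df, a, b):
    """Compare f at both endpoints and at every interior critical point."""
    candidates = [a, b] + critical_points(df, a, b)
    values = [(f(x), x) for x in candidates]
    return min(values), max(values)  # each result is a (value, x) pair


# Illustrative function f(x) = x**2 - 2x on [0, 3], with f'(x) = 2x - 2
print(extreme_values(lambda x: x**2 - 2 * x, lambda x: 2 * x - 2, 0.0, 3.0))
```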

Example 1

Consider the function $f(x) = x$ on the interval $[-1,1]$. The unrestricted function $f(x) = x$ has no maximum or minimum. On the interval $[-1,1]$, however, it is obvious that the minimum will be $-1$, which occurs at $x = -1$, and the maximum will be $1$, which occurs at $x = 1$. Since there are no critical points ($f'(x)$ exists and equals $1$ everywhere), the extreme values must occur at the endpoints.

Example 2

Find the maximum and minimum of the function on the interval .

First start by finding the roots of the function derivative:

Now evaluate the function at all critical points and endpoints to find the extreme values.

From this we can see that the minimum on the interval is -24 when and the maximum on the interval is when

Saddle Point

In mathematics, a saddle point or minimax point is a point on the surface of the graph of a function where the slopes (derivatives) in orthogonal directions are all zero (a critical point), but which is not a local extremum of the function. An example of a saddle point is a critical point with a relative minimum along one axial direction (between peaks) and a relative maximum along the crossing axis. However, a saddle point need not be in this form. For example, the function $f(x, y) = x^2 + y^3$ has a critical point at $(0, 0)$ that is a saddle point, since it is neither a relative maximum nor a relative minimum, but it does not have a relative maximum or relative minimum in the $y$-direction.

The name derives from the fact that the prototypical example in two dimensions is a surface that curves up in one direction, and curves down in a different direction, resembling a riding saddle or a mountain pass between two peaks forming a landform saddle. In terms of contour lines, a saddle point in two dimensions gives rise to a contour graph or trace in which the contour corresponding to the saddle point's value appears to intersect itself.

Mathematical discussion

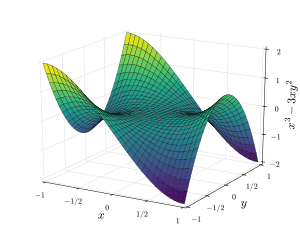

A simple criterion for checking if a given stationary point of a real-valued function $F(x,y)$ of two real variables is a saddle point is to compute the function's Hessian matrix at that point: if the Hessian is indefinite, then that point is a saddle point. For example, the Hessian matrix of the function $z = x^2 - y^2$ at the stationary point $(x, y, z) = (0, 0, 0)$ is the matrix

$$\begin{bmatrix} 2 & 0 \\ 0 & -2 \end{bmatrix},$$

which is indefinite. Therefore, this point is a saddle point. This criterion gives only a sufficient condition. For example, the point $(0, 0, 0)$ is a saddle point for the function $z = x^4 - y^4$, but the Hessian matrix of this function at the origin is the null matrix, which is not indefinite.
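For $z = x^2 - y^2$ and $z = x^4 - y^4$, the Hessians at the origin follow directly from the second partial derivatives:

```latex
\begin{aligned}
z = x^2 - y^2:&\quad f_{xx} = 2,\ f_{yy} = -2,\ f_{xy} = 0
  \;\Longrightarrow\; H(0,0) = \begin{bmatrix} 2 & 0 \\ 0 & -2 \end{bmatrix} \quad (\text{eigenvalues } 2,\ -2),\\
z = x^4 - y^4:&\quad f_{xx} = 12x^2,\ f_{yy} = -12y^2,\ f_{xy} = 0
  \;\Longrightarrow\; H(0,0) = \begin{bmatrix} 0 & 0 \\ 0 & 0 \end{bmatrix}.
\end{aligned}
```

Even though the second Hessian vanishes, $z(x, 0) = x^4 > 0$ and $z(0, y) = -y^4 < 0$ for nonzero $x$ and $y$, so the origin is still a saddle point of $z = x^4 - y^4$.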

In the most general terms, a saddle point for a smooth function (whose graph is a curve, surface or hypersurface) is a stationary point such that the curve/surface/etc. in the neighborhood of that point is not entirely on any side of the tangent space at that point.

In a domain of one dimension, a saddle point is a point which is both a stationary point and a point of inflection. Since it is a point of inflection, it is not a local extremum.

Saddle surface

A saddle surface is a smooth surface containing one or more saddle points.

Classical examples of two-dimensional saddle surfaces in the Euclidean space are second order surfaces, the hyperbolic paraboloid (which is often referred to as "the saddle surface" or "the standard saddle surface") and the hyperboloid of one sheet. The Pringles potato chip or crisp is an everyday example of a hyperbolic paraboloid shape.

Saddle surfaces have negative Gaussian curvature, which distinguishes them from convex/elliptical surfaces, which have positive Gaussian curvature. A classical third-order saddle surface is the monkey saddle.

Examples

In a two-player zero sum game defined on a continuous space, the equilibrium point is a saddle point.

For a second-order linear autonomous system, a critical point is a saddle point if the characteristic equation has one positive and one negative real eigenvalue.
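For a planar linear system $\dot{x} = ax + by$, $\dot{y} = cx + dy$, the characteristic equation is $\lambda^2 - (a + d)\lambda + (ad - bc) = 0$. The sketch below (the function name is hypothetical) solves it and flags the saddle case; equivalently, the origin is a saddle exactly when $ad - bc < 0$, since the product of the eigenvalues is the determinant.

```python
import math


def linear_saddle(a, b, c, d):
    """Check whether the origin of x' = a*x + b*y, y' = c*x + d*y is a saddle point.

    Returns (is_saddle, eigenvalues); eigenvalues is None when they are complex.
    """
    trace, det = a + d, a * d - b * c
    disc = trace * trace - 4 * det  # discriminant of lambda**2 - trace*lambda + det
    if disc < 0:
        return False, None  # complex pair: a spiral or a centre, never a saddle
    root = math.sqrt(disc)
    l1, l2 = (trace - root) / 2, (trace + root) / 2
    return l1 < 0 < l2, (l1, l2)


print(linear_saddle(1, 0, 0, -1))   # x' = x, y' = -y: eigenvalues -1 and 1
print(linear_saddle(-1, 0, 0, -2))  # both eigenvalues negative: a stable node
```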

In optimization subject to equality constraints, the first-order conditions describe a saddle point of the Lagrangian.

Other uses

In dynamical systems, if the dynamics are given by a differentiable map $f$, then a point is hyperbolic if and only if the differential of $f^n$ (where $n$ is the period of the point) has no eigenvalue on the (complex) unit circle when computed at the point. Then a saddle point is a hyperbolic periodic point whose stable and unstable manifolds have a dimension that is not zero.

A saddle point of a matrix is an element which is both the largest element in its column and the smallest element in its row.
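A direct way to find such entries is to precompute each column's maximum and compare it with each row's minimum. The sketch below (hypothetical function name, matrix given as a list of equal-length rows) returns every saddle entry, and returns an empty list when the matrix has none.

```python
def matrix_saddle_points(m):
    """Return (row, column, value) for each entry that is the largest in its column
    and the smallest in its row."""
    col_max = [max(col) for col in zip(*m)]
    points = []
    for i, row in enumerate(m):
        row_min = min(row)
        for j, value in enumerate(row):
            if value == row_min and value == col_max[j]:
                points.append((i, j, value))
    return points


print(matrix_saddle_points([[3, 1, 4], [2, 0, 1], [5, 2, 6]]))  # [(2, 1, 2)]
print(matrix_saddle_points([[1, 2], [2, 1]]))                   # []
```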

Extrema for functions of two variables

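Suppose that $f(x, y)$ is a differentiable real function of two variables whose second partial derivatives exist and are continuous. The Hessian matrix $H$ of $f$ is the 2 × 2 matrix of second partial derivatives of $f$:

$$H(x,y) = \begin{bmatrix} f_{xx}(x,y) & f_{xy}(x,y) \\ f_{yx}(x,y) & f_{yy}(x,y) \end{bmatrix}.$$

Define $D(x, y)$ to be the determinant of $H$:

$$D(x,y) = \det(H(x,y)) = f_{xx}(x,y)\,f_{yy}(x,y) - \left(f_{xy}(x,y)\right)^2.$$

(The last step uses $f_{yx} = f_{xy}$, which holds because the second partial derivatives are continuous.) Finally, suppose that $(a, b)$ is a critical point of $f$ (that is, $f_x(a, b) = f_y(a, b) = 0$). Then the second partial derivative test asserts the following: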

1. If $D(a, b) > 0$ and $f_{xx}(a, b) > 0$, then $(a, b)$ is a local minimum of $f$.

2. If $D(a, b) > 0$ and $f_{xx}(a, b) < 0$, then $(a, b)$ is a local maximum of $f$.

3. If $D(a, b) < 0$, then $(a, b)$ is a saddle point of $f$.

4. If $D(a, b) = 0$, then the second derivative test is inconclusive, and the point $(a, b)$ could be any of a minimum, maximum or saddle point.

Sometimes other equivalent versions of the test are used. Note that in cases 1 and 2, the requirement that $f_{xx} f_{yy} - f_{xy}^2$ is positive at $(a, b)$ means $f_{xx} f_{yy} > f_{xy}^2 \ge 0$, which implies that $f_{xx}$ and $f_{yy}$ have the same sign there. Therefore the second condition, that $f_{xx}$ be greater (or less) than zero, could equivalently be that $f_{yy}$ or $\operatorname{tr}(H) = f_{xx} + f_{yy}$ be greater (or less) than zero at that point.
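The four cases translate directly into code. The sketch below assumes the second partial derivatives have already been evaluated at the critical point $(a, b)$ and that $f_{xy} = f_{yx}$; the function name is hypothetical. As a usage example it classifies the critical points $(\pm 1, 0)$ of the illustrative function $f(x, y) = x^3 - 3x + y^2$, where $f_{xx} = 6x$, $f_{yy} = 2$ and $f_{xy} = 0$.

```python
def second_partial_test(fxx, fyy, fxy):
    """Second partial derivative test at a critical point (a, b).

    Arguments are the second partial derivatives evaluated at (a, b);
    fxy is assumed equal to fyx.
    """
    D = fxx * fyy - fxy**2
    if D > 0:
        # D > 0 forces fxx != 0, and fxx and fyy share a sign
        return "local minimum" if fxx > 0 else "local maximum"
    if D < 0:
        return "saddle point"
    return "inconclusive"


# f(x, y) = x**3 - 3*x + y**2 has critical points where 3x**2 - 3 = 0 and 2y = 0
for x, y in [(1, 0), (-1, 0)]:
    print((x, y), second_partial_test(6 * x, 2, 0))
```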

Functions of many variables

For a function $f$ of three or more variables, there is a generalization of the rule above. In this context, instead of examining the determinant of the Hessian matrix, one must look at the eigenvalues of the Hessian matrix at the critical point. The following test can be applied at any critical point $a$ for which the Hessian matrix is invertible:

- If the Hessian is positive definite (equivalently, has all eigenvalues positive) at $a$, then $f$ attains a local minimum at $a$.

- If the Hessian is negative definite (equivalently, has all eigenvalues negative) at $a$, then $f$ attains a local maximum at $a$.

- If the Hessian has both positive and negative eigenvalues, then $a$ is a saddle point for $f$ (and in fact this is true even if $a$ is degenerate).

In those cases not listed above, the test is inconclusive.
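The eigenvalue test can be sketched without external libraries by computing the eigenvalues of the symmetric Hessian with the cyclic Jacobi rotation method. The function names, the convergence tolerance, and the threshold `zero_tol` below which an eigenvalue is treated as zero are assumptions of this sketch; in practice a numerical library routine for symmetric eigenvalues would normally be used instead.

```python
import math


def symmetric_eigenvalues(h, tol=1e-20, max_sweeps=50):
    """Eigenvalues of a real symmetric matrix by the cyclic Jacobi rotation method."""
    a = [list(map(float, row)) for row in h]  # work on a float copy
    n = len(a)
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                # Choose the rotation angle that makes the new a[p][q] zero
                theta = (a[q][q] - a[p][p]) / (2 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1))
                c = 1 / math.sqrt(t * t + 1)
                s = t * c
                for k in range(n):  # A <- A P (mixes columns p and q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # A <- P^T A (mixes rows p and q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return [a[i][i] for i in range(n)]


def classify_by_eigenvalues(hessian, zero_tol=1e-9):
    """Apply the eigenvalue test at a critical point; |eigenvalue| <= zero_tol counts as zero."""
    eig = symmetric_eigenvalues(hessian)
    pos = sum(v > zero_tol for v in eig)
    neg = sum(v < -zero_tol for v in eig)
    if pos == len(eig):
        return "local minimum"
    if neg == len(eig):
        return "local maximum"
    if pos and neg:
        return "saddle point"  # holds even when some eigenvalues are zero
    return "inconclusive"


print(classify_by_eigenvalues([[2, 1, 0], [1, 2, 0], [0, 0, -1]]))  # eigenvalues 1, 3, -1
```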

For functions of three or more variables, the determinant of the Hessian does not provide enough information to classify the critical point, because the number of jointly sufficient second-order conditions is equal to the number of variables, and the sign condition on the determinant of the Hessian is only one of the conditions. Note that in the one-variable case, the Hessian condition simply gives the usual second derivative test.

In the two-variable case, $D(a, b)$ and $f_{xx}(a, b)$ are the leading principal minors of the Hessian. The first two conditions listed above on the signs of these minors are the conditions for the positive or negative definiteness of the Hessian. For the general case of an arbitrary number $n$ of variables, there are $n$ sign conditions on the $n$ leading principal minors of the Hessian matrix that together are equivalent to positive or negative definiteness of the Hessian (Sylvester's criterion): for a local minimum, all the leading principal minors need to be positive, while for a local maximum, the minors with an odd number of rows and columns need to be negative and the minors with an even number of rows and columns need to be positive.
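Sylvester's criterion can be checked with exact arithmetic. The sketch below (hypothetical helper names) computes each leading principal minor, the determinant of the top-left $k \times k$ block, by Gaussian elimination over Python's `fractions.Fraction`, then applies the two sign patterns. If neither pattern holds, the Hessian is not definite, and the eigenvalue test is still needed to tell a saddle point from a degenerate case.

```python
from fractions import Fraction


def determinant(m):
    """Determinant by Gaussian elimination, using exact Fraction arithmetic."""
    a = [[Fraction(x) for x in row] for row in m]
    n = len(a)
    det = Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for k in range(col, n):
                a[r][k] -= factor * a[col][k]
    return det


def sylvester_test(h):
    """Classify a critical point from the leading principal minors of its symmetric Hessian h."""
    n = len(h)
    minors = [determinant([row[:k] for row in h[:k]]) for k in range(1, n + 1)]
    if all(m > 0 for m in minors):
        return "local minimum"  # positive definite
    if all((m < 0) if k % 2 else (m > 0) for k, m in enumerate(minors, start=1)):
        return "local maximum"  # negative definite: signs alternate -, +, -, ...
    return "not definite"


print(sylvester_test([[4, 1, 2], [1, 3, 0], [2, 0, 5]]))  # minors 4, 11, 43
```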

Resources

- Local Extrema, Critical Points, & Saddle Points of Multivariable Functions - Calculus 3 Video by The Organic Chemistry Tutor 2019

- Absolute Maximum and Minimum Values of Multivariable Functions - Calculus 3 Video by The Organic Chemistry Tutor 2019

Licensing

Content obtained and/or adapted from:

- Extrema and Points of Inflection, Wikibooks: Calculus under a CC BY-SA license

- Second partial derivative test, Wikipedia under a CC BY-SA license

- Saddle point, Wikipedia under a CC BY-SA license
