Newton's Method

In numerical analysis, Newton's method, also known as the Newton–Raphson method, named after Isaac Newton and Joseph Raphson, is a root-finding algorithm which produces successively better approximations to the roots (or zeroes) of a real-valued function. The most basic version starts with a single-variable function f defined for a real variable x, the function's derivative f′, and an initial guess x0 for a root of f. If the function satisfies sufficient assumptions and the initial guess is close, then

is a better approximation of the root than x0. Geometrically, (x1, 0) is the intersection of the x-axis and the tangent of the graph of f at (x0, f(x0)): that is, the improved guess is the unique root of the linear approximation at the initial point. The process is repeated as

until a sufficiently precise value is reached. This algorithm is first in the class of Householder's methods, succeeded by Halley's method. The method can also be extended to complex functions and to systems of equations.

Description

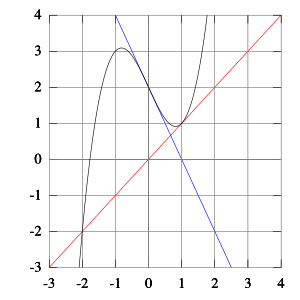

The idea is to start with an initial guess which is reasonably close to the true root, then to approximate the function by its tangent line using calculus, and finally to compute the x-intercept of this tangent line by elementary algebra. This x-intercept will typically be a better approximation to the original function's root than the first guess, and the method can be iterated.

More formally, suppose f : (a, b) → ℝ is a differentiable function defined on the interval (a, b) with values in the real numbers ℝ, and we have some current approximation xn. Then we can derive the formula for a better approximation, xn + 1 by referring to the diagram on the right. The equation of the tangent line to the curve at is

where 'f′ denotes the derivative. The x-intercept of this line (the value of x which makes y = 0) is taken as the next approximation, xn + 1, to the root, so that the equation of the tangent line is satisfied when (x, y) = (xn + 1, 0):

Solving for xn + 1 gives

We start the process with some arbitrary initial value x0. (The closer to the zero, the better. But, in the absence of any intuition about where the zero might lie, a "guess and check" method might narrow the possibilities to a reasonably small interval by appealing to the intermediate value theorem.) The method will usually converge, provided this initial guess is close enough to the unknown zero, and that f′(x0) ≠ 0. Furthermore, for a zero of multiplicity 1, the convergence is at least quadratic (see rate of convergence) in a neighborhood of the zero, which intuitively means that the number of correct digits roughly doubles in every step. More details can be found in the analysis section below.

Householder's methods are similar but have higher order for even faster convergence. However, the extra computations required for each step can slow down the overall performance relative to Newton's method, particularly if f or its derivatives are computationally expensive to evaluate.

Analysis

Suppose that the function f has a zero at α, i.e., , and f is differentiable in a neighborhood of α.

If f is continuously differentiable and its derivative is nonzero at α, then there exists a neighborhood of α such that for all starting values x0 in that neighborhood, the sequence (xn) will converge to α.

If the function is continuously differentiable and its derivative is not 0 at α and it has a second derivative at α then the convergence is quadratic or faster. If the second derivative is not 0 at α then the convergence is merely quadratic. If the third derivative exists and is bounded in a neighborhood of α, then:

where

If the derivative is 0 at α, then the convergence is usually only linear. Specifically, if f is twice continuously differentiable, and f″(α) ≠ 0, then there exists a neighborhood of α such that, for all starting values x0 in that neighborhood, the sequence of iterates converges linearly, with rate . Alternatively, if and f′(x) ≠ 0 for x ≠ α, x in a neighborhood U of α, α being a zero of multiplicity r, and if f ∈ Cr(U), then there exists a neighborhood of α such that, for all starting values x0 in that neighborhood, the sequence of iterates converges linearly.

However, even linear convergence is not guaranteed in pathological situations.

In practice, these results are local, and the neighborhood of convergence is not known in advance. But there are also some results on global convergence: for instance, given a right neighborhood U+ of α, if f is twice differentiable in U+ and if f′ ≠ 0, f · f″ > 0 in U+, then, for each x0 in U+ the sequence xk is monotonically decreasing to α.

Proof of quadratic convergence for Newton's iterative method

According to Taylor's theorem, any function f(x) which has a continuous second derivative can be represented by an expansion about a point that is close to a root of f(x). Suppose this root is α. Then the expansion of f(α) about xn is:

- (1)

where the Lagrange form of the Taylor series expansion remainder is

where ξn is in between xn and α.

Since α is the root, (1) becomes:

- (2)

Dividing equation (2) by f′(xn) and rearranging gives

- (3)

Remembering that xn + 1 is defined by

- (4)

one finds that

That is,

- (5)

Taking the absolute value of both sides gives

- (6)

Equation (6) shows that the rate of convergence is at least quadratic if the following conditions are satisfied:

- f′(x) ≠ 0; for all x ∈ I, where I is the interval [α − r, α + r] for some r ≥ |α − x0|;

- f″(x) is continuous, for all x ∈ I;

- x0 is sufficiently close to the root α.

The term sufficiently close in this context means the following:

- a. Taylor approximation is accurate enough such that we can ignore higher order terms;

- b. |

- for some C < ∞;

- c.

- for n ∈ ℤ, n ≥ 0 and C satisfying condition b.

Finally, (6) can be expressed in the following way:

where M is the supremum of the variable coefficient of εn2 on the interval I defined in condition 1, that is:

The initial point x0 has to be chosen such that conditions 1 to 3 are satisfied, where the third condition requires that M |ε0| < 1.

Basins of attraction

The disjoint subsets of the basins of attraction—the regions of the real number line such that within each region iteration from any point leads to one particular root—can be infinite in number and arbitrarily small. For example, for the function , the following initial conditions are in successive basins of attraction:

2.352 875 27 converges to 4; 2.352 841 72 converges to −3; 2.352 837 35 converges to 4; 2.352 836 327 converges to −3; 2.352 836 323 converges to 1.

Failure analysis

Newton's method is only guaranteed to converge if certain conditions are satisfied. If the assumptions made in the proof of quadratic convergence are met, the method will converge. For the following subsections, failure of the method to converge indicates that the assumptions made in the proof were not met.

Bad starting points

In some cases the conditions on the function that are necessary for convergence are satisfied, but the point chosen as the initial point is not in the interval where the method converges. This can happen, for example, if the function whose root is sought approaches zero asymptotically as x goes to ∞ or −∞. In such cases a different method, such as bisection, should be used to obtain a better estimate for the zero to use as an initial point.

Iteration point is stationary

Consider the function:

It has a maximum at x = 0 and solutions of f(x) = 0 at x = ±1. If we start iterating from the stationary point x0 = 0 (where the derivative is zero), x1 will be undefined, since the tangent at (0, 1) is parallel to the x-axis:

The same issue occurs if, instead of the starting point, any iteration point is stationary. Even if the derivative is small but not zero, the next iteration will be a far worse approximation.

Starting point enters a cycle

For some functions, some starting points may enter an infinite cycle, preventing convergence. Let

and take 0 as the starting point. The first iteration produces 1 and the second iteration returns to 0 so the sequence will alternate between the two without converging to a root. In fact, this 2-cycle is stable: there are neighborhoods around 0 and around 1 from which all points iterate asymptotically to the 2-cycle (and hence not to the root of the function). In general, the behavior of the sequence can be very complex (see Newton fractal). The real solution of this equation is −1.769 292 35….

Derivative issues

If the function is not continuously differentiable in a neighborhood of the root then it is possible that Newton's method will always diverge and fail, unless the solution is guessed on the first try.

Derivative does not exist at root

A simple example of a function where Newton's method diverges is trying to find the cube root of zero. The cube root is continuous and infinitely differentiable, except for , where its derivative is undefined:

For any iteration point xn, the next iteration point will be:

The algorithm overshoots the solution and lands on the other side of the y-axis, farther away than it initially was; applying Newton's method actually doubles the distances from the solution at each iteration.

In fact, the iterations diverge to infinity for every , where 0 < α < . In the limiting case of (square root), the iterations will alternate indefinitely between points x0 and −x0, so they do not converge in this case either.

Discontinuous derivative

If the derivative is not continuous at the root, then convergence may fail to occur in any neighborhood of the root. Consider the function

Its derivative is:

Within any neighborhood of the root, this derivative keeps changing sign as x approaches 0 from the right (or from the left) while f(x) ≥ x − x2 > 0 for 0 < x < 1.

So is unbounded near the root, and Newton's method will diverge almost everywhere in any neighborhood of it, even though:

- the function is differentiable (and thus continuous) everywhere;

- the derivative at the root is nonzero;

- f is infinitely differentiable except at the root; and

- the derivative is bounded in a neighborhood of the root (unlike ).

Non-quadratic convergence

In some cases the iterates converge but do not converge as quickly as promised. In these cases simpler methods converge just as quickly as Newton's method.

Zero derivative

If the first derivative is zero at the root, then convergence will not be quadratic. Let

then and consequently

So convergence is not quadratic, even though the function is infinitely differentiable everywhere.

Similar problems occur even when the root is only "nearly" double. For example, let

Then the first few iterations starting at x0 = 1 are

- x0 = 1

- x1 = 0.500 250 376…

- x2 = 0.251 062 828…

- x3 = 0.127 507 934…

- x4 = 0.067 671 976…

- x5 = 0.041 224 176…

- x6 = 0.032 741 218…

- x7 = 0.031 642 362…

it takes six iterations to reach a point where the convergence appears to be quadratic.

No second derivative

If there is no second derivative at the root, then convergence may fail to be quadratic. Let

Then

And

except when x = 0 where it is undefined. Given xn,

which has approximately times as many bits of precision as xn has. This is less than the 2 times as many which would be required for quadratic convergence. So the convergence of Newton's method (in this case) is not quadratic, even though: the function is continuously differentiable everywhere; the derivative is not zero at the root; and f is infinitely differentiable except at the desired root.

Licensing

Content obtained and/or adapted from:

- Newton's Method, Wikipedia under a CC BY-SA license

![{\displaystyle f(x)={\sqrt[{3}]{x}}.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/0d0572ba8a8d137d46b3aee3602d7d5b99b549ee)