Difference between revisions of "Orthonormal Bases and the Gram-Schmidt Process"

(Created page with "The prior subsection suggests that projecting onto the line spanned by <math> \vec{s} </math> decomposes a vector <math>\vec{v}</math> into two parts <center> <TABLE border=0p...") |

|||

| Line 13: | Line 13: | ||

We will now develop that suggestion. | We will now develop that suggestion. | ||

| − | + | <blockquote style="background: white; border: 1px solid black; padding: 1em;"> | |

| − | + | '''Definition 2.1''' | |

| − | Vectors <math> \vec{v}_1,\dots,\vec{v}_k\in\mathbb{R}^n </math> are '''mutually orthogonal''' when any two are orthogonal: if <math> i\neq j </math> then the dot product <math> \vec{v}_i\cdot\vec{v}_j </math> is zero. | + | :Vectors <math> \vec{v}_1,\dots,\vec{v}_k\in\mathbb{R}^n </math> are '''mutually orthogonal''' when any two are orthogonal: if <math> i\neq j </math> then the dot product <math> \vec{v}_i\cdot\vec{v}_j </math> is zero. |

| − | + | </blockquote> | |

| − | + | <blockquote style="background: white; border: 1px solid black; padding: 1em;"> | |

| − | + | '''Theorem 2.2''' | |

| − | If the vectors in a set <math> \{\vec{v}_1,\dots,\vec{v}_k\}\subset\mathbb{R}^n </math> are mutually orthogonal and nonzero then that set is linearly independent. | + | : If the vectors in a set <math> \{\vec{v}_1,\dots,\vec{v}_k\}\subset\mathbb{R}^n </math> are mutually orthogonal and nonzero then that set is linearly independent. |

| − | + | </blockquote> | |

| − | + | Proof: | |

| − | |||

Consider a linear relationship | Consider a linear relationship | ||

<math> c_1\vec{v}_1+c_2\vec{v}_2+\dots+c_k\vec{v}_k=\vec{0} </math>. | <math> c_1\vec{v}_1+c_2\vec{v}_2+\dots+c_k\vec{v}_k=\vec{0} </math>. | ||

| Line 38: | Line 37: | ||

shows, since <math> \vec{v}_i </math> is nonzero, that <math> c_i </math> is zero. | shows, since <math> \vec{v}_i </math> is nonzero, that <math> c_i </math> is zero. | ||

| − | |||

| − | + | <blockquote style="background: white; border: 1px solid black; padding: 1em;"> | |

| − | + | '''Corollary 2.3''' | |

| − | If the vectors in a size <math> k </math> subset of a <math>k</math> dimensional space are mutually orthogonal and nonzero then that set is a basis for the space. | + | : If the vectors in a size <math> k </math> subset of a <math>k</math> dimensional space are mutually orthogonal and nonzero then that set is a basis for the space. |

| − | + | </blockquote> | |

| − | + | Proof: | |

| − | |||

Any linearly independent size <math> k </math> subset of a <math>k</math> dimensional space is a basis. | Any linearly independent size <math> k </math> subset of a <math>k</math> dimensional space is a basis. | ||

| − | |||

| − | Of course, the converse of | + | Of course, the converse of Corollary 2.3 |

does not hold— not every basis of every subspace | does not hold— not every basis of every subspace | ||

of <math>\mathbb{R}^n</math> is made of mutually orthogonal vectors. | of <math>\mathbb{R}^n</math> is made of mutually orthogonal vectors. | ||

| Line 57: | Line 53: | ||

consisting of mutually orthogonal vectors. | consisting of mutually orthogonal vectors. | ||

| − | + | <blockquote style="background: white; border: 1px solid black; padding: 1em;"> | |

| − | + | '''Example 2.4''': | |

The members <math>\vec{\beta}_1</math> and <math>\vec{\beta}_2</math> of this basis for <math>\mathbb{R}^2</math> | The members <math>\vec{\beta}_1</math> and <math>\vec{\beta}_2</math> of this basis for <math>\mathbb{R}^2</math> | ||

are not orthogonal. | are not orthogonal. | ||

| Line 92: | Line 88: | ||

</center> | </center> | ||

which leaves the part, <math>\vec{\kappa}_2</math> pictured above, of <math>\vec{\beta}_2</math> that is orthogonal to <math>\vec{\kappa}_1</math> (it is orthogonal by the definition of the projection onto the span of <math>\vec{\kappa}_1</math>). Note that, by the corollary, <math>\{\vec{\kappa}_1,\vec{\kappa}_2\}</math> is a basis for <math>\mathbb{R}^2</math>. | which leaves the part, <math>\vec{\kappa}_2</math> pictured above, of <math>\vec{\beta}_2</math> that is orthogonal to <math>\vec{\kappa}_1</math> (it is orthogonal by the definition of the projection onto the span of <math>\vec{\kappa}_1</math>). Note that, by the corollary, <math>\{\vec{\kappa}_1,\vec{\kappa}_2\}</math> is a basis for <math>\mathbb{R}^2</math>. | ||

| − | + | </blockquote> | |

| − | + | <blockquote style="background: white; border: 1px solid black; padding: 1em;"> | |

| − | + | '''Definition 2.5''': | |

| − | An '''orthogonal basis''' for a vector space is a basis of mutually orthogonal vectors. | + | :An '''orthogonal basis''' for a vector space is a basis of mutually orthogonal vectors. |

| − | + | </blockquote> | |

| − | + | <blockquote style="background: white; border: 1px solid black; padding: 1em;"> | |

| − | + | '''Example 2.6''': | |

To turn this basis for <math> \mathbb{R}^3 </math> | To turn this basis for <math> \mathbb{R}^3 </math> | ||

| Line 151: | Line 147: | ||

is a basis for the space. | is a basis for the space. | ||

| − | + | </blockquote> | |

The next result verifies that | The next result verifies that | ||

| Line 159: | Line 155: | ||

definition of orthogonality for other vector spaces). | definition of orthogonality for other vector spaces). | ||

| − | + | <blockquote style="background: white; border: 1px solid black; padding: 1em;"> | |

| − | + | '''Theorem 2.7 (Gram-Schmidt orthogonalization)''': | |

| − | If <math> \left\langle \vec{\beta}_1,\ldots\vec{\beta}_k \right\rangle </math> | + | :If <math> \left\langle \vec{\beta}_1,\ldots\vec{\beta}_k \right\rangle </math> |

is a basis for a subspace of <math> \mathbb{R}^n </math> then, where | is a basis for a subspace of <math> \mathbb{R}^n </math> then, where | ||

| Line 181: | Line 177: | ||

the <math> \vec{\kappa}\, </math>'s form an orthogonal basis for the same subspace. | the <math> \vec{\kappa}\, </math>'s form an orthogonal basis for the same subspace. | ||

| − | + | </blockquote> | |

| − | + | Proof: | |

| − | |||

We will use induction to check that each <math> \vec{\kappa}_i </math> is nonzero, | We will use induction to check that each <math> \vec{\kappa}_i </math> is nonzero, | ||

is in the span of <math>\left\langle \vec{\beta}_1,\ldots\vec{\beta}_i \right\rangle </math> | is in the span of <math>\left\langle \vec{\beta}_1,\ldots\vec{\beta}_i \right\rangle </math> | ||

| Line 285: | Line 280: | ||

The check that <math>\vec{\kappa}_3</math> is also | The check that <math>\vec{\kappa}_3</math> is also | ||

orthogonal to the other preceding vector <math>\vec{\kappa}_2</math> is similar. | orthogonal to the other preceding vector <math>\vec{\kappa}_2</math> is similar. | ||

| − | |||

| − | + | Beyond having the vectors in the basis be orthogonal, we can do more; we can arrange for each vector to have length one by dividing each by its own length (we can '''normalize''' the lengths). | |

| − | + | <blockquote style="background: white; border: 1px solid black; padding: 1em;"> | |

| − | + | '''Example 2.8''': | |

| − | Normalizing the length of each vector in the orthogonal basis of | + | Normalizing the length of each vector in the orthogonal basis of Example 2.6 produces this '''orthonormal basis'''. |

| − | |||

| − | produces this '''orthonormal basis'''. | ||

:<math> | :<math> | ||

| Line 302: | Line 294: | ||

\right\rangle | \right\rangle | ||

</math> | </math> | ||

| − | + | </blockquote> | |

Besides its intuitive appeal, and its analogy with the | Besides its intuitive appeal, and its analogy with the | ||

Revision as of 13:56, 8 October 2021

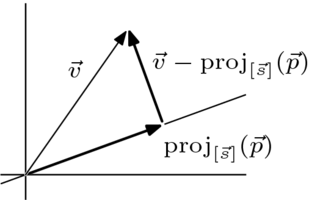

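The prior subsection suggests that projecting onto the line spanned by <math>\vec{s}</math> decomposes a vector <math>\vec{v}</math> into two parts

:<math>\vec{v}=\mbox{proj}_{[\vec{s}\,]}(\vec{v})\,+\,\left(\vec{v}-\mbox{proj}_{[\vec{s}\,]}(\vec{v})\right)</math>

that are orthogonal and so are "not interacting". We will now develop that suggestion.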

Definition 2.1

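- Vectors <math>\vec{v}_1,\dots,\vec{v}_k\in\mathbb{R}^n</math> are mutually orthogonal when any two are orthogonal: if <math>i\neq j</math> then the dot product <math>\vec{v}_i\cdot\vec{v}_j</math> is zero.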

Theorem 2.2

- If the vectors in a set <math>\{\vec{v}_1,\dots,\vec{v}_k\}\subset\mathbb{R}^n</math> are mutually orthogonal and nonzero then that set is linearly independent.

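Proof: Consider a linear relationship <math>c_1\vec{v}_1+c_2\vec{v}_2+\dots+c_k\vec{v}_k=\vec{0}</math>. If <math>i\in [1..k]</math> then taking the dot product of <math>\vec{v}_i</math> with both sides of the equation

:<math>\begin{array}{rl}\vec{v}_i\cdot(c_1\vec{v}_1+c_2\vec{v}_2+\dots+c_k\vec{v}_k)&=\vec{v}_i\cdot\vec{0}\\ \vec{v}_i\cdot(c_i\vec{v}_i)&=0\\ c_i\cdot(\vec{v}_i\cdot\vec{v}_i)&=0\end{array}</math>

(the other terms drop out because the vectors are mutually orthogonal) shows, since <math>\vec{v}_i</math> is nonzero, that <math>c_i</math> is zero.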
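The dot-product step in this proof can be tried numerically. The following is a minimal Python sketch rather than part of the original text; the three vectors and the helper names `dot` and `coefficients` are made up for illustration.

```python
from fractions import Fraction

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def coefficients(w, vectors):
    """Recover each c_i in w = c_1 v_1 + ... + c_k v_k, assuming the v_i are
    mutually orthogonal and nonzero, using c_i = (w . v_i) / (v_i . v_i)."""
    return [Fraction(dot(w, v), dot(v, v)) for v in vectors]

# Illustrative (assumed) mutually orthogonal nonzero vectors in R^3.
vs = [[1, 1, 0], [1, -1, 0], [0, 0, 2]]

# The zero vector forces every coefficient to be zero, as in the proof.
zero_coeffs = coefficients([0, 0, 0], vs)

# The combination 3*v1 - 2*v2 + 5*v3 gives back its own coefficients.
w = [1, 5, 10]
w_coeffs = coefficients(w, vs)
```

Exact `Fraction` arithmetic is used here only so that the comparison with zero is not blurred by floating-point rounding.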

Corollary 2.3

- If the vectors in a size <math>k</math> subset of a <math>k</math> dimensional space are mutually orthogonal and nonzero then that set is a basis for the space.

Proof: Any linearly independent size <math>k</math> subset of a <math>k</math> dimensional space is a basis.

Of course, the converse of Corollary 2.3 does not hold: not every basis of every subspace of <math>\mathbb{R}^n</math> is made of mutually orthogonal vectors. However, we can get the partial converse that for every subspace of <math>\mathbb{R}^n</math> there is at least one basis consisting of mutually orthogonal vectors.

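Example 2.4: The members <math>\vec{\beta}_1</math> and <math>\vec{\beta}_2</math> of this basis for <math>\mathbb{R}^2</math> are not orthogonal.

:<math>\left\langle \begin{pmatrix}4\\2\end{pmatrix},\begin{pmatrix}1\\3\end{pmatrix} \right\rangle</math>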

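However, we can derive from this basis a new basis for the same space that does have mutually orthogonal members. For the first member of the new basis we simply use <math>\vec{\beta}_1</math>.

:<math>\vec{\kappa}_1=\vec{\beta}_1</math>

For the second member of the new basis, we take away from <math>\vec{\beta}_2</math> its part in the direction of <math>\vec{\kappa}_1</math>,

:<math>\vec{\kappa}_2=\begin{pmatrix}1\\3\end{pmatrix}-\mbox{proj}_{[\vec{\kappa}_1]}(\begin{pmatrix}1\\3\end{pmatrix})=\begin{pmatrix}1\\3\end{pmatrix}-\begin{pmatrix}2\\1\end{pmatrix}=\begin{pmatrix}-1\\2\end{pmatrix}</math>

which leaves the part, <math>\vec{\kappa}_2</math> pictured above, of <math>\vec{\beta}_2</math> that is orthogonal to <math>\vec{\kappa}_1</math> (it is orthogonal by the definition of the projection onto the span of <math>\vec{\kappa}_1</math>). Note that, by the corollary, <math>\{\vec{\kappa}_1,\vec{\kappa}_2\}</math> is a basis for <math>\mathbb{R}^2</math>.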
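The subtraction in this example can be checked with a few lines of Python. This is a hedged sketch: the helper names `proj_onto_line` and `remove_component` are hypothetical, and the first basis vector is assumed to be (4, 2); any nonzero multiple of (2, 1) yields the same projection of (1, 3).

```python
from fractions import Fraction

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def proj_onto_line(v, s):
    """Projection of v onto the line spanned by the nonzero vector s."""
    c = Fraction(dot(v, s), dot(s, s))
    return [c * x for x in s]

def remove_component(v, s):
    """The part of v that is left after subtracting its projection onto s."""
    p = proj_onto_line(v, s)
    return [a - b for a, b in zip(v, p)]

kappa1 = [4, 2]   # assumed first basis vector (see the note above)
beta2 = [1, 3]    # second basis vector, as in the displayed computation

projection = proj_onto_line(beta2, kappa1)   # the part of beta2 along kappa1
kappa2 = remove_component(beta2, kappa1)     # the part orthogonal to kappa1
```

Under these assumptions `projection` is (2, 1) and `kappa2` is (-1, 2), and the dot product of `kappa1` with `kappa2` is zero.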

Definition 2.5:

- An orthogonal basis for a vector space is a basis of mutually orthogonal vectors.

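Example 2.6: To turn this basis for <math>\mathbb{R}^3</math>

:<math>\left\langle \begin{pmatrix}1\\1\\1\end{pmatrix},\begin{pmatrix}0\\2\\0\end{pmatrix},\begin{pmatrix}1\\0\\3\end{pmatrix} \right\rangle</math>

into an orthogonal basis, we take the first vector as it is given.

:<math>\vec{\kappa}_1=\begin{pmatrix}1\\1\\1\end{pmatrix}</math>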

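We get <math>\vec{\kappa}_2</math> by starting with the given second vector <math>\vec{\beta}_2</math> and subtracting away the part of it in the direction of <math>\vec{\kappa}_1</math>.

:<math>\vec{\kappa}_2=\begin{pmatrix}0\\2\\0\end{pmatrix}-\mbox{proj}_{[\vec{\kappa}_1]}(\begin{pmatrix}0\\2\\0\end{pmatrix})=\begin{pmatrix}0\\2\\0\end{pmatrix}-\begin{pmatrix}2/3\\2/3\\2/3\end{pmatrix}=\begin{pmatrix}-2/3\\4/3\\-2/3\end{pmatrix}</math>

Finally, we get <math>\vec{\kappa}_3</math> by taking the third given vector and subtracting the part of it in the direction of <math>\vec{\kappa}_1</math>, and also the part of it in the direction of <math>\vec{\kappa}_2</math>.

:<math>\vec{\kappa}_3=\begin{pmatrix}1\\0\\3\end{pmatrix}-\mbox{proj}_{[\vec{\kappa}_1]}(\begin{pmatrix}1\\0\\3\end{pmatrix})-\mbox{proj}_{[\vec{\kappa}_2]}(\begin{pmatrix}1\\0\\3\end{pmatrix})=\begin{pmatrix}-1\\0\\1\end{pmatrix}</math>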

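Again the corollary gives that

:<math>\left\langle \begin{pmatrix}1\\1\\1\end{pmatrix},\begin{pmatrix}-2/3\\4/3\\-2/3\end{pmatrix},\begin{pmatrix}-1\\0\\1\end{pmatrix} \right\rangle</math>

is a basis for the space.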

The next result verifies that the process used in those examples works with any basis for any subspace of an <math>\mathbb{R}^n</math> (we are restricted to <math>\mathbb{R}^n</math> only because we have not given a definition of orthogonality for other vector spaces).

Theorem 2.7 (Gram-Schmidt orthogonalization):

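- If <math>\left\langle \vec{\beta}_1,\ldots,\vec{\beta}_k \right\rangle</math> is a basis for a subspace of <math>\mathbb{R}^n</math> then, where

:<math>\begin{array}{rl}\vec{\kappa}_1&=\vec{\beta}_1\\ \vec{\kappa}_2&=\vec{\beta}_2-\mbox{proj}_{[\vec{\kappa}_1]}(\vec{\beta}_2)\\ \vec{\kappa}_3&=\vec{\beta}_3-\mbox{proj}_{[\vec{\kappa}_1]}(\vec{\beta}_3)-\mbox{proj}_{[\vec{\kappa}_2]}(\vec{\beta}_3)\\ &\vdots\\ \vec{\kappa}_k&=\vec{\beta}_k-\mbox{proj}_{[\vec{\kappa}_1]}(\vec{\beta}_k)-\cdots-\mbox{proj}_{[\vec{\kappa}_{k-1}]}(\vec{\beta}_k)\end{array}</math>

the <math>\vec{\kappa}\,</math>'s form an orthogonal basis for the same subspace.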
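The recurrence in the theorem translates almost line for line into code. Below is a minimal Python sketch, offered as an illustration rather than a reference implementation: the function name `gram_schmidt` is hypothetical, exact `Fraction` arithmetic is a choice made here to avoid rounding, and the sample input assumes the first basis vector is (1, 1, 1) alongside the vectors (0, 2, 0) and (1, 0, 3) from the worked computations.

```python
from fractions import Fraction

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(betas):
    """Return kappa_1..kappa_k, where each kappa_i is beta_i minus its
    projections onto the lines spanned by kappa_1..kappa_(i-1).

    Assumes the betas are linearly independent; otherwise some kappa is the
    zero vector and a later division raises ZeroDivisionError.
    """
    kappas = []
    for beta in betas:
        kappa = [Fraction(x) for x in beta]
        for prev in kappas:
            # Coefficient of proj_[prev](beta) = (beta . prev)/(prev . prev) * prev
            c = Fraction(dot(beta, prev), dot(prev, prev))
            kappa = [a - c * p for a, p in zip(kappa, prev)]
        kappas.append(kappa)
    return kappas

# Sample basis for R^3 (first vector assumed, see the note above).
basis = [[1, 1, 1], [0, 2, 0], [1, 0, 3]]
kappas = gram_schmidt(basis)
```

With this input the second and third outputs are (-2/3, 4/3, -2/3) and (-1, 0, 1). Each projection is taken of the original <math>\vec{\beta}_i</math>, as in the statement of the theorem, not of the partially reduced vector.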

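Proof: We will use induction to check that each <math>\vec{\kappa}_i</math> is nonzero, is in the span of <math>\left\langle \vec{\beta}_1,\ldots,\vec{\beta}_i \right\rangle</math> and is orthogonal to all preceding vectors: <math>\vec{\kappa}_1\cdot\vec{\kappa}_i=\cdots=\vec{\kappa}_{i-1}\cdot\vec{\kappa}_i=0</math>. With those, and with Corollary 2.3, we will have that <math>\left\langle \vec{\kappa}_1,\ldots,\vec{\kappa}_k \right\rangle</math> is a basis for the same space as <math>\left\langle \vec{\beta}_1,\ldots,\vec{\beta}_k \right\rangle</math>.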

We shall cover the cases up to <math>i=3</math>, which give the sense of the argument. Completing the details is Problem 15.

The <math>i=1</math> case is trivial: setting <math>\vec{\kappa}_1</math> equal to <math>\vec{\beta}_1</math> makes it a nonzero vector since <math>\vec{\beta}_1</math> is a member of a basis, it is obviously in the desired span, and the "orthogonal to all preceding vectors" condition is vacuously met.

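For the <math>i=2</math> case, expand the definition of <math>\vec{\kappa}_2</math>.

:<math>\vec{\kappa}_2=\vec{\beta}_2-\mbox{proj}_{[\vec{\kappa}_1]}(\vec{\beta}_2)=\vec{\beta}_2-\frac{\vec{\beta}_2\cdot\vec{\kappa}_1}{\vec{\kappa}_1\cdot\vec{\kappa}_1}\cdot\vec{\kappa}_1=\vec{\beta}_2-\frac{\vec{\beta}_2\cdot\vec{\kappa}_1}{\vec{\kappa}_1\cdot\vec{\kappa}_1}\cdot\vec{\beta}_1</math>

This expansion shows that <math>\vec{\kappa}_2</math> is nonzero or else this would be a non-trivial linear dependence among the <math>\vec{\beta}</math>'s (it is nontrivial because the coefficient of <math>\vec{\beta}_2</math> is <math>1</math>) and also shows that <math>\vec{\kappa}_2</math> is in the desired span. Finally, <math>\vec{\kappa}_2</math> is orthogonal to the only preceding vector

:<math>\vec{\kappa}_1\cdot\vec{\kappa}_2=\vec{\kappa}_1\cdot(\vec{\beta}_2-\mbox{proj}_{[\vec{\kappa}_1]}(\vec{\beta}_2))=0</math>

because this projection is orthogonal.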

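The <math>i=3</math> case is the same as the <math>i=2</math> case except for one detail. As in the <math>i=2</math> case, expanding the definition

:<math>\begin{array}{rl}\vec{\kappa}_3&=\vec{\beta}_3-\frac{\vec{\beta}_3\cdot\vec{\kappa}_1}{\vec{\kappa}_1\cdot\vec{\kappa}_1}\cdot\vec{\kappa}_1-\frac{\vec{\beta}_3\cdot\vec{\kappa}_2}{\vec{\kappa}_2\cdot\vec{\kappa}_2}\cdot\vec{\kappa}_2\\ &=\vec{\beta}_3-\frac{\vec{\beta}_3\cdot\vec{\kappa}_1}{\vec{\kappa}_1\cdot\vec{\kappa}_1}\cdot\vec{\beta}_1-\frac{\vec{\beta}_3\cdot\vec{\kappa}_2}{\vec{\kappa}_2\cdot\vec{\kappa}_2}\cdot\bigl(\vec{\beta}_2-\frac{\vec{\beta}_2\cdot\vec{\kappa}_1}{\vec{\kappa}_1\cdot\vec{\kappa}_1}\cdot\vec{\beta}_1\bigr)\end{array}</math>

shows that <math>\vec{\kappa}_3</math> is nonzero and is in the span. A calculation shows that <math>\vec{\kappa}_3</math> is orthogonal to the preceding vector <math>\vec{\kappa}_1</math>.

:<math>\begin{array}{rl}\vec{\kappa}_1\cdot\vec{\kappa}_3&=\vec{\kappa}_1\cdot\bigl(\vec{\beta}_3-\mbox{proj}_{[\vec{\kappa}_1]}(\vec{\beta}_3)-\mbox{proj}_{[\vec{\kappa}_2]}(\vec{\beta}_3)\bigr)\\ &=\vec{\kappa}_1\cdot\bigl(\vec{\beta}_3-\mbox{proj}_{[\vec{\kappa}_1]}(\vec{\beta}_3)\bigr)-\vec{\kappa}_1\cdot\mbox{proj}_{[\vec{\kappa}_2]}(\vec{\beta}_3)\\ &=0\end{array}</math>

(Here's the difference from the <math>i=2</math> case: the second line has two kinds of terms. The first term is zero because this projection is orthogonal, as in the <math>i=2</math> case. The second term is zero because <math>\vec{\kappa}_1</math> is orthogonal to <math>\vec{\kappa}_2</math> and so is orthogonal to any vector in the line spanned by <math>\vec{\kappa}_2</math>.) The check that <math>\vec{\kappa}_3</math> is also orthogonal to the other preceding vector <math>\vec{\kappa}_2</math> is similar.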

Beyond having the vectors in the basis be orthogonal, we can do more; we can arrange for each vector to have length one by dividing each by its own length (we can normalize the lengths).

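Example 2.8: Normalizing the length of each vector in the orthogonal basis of Example 2.6 produces this orthonormal basis.

:<math>\left\langle \begin{pmatrix}1/\sqrt{3}\\1/\sqrt{3}\\1/\sqrt{3}\end{pmatrix},\begin{pmatrix}-1/\sqrt{6}\\2/\sqrt{6}\\-1/\sqrt{6}\end{pmatrix},\begin{pmatrix}-1/\sqrt{2}\\0\\1/\sqrt{2}\end{pmatrix} \right\rangle</math>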
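Normalizing is a one-line operation per vector. The sketch below is illustrative only: the helper name `normalize` is hypothetical, floating-point arithmetic is used because the lengths involve square roots, and the first vector of the orthogonal basis is assumed to be (1, 1, 1).

```python
import math

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def normalize(v):
    """Divide v by its own length so that the result has length one."""
    length = math.sqrt(dot(v, v))
    if length == 0:
        raise ValueError("the zero vector cannot be normalized")
    return [x / length for x in v]

# Orthogonal basis from the earlier example (first vector assumed).
orthogonal = [[1, 1, 1], [-2 / 3, 4 / 3, -2 / 3], [-1, 0, 1]]
orthonormal = [normalize(v) for v in orthogonal]
```

Scaling by a nonzero number does not change which dot products are zero, so the normalized vectors remain mutually orthogonal.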

Besides its intuitive appeal, and its analogy with the standard basis for <math>\mathbb{R}^n</math>, an orthonormal basis also simplifies some computations.

Resources

- Gram-Schmidt Orthogonalization, Wikibooks: Linear Algebra

![{\displaystyle \displaystyle {\vec {v}}={\mbox{proj}}_{[{\vec {s}}\,]}({\vec {v}})\,+\,\left({\vec {v}}-{\mbox{proj}}_{[{\vec {s}}\,]}({\vec {v}})\right)}](https://wikimedia.org/api/rest_v1/media/math/render/svg/0da7af968d921768d5eef2f763959f0628fe2b2b)

![{\displaystyle i\in [1..k]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/bef98d4d493897dca6d5d3c0366c85f70070fb29)

![{\displaystyle \displaystyle {\vec {\kappa }}_{2}={\begin{pmatrix}1\\3\end{pmatrix}}-{\mbox{proj}}_{\scriptstyle [{\vec {\kappa }}_{1}]}({\begin{pmatrix}1\\3\end{pmatrix}})={\begin{pmatrix}1\\3\end{pmatrix}}-{\begin{pmatrix}2\\1\end{pmatrix}}={\begin{pmatrix}-1\\2\end{pmatrix}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/bb4ea1b1e880ad1cf1aaf71487651e6d491b549c)

![{\displaystyle {\vec {\kappa }}_{2}={\begin{pmatrix}0\\2\\0\end{pmatrix}}-{\mbox{proj}}_{[{\vec {\kappa }}_{1}]}({\begin{pmatrix}0\\2\\0\end{pmatrix}})={\begin{pmatrix}0\\2\\0\end{pmatrix}}-{\begin{pmatrix}2/3\\2/3\\2/3\end{pmatrix}}={\begin{pmatrix}-2/3\\4/3\\-2/3\end{pmatrix}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/efaef6f72068cd400dba6470e842cd8c0e5f4caf)

![{\displaystyle {\vec {\kappa }}_{3}={\begin{pmatrix}1\\0\\3\end{pmatrix}}-{\mbox{proj}}_{[{\vec {\kappa }}_{1}]}({\begin{pmatrix}1\\0\\3\end{pmatrix}})-{\mbox{proj}}_{[{\vec {\kappa }}_{2}]}({\begin{pmatrix}1\\0\\3\end{pmatrix}})={\begin{pmatrix}-1\\0\\1\end{pmatrix}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/5305a998b025fa73d4e7c301c77d80a29bce293c)

![{\displaystyle {\begin{array}{rl}{\vec {\kappa }}_{1}&={\vec {\beta }}_{1}\\{\vec {\kappa }}_{2}&={\vec {\beta }}_{2}-{\mbox{proj}}_{[{\vec {\kappa }}_{1}]}({{\vec {\beta }}_{2}})\\{\vec {\kappa }}_{3}&={\vec {\beta }}_{3}-{\mbox{proj}}_{[{\vec {\kappa }}_{1}]}({{\vec {\beta }}_{3}})-{\mbox{proj}}_{[{\vec {\kappa }}_{2}]}({{\vec {\beta }}_{3}})\\&\vdots \\{\vec {\kappa }}_{k}&={\vec {\beta }}_{k}-{\mbox{proj}}_{[{\vec {\kappa }}_{1}]}({{\vec {\beta }}_{k}})-\cdots -{\mbox{proj}}_{[{\vec {\kappa }}_{k-1}]}({{\vec {\beta }}_{k}})\end{array}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/f7b81f989d7eaa8606b764dc1db82f5e8c753798)

![{\displaystyle {\vec {\kappa }}_{2}={\vec {\beta }}_{2}-{\mbox{proj}}_{[{\vec {\kappa }}_{1}]}({{\vec {\beta }}_{2}})={\vec {\beta }}_{2}-{\frac {{\vec {\beta }}_{2}\cdot {\vec {\kappa }}_{1}}{{\vec {\kappa }}_{1}\cdot {\vec {\kappa }}_{1}}}\cdot {\vec {\kappa }}_{1}={\vec {\beta }}_{2}-{\frac {{\vec {\beta }}_{2}\cdot {\vec {\kappa }}_{1}}{{\vec {\kappa }}_{1}\cdot {\vec {\kappa }}_{1}}}\cdot {\vec {\beta }}_{1}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/104528b528834b806090892ff62d5ff8d34e848f)

![{\displaystyle {\vec {\kappa }}_{1}\cdot {\vec {\kappa }}_{2}={\vec {\kappa }}_{1}\cdot ({\vec {\beta }}_{2}-{\mbox{proj}}_{[{\vec {\kappa }}_{1}]}({{\vec {\beta }}_{2}}))=0}](https://wikimedia.org/api/rest_v1/media/math/render/svg/3cff7a28fdd5309be9b0afec02f14c5addae41dc)

![{\displaystyle {\begin{array}{rl}{\vec {\kappa }}_{1}\cdot {\vec {\kappa }}_{3}&={\vec {\kappa }}_{1}\cdot {\bigl (}{\vec {\beta }}_{3}-{\mbox{proj}}_{[{\vec {\kappa }}_{1}]}({{\vec {\beta }}_{3}})-{\mbox{proj}}_{[{\vec {\kappa }}_{2}]}({{\vec {\beta }}_{3}}){\bigr )}\\&={\vec {\kappa }}_{1}\cdot {\bigl (}{\vec {\beta }}_{3}-{\mbox{proj}}_{[{\vec {\kappa }}_{1}]}({{\vec {\beta }}_{3}}){\bigr )}-{\vec {\kappa }}_{1}\cdot {\mbox{proj}}_{[{\vec {\kappa }}_{2}]}({{\vec {\beta }}_{3}})\\&=0\end{array}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/6fa72b070f98ecf2d0c3c8d3101ec1e34844902c)