Difference between revisions of "The Cross Product"



| + | In mathematics, the '''cross product''' or '''vector product''' (occasionally '''directed area product''', to emphasize its geometric significance) is a binary operation on two vectors in a three-dimensional oriented Euclidean vector space (named here <math>E</math>), and is denoted by the symbol <math>\times</math>. Given two linearly independent vectors {{math|'''a'''}} and {{math|'''b'''}}, the cross product, {{math|'''a''' × '''b'''}} (read "a cross b"), is a vector that is perpendicular to both {{math|'''a'''}} and {{math|'''b'''}}, and thus normal to the plane containing them. It has many applications in mathematics, physics, engineering, and computer programming. It should not be confused with the dot product (projection product). | ||

| + | |||

| + | If two vectors have the same direction or have the exact opposite direction from each other (that is, they are ''not'' linearly independent), or if either one has zero length, then their cross product is zero. More generally, the magnitude of the product equals the area of a parallelogram with the vectors for sides; in particular, the magnitude of the product of two perpendicular vectors is the product of their lengths. | ||

| + | |||

| + | The cross product is anticommutative (that is, '''a''' × '''b''' = − '''b''' × '''a''') and is distributive over addition (that is, '''a''' × ('''b''' + '''c''') = '''a''' × '''b''' + '''a''' × '''c'''). The space <math>E</math> together with the cross product is an algebra over the real numbers, which is neither commutative nor associative, but is a Lie algebra with the cross product being the Lie bracket. | ||

| + | |||

| + | Like the dot product, it depends on the metric of Euclidean space, but unlike the dot product, it also depends on a choice of orientation (or "handedness") of the space, which is why an oriented space is needed. In connection with the cross product, the exterior product of vectors can be used in arbitrary dimensions (with a bivector or 2-form result) and is independent of the orientation of the space. | ||

| + | |||

| + | The product can be generalized in various ways. Using the orientation and metric structure just as for the traditional 3-dimensional cross product, one can, in {{mvar|n}} dimensions, take the product of {{math|''n'' − 1}} vectors to produce a vector perpendicular to all of them. But if the product is limited to non-trivial binary products with vector results, it exists only in three and seven dimensions. | ||

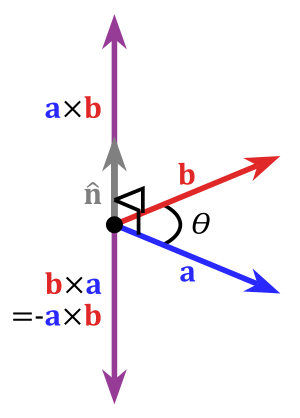

| + | [[File:Cross product vector.svg|thumb|right|The cross product with respect to a right-handed coordinate system]] | ||

| + | |||

| + | == Definition == | ||

| + | [[File:Right hand rule cross product.svg|thumb|Finding the direction of the cross product by the right-hand rule.]] | ||

| + | |||

| + | The cross product of two vectors '''a''' and '''b''' is defined only in three-dimensional space and is denoted by '''a''' × '''b'''. In physics and applied mathematics, the wedge notation '''a''' ∧ '''b''' is often used (in conjunction with the name ''vector product''), although in pure mathematics such notation is usually reserved for just the exterior product, an abstraction of the vector product to {{math|''n''}} dimensions. | ||

| + | |||

| + | The cross product '''a''' × '''b''' is defined as a vector '''c''' that is perpendicular (orthogonal) to both '''a''' and '''b''', with a direction given by the right-hand rule and a magnitude equal to the area of the parallelogram that the vectors span. | ||

| + | |||

| + | The cross product is defined by the formula | ||

| + | |||

| + | :<math>\mathbf{a} \times \mathbf{b} = \left\| \mathbf{a} \right\| \left\| \mathbf{b} \right\| \sin (\theta) \ \mathbf{n}</math> | ||

| + | |||

| + | where: | ||

| + | |||

| + | * ''θ'' is the angle between '''a''' and '''b''' in the plane containing them (hence, it is between 0° and 180°) | ||

| + | * ‖'''a'''‖ and ‖'''b'''‖ are the magnitudes of vectors '''a''' and '''b''' | ||

| + | * and '''n''' is a unit vector perpendicular to the plane containing '''a''' and '''b''', in the direction given by the right-hand rule (illustrated). | ||

| + | |||

| + | If the vectors '''a''' and '''b''' are parallel (that is, the angle ''θ'' between them is either 0° or 180°), by the above formula, the cross product of '''a''' and '''b''' is the zero vector '''0'''. | ||

| + | |||

| + | === Direction === | ||

| + | [[File:Cross product.gif|left|thumb|The cross product '''a''' × '''b''' (vertical, in purple) changes as the angle between the vectors '''a''' (blue) and '''b''' (red) changes. The cross product is always orthogonal to both vectors, and has magnitude zero when the vectors are parallel and maximum magnitude ‖'''a'''‖‖'''b'''‖ when they are orthogonal.]] | ||

| + | |||

| + | By convention, the direction of the vector '''n''' is given by the right-hand rule, where one simply points the forefinger of the right hand in the direction of '''a''' and the middle finger in the direction of '''b'''. Then, the vector '''n''' is coming out of the thumb (see the adjacent picture). Using this rule implies that the cross product is anti-commutative; that is, '''b''' × '''a''' = −('''a''' × '''b'''). By pointing the forefinger toward '''b''' first, and then pointing the middle finger toward '''a''', the thumb will be forced in the opposite direction, reversing the sign of the product vector. | ||

| + | |||

| + | As the cross product operator depends on the orientation of the space (as made explicit in the definition above), the cross product of two vectors is not a "true" vector, but a ''pseudovector''. | ||

| + | |||

| + | == Names == | ||

| + | [[File:Sarrus rule.svg|upright=1.25|thumb|right|According to Sarrus's rule, the determinant of a 3×3 matrix involves multiplications between matrix elements identified by crossed diagonals]] | ||

| + | |||

| + | In 1881, Josiah Willard Gibbs, and independently Oliver Heaviside, introduced both the dot product and the cross product using a period ('''a''' . '''b''') and an "x" ('''a''' x '''b'''), respectively, to denote them. | ||

| + | |||

| + | In 1877, to emphasize the fact that the result of a dot product is a scalar while the result of a cross product is a vector, William Kingdon Clifford coined the alternative names '''scalar product''' and '''vector product''' for the two operations. These alternative names are still widely used in the literature. | ||

| + | |||

| + | Both the cross notation ('''a''' × '''b''') and the name '''cross product''' were possibly inspired by the fact that each scalar component of '''a''' × '''b''' is computed by multiplying non-corresponding components of '''a''' and '''b'''. Conversely, a dot product '''a''' ⋅ '''b''' involves multiplications between corresponding components of '''a''' and '''b'''. As explained below, the cross product can be expressed in the form of a determinant of a special 3 × 3 matrix. According to Sarrus's rule, this involves multiplications between matrix elements identified by crossed diagonals. | ||

| + | |||

| + | ==Computing== | ||

| + | |||

| + | ===Coordinate notation=== | ||

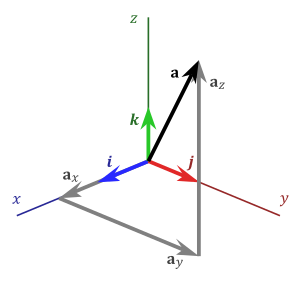

| + | [[File:3D Vector.svg|300px|thumb|right|Standard basis vectors ('''i''', '''j''', '''k''', also denoted '''e'''<sub>1</sub>, '''e'''<sub>2</sub>, '''e'''<sub>3</sub>) and vector components of '''a''' ('''a'''<sub>x</sub>, '''a'''<sub>y</sub>, '''a'''<sub>z</sub>, also denoted '''a'''<sub>1</sub>, '''a'''<sub>2</sub>, '''a'''<sub>3</sub>)]] | ||

| + | If ('''i''', '''j''', '''k''') is a positively oriented orthonormal basis, the basis vectors satisfy the following equalities: | ||

| + | :<math>\begin{alignat}{2} | ||

| + | \mathbf{\color{blue}{i}}&\times\mathbf{\color{red}{j}} &&= \mathbf{\color{green}{k}}\\ | ||

| + | \mathbf{\color{red}{j}}&\times\mathbf{\color{green}{k}} &&= \mathbf{\color{blue}{i}}\\ | ||

| + | \mathbf{\color{green}{k}}&\times\mathbf{\color{blue}{i}} &&= \mathbf{\color{red}{j}} | ||

| + | \end{alignat}</math> | ||

| + | |||

| + | which imply, by the anticommutativity of the cross product, that | ||

| + | :<math>\begin{alignat}{2} | ||

| + | \mathbf{\color{red}{j}}&\times\mathbf{\color{blue}{i}} &&= -\mathbf{\color{green}{k}}\\ | ||

| + | \mathbf{\color{green}{k}}&\times\mathbf{\color{red}{j}} &&= -\mathbf{\color{blue}{i}}\\ | ||

| + | \mathbf{\color{blue}{i}}&\times\mathbf{\color{green}{k}} &&= -\mathbf{\color{red}{j}} | ||

| + | \end{alignat}</math> | ||

| + | |||

| + | The anticommutativity of the cross product (and the obvious lack of linear independence) also implies that | ||

| + | :<math>\mathbf{\color{blue}{i}}\times\mathbf{\color{blue}{i}} = \mathbf{\color{red}{j}}\times\mathbf{\color{red}{j}} = \mathbf{\color{green}{k}}\times\mathbf{\color{green}{k}} = \mathbf{0}</math> (the zero vector). | ||

| + | |||

| + | These equalities, together with the distributivity and linearity of the cross product (though neither follows easily from the definition given above), are sufficient to determine the cross product of any two vectors '''a''' and '''b'''. Each vector can be defined as the sum of three orthogonal components parallel to the standard basis vectors: | ||

| + | :<math>\begin{alignat}{3} | ||

| + | \mathbf{a} &= a_1\mathbf{\color{blue}{i}} &&+ a_2\mathbf{\color{red}{j}} &&+ a_3\mathbf{\color{green}{k}} \\ | ||

| + | \mathbf{b} &= b_1\mathbf{\color{blue}{i}} &&+ b_2\mathbf{\color{red}{j}} &&+ b_3\mathbf{\color{green}{k}} | ||

| + | \end{alignat}</math> | ||

| + | |||

| + | Their cross product '''a''' × '''b''' can be expanded using distributivity: | ||

| + | :<math> \begin{align} | ||

| + | \mathbf{a}\times\mathbf{b} = {} &(a_1\mathbf{\color{blue}{i}} + a_2\mathbf{\color{red}{j}} + a_3\mathbf{\color{green}{k}}) \times (b_1\mathbf{\color{blue}{i}} + b_2\mathbf{\color{red}{j}} + b_3\mathbf{\color{green}{k}})\\ | ||

| + | = {} &a_1b_1(\mathbf{\color{blue}{i}} \times \mathbf{\color{blue}{i}}) + a_1b_2(\mathbf{\color{blue}{i}} \times \mathbf{\color{red}{j}}) + a_1b_3(\mathbf{\color{blue}{i}} \times \mathbf{\color{green}{k}}) + {}\\ | ||

| + | &a_2b_1(\mathbf{\color{red}{j}} \times \mathbf{\color{blue}{i}}) + a_2b_2(\mathbf{\color{red}{j}} \times \mathbf{\color{red}{j}}) + a_2b_3(\mathbf{\color{red}{j}} \times \mathbf{\color{green}{k}}) + {}\\ | ||

| + | &a_3b_1(\mathbf{\color{green}{k}} \times \mathbf{\color{blue}{i}}) + a_3b_2(\mathbf{\color{green}{k}} \times \mathbf{\color{red}{j}}) + a_3b_3(\mathbf{\color{green}{k}} \times \mathbf{\color{green}{k}})\\ | ||

| + | \end{align}</math> | ||

| + | |||

| + | This can be interpreted as the decomposition of '''a''' × '''b''' into the sum of nine simpler cross products involving vectors aligned with '''i''', '''j''', or '''k'''. Each one of these nine cross products operates on two vectors that are easy to handle as they are either parallel or orthogonal to each other. From this decomposition, by using the above-mentioned equalities and collecting similar terms, we obtain: | ||

| + | :<math>\begin{align} | ||

| + | \mathbf{a}\times\mathbf{b} = {} &\quad\ a_1b_1\mathbf{0} + a_1b_2\mathbf{\color{green}{k}} - a_1b_3\mathbf{\color{red}{j}} \\ | ||

| + | &- a_2b_1\mathbf{\color{green}{k}} + a_2b_2\mathbf{0} + a_2b_3\mathbf{\color{blue}{i}} \\ | ||

| + | &+ a_3b_1\mathbf{\color{red}{j}}\ - a_3b_2\mathbf{\color{blue}{i}}\ + a_3b_3\mathbf{0} \\ | ||

| + | = {} &(a_2b_3 - a_3b_2)\mathbf{\color{blue}{i}} + (a_3b_1 - a_1b_3)\mathbf{\color{red}{j}} + (a_1b_2 - a_2b_1)\mathbf{\color{green}{k}}\\ | ||

| + | \end{align}</math> | ||

| + | |||

| + | meaning that the three scalar components of the resulting vector '''s''' = ''s''<sub>1</sub>'''i''' + ''s''<sub>2</sub>'''j''' + ''s''<sub>3</sub>'''k''' = '''a''' × '''b''' are | ||

| + | :<math>\begin{align} | ||

| + | s_1 &= a_2b_3-a_3b_2\\ | ||

| + | s_2 &= a_3b_1-a_1b_3\\ | ||

| + | s_3 &= a_1b_2-a_2b_1 | ||

| + | \end{align}</math> | ||

| + | |||

| + | Using column vectors, we can represent the same result as follows: | ||

| + | :<math>\begin{bmatrix}s_1\\s_2\\s_3\end{bmatrix}=\begin{bmatrix}a_2b_3-a_3b_2\\a_3b_1-a_1b_3\\a_1b_2-a_2b_1\end{bmatrix}</math> | ||
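The three component formulas translate directly into code. The following is a minimal Python sketch, assuming vectors are plain 3-tuples of components in a right-handed orthonormal basis; the helper name cross is chosen here for illustration only.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]

def cross(a: Sequence[float], b: Sequence[float]) -> Vec3:
    """Cross product a x b using the component formulas s1, s2, s3."""
    a1, a2, a3 = a
    b1, b2, b3 = b
    return (a2 * b3 - a3 * b2,   # s1
            a3 * b1 - a1 * b3,   # s2
            a1 * b2 - a2 * b1)   # s3

# Standard basis vectors i, j, k of a right-handed orthonormal basis.
i, j, k = (1, 0, 0), (0, 1, 0), (0, 0, 1)
print(cross(i, j))                  # (0, 0, 1), that is, k
print(cross(j, i))                  # (0, 0, -1), that is, -k
print(cross((1, 2, 3), (4, 5, 6)))  # (-3, 6, -3)
```

With integer inputs the arithmetic is exact, so the basis relations i × j = k, j × k = i and k × i = j can be checked by plain equality.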

| + | |||

| + | ===Matrix notation=== | ||

| + | [[File:Sarrus_rule_cross_product_ab.svg|thumb|Use of Sarrus's rule to find the cross product of '''a''' and '''b''']] | ||

| + | The cross product can also be expressed as the formal determinant: | ||

| + | |||

| + | :<math>\mathbf{a\times b} = \begin{vmatrix} | ||

| + | \mathbf{i}&\mathbf{j}&\mathbf{k}\\ | ||

| + | a_1&a_2&a_3\\ | ||

| + | b_1&b_2&b_3\\ | ||

| + | \end{vmatrix}</math> | ||

| + | |||

| + | This determinant can be computed using Sarrus's rule or cofactor expansion. Using Sarrus's rule, it expands to | ||

| + | :<math>\begin{align} | ||

| + | \mathbf{a\times b} &=(a_2b_3\mathbf{i}+a_3b_1\mathbf{j}+a_1b_2\mathbf{k}) - (a_3b_2\mathbf{i}+a_1b_3\mathbf{j}+a_2b_1\mathbf{k})\\ | ||

| + | &=(a_2b_3 - a_3b_2)\mathbf{i} +(a_3b_1 - a_1b_3)\mathbf{j} +(a_1b_2 - a_2b_1)\mathbf{k}. | ||

| + | \end{align}</math> | ||

| + | |||

| + | Using cofactor expansion along the first row instead, it expands to | ||

| + | :<math>\begin{align} | ||

| + | \mathbf{a\times b} &= | ||

| + | \begin{vmatrix} | ||

| + | a_2&a_3\\ | ||

| + | b_2&b_3 | ||

| + | \end{vmatrix}\mathbf{i} - | ||

| + | \begin{vmatrix} | ||

| + | a_1&a_3\\ | ||

| + | b_1&b_3 | ||

| + | \end{vmatrix}\mathbf{j} + | ||

| + | \begin{vmatrix} | ||

| + | a_1&a_2\\ | ||

| + | b_1&b_2 | ||

| + | \end{vmatrix}\mathbf{k} \\ | ||

| + | |||

| + | &=(a_2b_3 - a_3b_2)\mathbf{i} -(a_1b_3 - a_3b_1)\mathbf{j} +(a_1b_2 - a_2b_1)\mathbf{k}, | ||

| + | \end{align}</math> | ||

| + | which gives the components of the resulting vector directly. | ||

| + | |||

| + | === Using Levi-Civita tensors === | ||

| + | * In any basis, the cross product <math>a \times b</math> is given by the tensorial formula <math>E_{ijk}a^ib^j</math>, where <math>E_{ijk} </math> is the covariant Levi-Civita tensor (note the position of the indices). This corresponds to the intrinsic definition given above. | ||

| + | * In an orthonormal basis '''having the same orientation as the space''', <math>a \times b</math> is given by the pseudo-tensorial formula <math> \varepsilon_{ijk}a^ib^j</math>, where <math>\varepsilon_{ijk}</math> is the Levi-Civita symbol (which is a pseudo-tensor). This is the formula used in everyday physics, but it works only for this special choice of basis. | ||

| + | * In any orthonormal basis, <math>a \times b</math> is given by the pseudo-tensorial formula <math>(-1)^B\varepsilon_{ijk}a^ib^j</math> where <math>(-1)^B = \pm 1</math> indicates whether the basis has the same orientation as the space or not. | ||

| + | |||

| + | The latter formula avoids having to change the orientation of the space when switching to an orthonormal basis of the opposite orientation. | ||
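The pseudo-tensorial formula can be evaluated literally by summing over all index pairs. The sketch below assumes an orthonormal basis, uses 0-based indices, and models the factor (−1)<sup>B</sup> as a sign argument; the function names are hypothetical.

```python
from itertools import product

def levi_civita(i: int, j: int, k: int) -> int:
    """Levi-Civita symbol for indices in {0, 1, 2}: +1 for even
    permutations of (0, 1, 2), -1 for odd ones, 0 if an index repeats.
    The product below is always +2, -2 or 0, so // 2 is exact."""
    return (i - j) * (j - k) * (k - i) // 2

def cross_levi_civita(a, b, sign=1):
    """c_k = sign * sum over i, j of eps_ijk a_i b_j.
    sign stands for (-1)^B: +1 if the orthonormal basis has the same
    orientation as the space, -1 otherwise."""
    return tuple(
        sign * sum(levi_civita(i, j, k) * a[i] * b[j]
                   for i, j in product(range(3), repeat=2))
        for k in range(3)
    )

print(cross_levi_civita((1, 2, 3), (4, 5, 6)))           # (-3, 6, -3)
print(cross_levi_civita((1, 2, 3), (4, 5, 6), sign=-1))  # (3, -6, 3)
```

The triple loop performs 27 multiplications where the explicit component formulas need only six, so this form is useful for checking index manipulations rather than for speed.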

| + | |||

| + | == Properties == | ||

| + | |||

| + | === Geometric meaning === | ||

| + | [[File:Cross product parallelogram.svg|right|thumb|Figure 1. The area of a parallelogram as the magnitude of a cross product]] | ||

| + | [[File:Parallelepiped volume.svg|right|thumb|240px|Figure 2. Three vectors defining a parallelepiped]] | ||

| + | |||

| + | The magnitude of the cross product can be interpreted as the positive area of the parallelogram having '''a''' and '''b''' as sides (see Figure 1): | ||

| + | |||

| + | :<math> \left\| \mathbf{a} \times \mathbf{b} \right\| = \left\| \mathbf{a} \right\| \left\| \mathbf{b} \right\| | \sin \theta | .</math> | ||

| + | |||

| + | Indeed, one can also compute the volume ''V'' of a parallelepiped having '''a''', '''b''' and '''c''' as edges by using a combination of a cross product and a dot product, called scalar triple product (see Figure 2): | ||

| + | |||

| + | :<math> | ||

| + | \mathbf{a}\cdot(\mathbf{b}\times \mathbf{c})= | ||

| + | \mathbf{b}\cdot(\mathbf{c}\times \mathbf{a})= | ||

| + | \mathbf{c}\cdot(\mathbf{a}\times \mathbf{b}). | ||

| + | </math> | ||

| + | |||

| + | Since the result of the scalar triple product may be negative, the volume of the parallelepiped is given by its absolute value: | ||

| + | |||

| + | :<math>V = |\mathbf{a} \cdot (\mathbf{b} \times \mathbf{c})|.</math> | ||
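As a small worked example of both geometric interpretations, the sketch below computes a parallelogram area as ‖'''a''' × '''b'''‖ and a parallelepiped volume as the absolute scalar triple product. The helper names and the sample vectors are illustrative choices.

```python
import math

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def parallelogram_area(a, b):
    # ||a x b||
    n = cross(a, b)
    return math.sqrt(dot(n, n))

def parallelepiped_volume(a, b, c):
    # |a . (b x c)|; the absolute value removes the orientation sign.
    return abs(dot(a, cross(b, c)))

a, b, c = (2, 0, 0), (1, 3, 0), (1, 1, 4)
print(parallelogram_area(a, b))                                       # 6.0
print(dot(a, cross(b, c)), dot(b, cross(c, a)), dot(c, cross(a, b)))  # 24 24 24
print(parallelepiped_volume(c, b, a))                                 # 24
```

Swapping the order to (c, b, a), an odd permutation, flips the sign of the triple product to −24, which is why the volume takes its absolute value.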

| + | |||

| + | Because the magnitude of the cross product varies with the sine of the angle between its arguments, the cross product can be thought of as a measure of ''perpendicularity'' in the same way that the dot product is a measure of ''parallelism''. Given two unit vectors, their cross product has a magnitude of 1 if the two are perpendicular and a magnitude of zero if the two are parallel. The dot product of two unit vectors behaves just oppositely: it is zero when the unit vectors are perpendicular and 1 if the unit vectors are parallel. | ||

| + | |||

| + | Unit vectors enable two convenient identities: the dot product of two unit vectors yields the cosine (which may be positive or negative) of the angle between them, and the magnitude of their cross product yields the sine of that angle (which is never negative, since the angle lies between 0° and 180°). | ||
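Combining the two identities gives a way to recover the angle itself. The sketch below uses θ = atan2(‖'''a''' × '''b'''‖, '''a''' ⋅ '''b'''), which works for vectors of any nonzero length because both arguments carry the same factor ‖'''a'''‖‖'''b'''‖. The function names are illustrative.

```python
import math

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def norm(v):
    return math.sqrt(dot(v, v))

def angle_between(a, b):
    """Angle in [0, pi]: the sine part comes from the cross product,
    the cosine part from the dot product."""
    return math.atan2(norm(cross(a, b)), dot(a, b))

u = (1.0, 0.0, 0.0)
v = (math.cos(math.pi / 3), math.sin(math.pi / 3), 0.0)  # unit vector at 60 degrees to u
print(dot(u, v))                           # approximately 0.5, the cosine
print(norm(cross(u, v)))                   # approximately 0.866, the sine
print(math.degrees(angle_between(u, v)))   # approximately 60
```

Using atan2 of both quantities, instead of acos of the dot product alone, tends to be better conditioned when the vectors are nearly parallel or antiparallel.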

| + | |||

| + | === Algebraic properties === | ||

| + | |||

| + | [[File:Cross product scalar multiplication.svg|350px|thumb|Cross product scalar multiplication. '''Left:''' Decomposition of '''b''' into components parallel and perpendicular to '''a'''. '''Right:''' Scaling of the perpendicular components by a positive real number ''r'' (if negative, '''b''' and the cross product are reversed).]] | ||

| + | |||

| + | [[File:Cross product distributivity.svg|350px|thumb|Cross product distributivity over vector addition. '''Left:''' The vectors '''b''' and '''c''' are resolved into parallel and perpendicular components to '''a'''. '''Right:''' The parallel components vanish in the cross product, only the perpendicular components shown in the plane perpendicular to '''a''' remain.]] | ||

| + | |||

| + | [[File:Cross product triple.svg|thumb|350px|The two nonequivalent triple cross products of three vectors '''a''', '''b''', '''c'''. In each case, two vectors define a plane, the other is out of the plane and can be split into parallel and perpendicular components to the cross product of the vectors defining the plane. These components can be found by vector projection and rejection. The triple product is in the plane and is rotated as shown.]] | ||

| + | |||

| + | If the cross product of two vectors is the zero vector (that is, '''a''' × '''b''' = '''0'''), then either one or both of the inputs is the zero vector ('''a''' = '''0''' or '''b''' = '''0'''), or else they are parallel or antiparallel ('''a''' ∥ '''b''') so that the sine of the angle between them is zero (''θ'' = 0° or ''θ'' = 180° and sin ''θ'' = 0). | ||

| + | |||

| + | The self cross product of a vector is the zero vector: | ||

| + | :<math>\mathbf{a} \times \mathbf{a} = \mathbf{0}.</math> | ||

| + | The cross product is anticommutative, | ||

| + | :<math>\mathbf{a} \times \mathbf{b} = -(\mathbf{b} \times \mathbf{a}),</math> | ||

| + | distributive over addition, | ||

| + | : <math>\mathbf{a} \times (\mathbf{b} + \mathbf{c}) = (\mathbf{a} \times \mathbf{b}) + (\mathbf{a} \times \mathbf{c}),</math> | ||

| + | and compatible with scalar multiplication so that | ||

| + | :<math>(r\,\mathbf{a}) \times \mathbf{b} = \mathbf{a} \times (r\,\mathbf{b}) = r\,(\mathbf{a} \times \mathbf{b}).</math> | ||

| + | |||

| + | It is not associative, but satisfies the Jacobi identity: | ||

| + | :<math>\mathbf{a} \times (\mathbf{b} \times \mathbf{c}) + \mathbf{b} \times (\mathbf{c} \times \mathbf{a}) + \mathbf{c} \times (\mathbf{a} \times \mathbf{b}) = \mathbf{0}.</math> | ||

| + | Distributivity, linearity and the Jacobi identity show that the vector space '''R'''<sup>3</sup>, together with vector addition and the cross product, forms a Lie algebra: the Lie algebra of the special orthogonal group in 3 dimensions, SO(3). | ||
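These algebraic properties can be spot-checked with integer vectors, where arithmetic is exact. The sketch below is a sanity check on one arbitrary choice of '''a''', '''b''', '''c''' and ''r'', not a proof; the helper names are illustrative.

```python
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def scale(r, a):
    return tuple(r * x for x in a)

a, b, c, r = (1, 2, 3), (-4, 0, 5), (2, -1, 7), 3

assert cross(a, a) == (0, 0, 0)                                    # self product
assert cross(a, b) == scale(-1, cross(b, a))                       # anticommutative
assert cross(a, add(b, c)) == add(cross(a, b), cross(a, c))        # distributive
assert cross(scale(r, a), b) == cross(a, scale(r, b)) == scale(r, cross(a, b))

# Jacobi identity: the three cyclic double products sum to zero.
jacobi = add(add(cross(a, cross(b, c)), cross(b, cross(c, a))), cross(c, cross(a, b)))
assert jacobi == (0, 0, 0)

# Associativity fails for the same three vectors.
print(cross(cross(a, b), c))  # (-111, -54, 24)
print(cross(a, cross(b, c)))  # (-106, 11, 28)
```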

| + | The cross product does not obey the cancellation law; that is, '''a''' × '''b''' = '''a''' × '''c''' with '''a''' ≠ '''0''' does not imply '''b''' = '''c''', but only that: | ||

| + | :<math> \begin{align} | ||

| + | \mathbf{0} &= (\mathbf{a} \times \mathbf{b}) - (\mathbf{a} \times \mathbf{c})\\ | ||

| + | &= \mathbf{a} \times (\mathbf{b} - \mathbf{c}).\\ | ||

| + | \end{align}</math> | ||

| + | |||

| + | This holds when '''b''' = '''c''', but also whenever '''a''' and '''b''' − '''c''' are parallel; that is, when they are related by a scale factor ''t'', leading to: | ||

| + | :<math>\mathbf{c} = \mathbf{b} + t\,\mathbf{a},</math> | ||

| + | for some scalar ''t''. | ||

| + | |||

| + | If, in addition to '''a''' × '''b''' = '''a''' × '''c''' and '''a''' ≠ '''0''' as above, it is the case that '''a''' ⋅ '''b''' = '''a''' ⋅ '''c''' then | ||

| + | :<math>\begin{align} | ||

| + | \mathbf{a} \times (\mathbf{b} - \mathbf{c}) &= \mathbf{0} \\ | ||

| + | \mathbf{a} \cdot (\mathbf{b} - \mathbf{c}) &= 0, | ||

| + | \end{align}</math> | ||

| + | Since a nonzero vector cannot be simultaneously parallel (for the cross product to be '''0''') and perpendicular (for the dot product to be 0) to '''a''', the difference '''b''' − '''c''' must be the zero vector, so '''b''' = '''c'''. | ||
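The failure of cancellation, and how the extra dot-product condition restores it, can be seen in a small numeric example. This sketch picks '''c''' = '''b''' + ''t'' '''a''' with an arbitrary nonzero ''t''; all names and values are illustrative.

```python
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

a, b, t = (1, 2, 2), (3, 0, -1), 5
c = tuple(bi + t * ai for ai, bi in zip(a, b))  # c = b + t a, so c != b

print(c)                          # (8, 10, 9)
print(cross(a, b), cross(a, c))   # (-2, 7, -6) (-2, 7, -6): the cross products agree
print(dot(a, b), dot(a, c))       # 1 46: the dot products differ by t * ||a||^2
```

Because '''a''' ⋅ '''c''' = '''a''' ⋅ '''b''' + ''t'' ‖'''a'''‖², equal dot products force ''t'' = 0 whenever '''a''' ≠ '''0''', which matches the argument above.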

| + | |||

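| + | From the geometrical definition, the cross product is invariant under proper rotations about the axis defined by '''a''' × '''b'''. More generally, it commutes with every proper rotation: rotating both factors rotates their cross product in the same way. In formulae: | ||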

| + | :<math>(R\mathbf{a}) \times (R\mathbf{b}) = R(\mathbf{a} \times \mathbf{b})</math>, where <math>R</math> is a rotation matrix with <math>\det(R)=1</math>. | ||

| + | |||

| + | More generally, the cross product obeys the following identity under matrix transformations: | ||

| + | :<math>(M\mathbf{a}) \times (M\mathbf{b}) = (\det M) \left(M^{-1}\right)^\mathrm{T}(\mathbf{a} \times \mathbf{b}) = \operatorname{cof} M (\mathbf{a} \times \mathbf{b}) </math> | ||

| + | where <math>M</math> is an invertible 3-by-3 matrix, <math>\left(M^{-1}\right)^\mathrm{T}</math> is the transpose of its inverse, and <math>\operatorname{cof}</math> is the cofactor matrix (the cofactor form also holds when <math>M</math> is singular). This formula reduces to the former one when <math>M</math> is a rotation matrix, since then <math>\det M = 1</math> and <math>\left(M^{-1}\right)^\mathrm{T} = M</math>. | ||
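The identity (''M'''''a''') × (''M'''''b''') = cof ''M'' ('''a''' × '''b''') can be checked exactly with an integer matrix. The sketch below builds the cofactor matrix from signed 2 × 2 minors; the matrix, the vectors and the helper names are arbitrary illustrative choices.

```python
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def matvec(M, v):
    return tuple(sum(M[i][j] * v[j] for j in range(3)) for i in range(3))

def cofactor(M):
    """Entry (i, j) is (-1)**(i + j) times the minor of M with
    row i and column j deleted."""
    C = [[0] * 3 for _ in range(3)]
    for i in range(3):
        for j in range(3):
            r = [x for x in range(3) if x != i]
            s = [y for y in range(3) if y != j]
            minor = M[r[0]][s[0]] * M[r[1]][s[1]] - M[r[0]][s[1]] * M[r[1]][s[0]]
            C[i][j] = (-1) ** (i + j) * minor
    return C

M = [[2, 1, 0], [0, 3, -1], [1, 0, 4]]
a, b = (1, 2, 3), (-1, 0, 2)
lhs = cross(matvec(M, a), matvec(M, b))
rhs = matvec(cofactor(M), cross(a, b))
print(lhs, rhs)  # (47, -54, -2) (47, -54, -2)

# For a rotation (here 90 degrees about the z-axis) the cofactor matrix is the matrix itself.
R = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]
assert cofactor(R) == R
```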

| + | |||

| + | The cross product of two vectors lies in the null space of the 2 × 3 matrix with the vectors as rows: | ||

| + | :<math>\mathbf{a} \times \mathbf{b} \in NS\left(\begin{bmatrix}\mathbf{a} \\ \mathbf{b}\end{bmatrix}\right).</math> | ||

| + | For the sum of two cross products, the following identity holds: | ||

| + | :<math>\mathbf{a} \times \mathbf{b} + \mathbf{c} \times \mathbf{d} = (\mathbf{a} - \mathbf{c}) \times (\mathbf{b} - \mathbf{d}) + \mathbf{a} \times \mathbf{d} + \mathbf{c} \times \mathbf{b}.</math> | ||

| + | |||

| + | === Differentiation === | ||

| + | |||

| + | The product rule of differential calculus applies to any bilinear operation, and therefore also to the cross product: | ||

| + | :<math>\frac{d}{dt}(\mathbf{a} \times \mathbf{b}) = \frac{d\mathbf{a}}{dt} \times \mathbf{b} + \mathbf{a} \times \frac{d\mathbf{b}}{dt} ,</math> | ||

| + | |||

| + | where '''a''' and '''b''' are vectors that depend on the real variable ''t''. | ||
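A quick numerical illustration of the product rule compares a central-difference derivative of '''a'''(''t'') × '''b'''(''t'') with the right-hand side. The curves '''a'''(''t'') = (''t'', ''t''², 1) and '''b'''(''t'') = (cos ''t'', sin ''t'', ''t'') and the step ''h'' are hypothetical choices made only for this sketch.

```python
import math

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

# Hypothetical example curves and their exact derivatives.
def a_of(t):
    return (t, t * t, 1.0)

def b_of(t):
    return (math.cos(t), math.sin(t), t)

def da_dt(t):
    return (1.0, 2.0 * t, 0.0)

def db_dt(t):
    return (-math.sin(t), math.cos(t), 1.0)

t, h = 0.7, 1e-5

# Central-difference estimate of d/dt (a x b) at t.
forward = cross(a_of(t + h), b_of(t + h))
backward = cross(a_of(t - h), b_of(t - h))
numeric = tuple((f - g) / (2 * h) for f, g in zip(forward, backward))

# Product rule: (da/dt) x b + a x (db/dt); the order of factors matters.
term1 = cross(da_dt(t), b_of(t))
term2 = cross(a_of(t), db_dt(t))
product_rule = tuple(x + y for x, y in zip(term1, term2))

# The difference is only finite-difference and rounding error, so it is small.
print(max(abs(x - y) for x, y in zip(numeric, product_rule)))
```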

| + | |||

| + | === Triple product expansion === | ||

| + | |||

| + | The cross product is used in both forms of the triple product. The scalar triple product of three vectors is defined as | ||

| + | |||

| + | :<math>\mathbf{a} \cdot (\mathbf{b} \times \mathbf{c}), </math> | ||

| + | |||

| + | It is the signed volume of the parallelepiped with edges '''a''', '''b''' and '''c''' and as such the vectors can be used in any order that's an even permutation of the above ordering. The following therefore are equal: | ||

| + | |||

| + | :<math>\mathbf{a} \cdot (\mathbf{b} \times \mathbf{c}) = \mathbf{b} \cdot (\mathbf{c} \times \mathbf{a}) = \mathbf{c} \cdot (\mathbf{a} \times \mathbf{b}), </math> | ||

| + | |||

| + | The vector triple product is the cross product of a vector with the result of another cross product, and is related to the dot product by the following formula | ||

| + | |||

| + | :<math>\mathbf{a} \times (\mathbf{b} \times \mathbf{c}) = \mathbf{b}(\mathbf{a} \cdot \mathbf{c}) - \mathbf{c}(\mathbf{a} \cdot \mathbf{b}).</math> | ||

| + | |||

| + | The mnemonic "BAC minus CAB" is used to remember the order of the vectors on the right-hand side. This formula is used in physics to simplify vector calculations. A special case, regarding gradients and useful in vector calculus, is | ||

| + | :<math>\begin{align} | ||

| + | \nabla \times (\nabla \times \mathbf{f}) &= \nabla (\nabla \cdot \mathbf{f} ) - (\nabla \cdot \nabla) \mathbf{f} \\ | ||

| + | &= \nabla (\nabla \cdot \mathbf{f} ) - \nabla^2 \mathbf{f},\\ | ||

| + | \end{align}</math> | ||

| + | |||

| + | where ∇<sup>2</sup> is the vector Laplacian operator. | ||
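The "BAC minus CAB" expansion can be confirmed exactly on integer vectors. This sketch compares '''a''' × ('''b''' × '''c''') with '''b'''('''a''' ⋅ '''c''') − '''c'''('''a''' ⋅ '''b''') for one arbitrary choice of vectors; the helper names are illustrative.

```python
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def scale(r, v):
    return tuple(r * x for x in v)

def sub(u, v):
    return tuple(x - y for x, y in zip(u, v))

a, b, c = (1, 2, 3), (-4, 0, 5), (2, -1, 7)
lhs = cross(a, cross(b, c))
rhs = sub(scale(dot(a, c), b), scale(dot(a, b), c))  # b(a.c) - c(a.b)
print(lhs, rhs)  # (-106, 11, 28) (-106, 11, 28)
```

The expansion replaces two cross products with two dot products and a vector subtraction, which is often simpler to manipulate symbolically.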

| + | |||

| + | Other identities relate the cross product to the scalar triple product: | ||

| + | :<math>\begin{align} | ||

| + | (\mathbf{a}\times \mathbf{b})\times (\mathbf{a}\times \mathbf{c}) &= (\mathbf{a}\cdot(\mathbf{b}\times \mathbf{c})) \mathbf{a} \\ | ||

| + | (\mathbf{a}\times \mathbf{b})\cdot(\mathbf{c}\times \mathbf{d}) &= \mathbf{b}^\mathrm{T} \left( \left( \mathbf{c}^\mathrm{T} \mathbf{a}\right)I - \mathbf{c} \mathbf{a}^\mathrm{T} \right) \mathbf{d}\\ &= (\mathbf{a}\cdot \mathbf{c})(\mathbf{b}\cdot \mathbf{d})-(\mathbf{a}\cdot \mathbf{d}) (\mathbf{b}\cdot \mathbf{c}) | ||

| + | \end{align}</math> | ||

| + | |||

| + | where ''I'' is the identity matrix. | ||
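The two identities above can likewise be checked with exact integer arithmetic. This sketch tests (a × b) × (a × c) = (a ⋅ (b × c)) a and the dot-product form of (a × b) ⋅ (c × d) for one arbitrary set of vectors; names and values are illustrative.

```python
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

a, b, c, d = (1, 2, 3), (-4, 0, 5), (2, -1, 7), (0, 3, -2)

# (a x b) x (a x c) is the scalar triple product times a.
triple = dot(a, cross(b, c))
assert cross(cross(a, b), cross(a, c)) == tuple(triple * x for x in a)

# (a x b) . (c x d) = (a.c)(b.d) - (a.d)(b.c)
quad = dot(cross(a, b), cross(c, d))
assert quad == dot(a, c) * dot(b, d) - dot(a, d) * dot(b, c)

print(triple, quad)  # 93 -210
```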

| + | |||

| + | ===Alternative formulation=== | ||

| + | The cross product and the dot product are related by: | ||

| + | :<math> \left\| \mathbf{a} \times \mathbf{b} \right\| ^2 = \left\| \mathbf{a}\right\|^2 \left\|\mathbf{b}\right\|^2 - (\mathbf{a} \cdot \mathbf{b})^2 .</math> | ||

| + | |||

| + | The right-hand side is the Gram determinant of '''a''' and '''b''', the square of the area of the parallelogram defined by the vectors. This condition determines the magnitude of the cross product. Namely, since the dot product is defined, in terms of the angle ''θ'' between the two vectors, as: | ||

| + | |||

| + | :<math> \mathbf{a \cdot b} = \left\| \mathbf a \right\| \left\| \mathbf b \right\| \cos \theta , </math> | ||

| + | |||

| + | the above given relationship can be rewritten as follows: | ||

| + | |||

| + | :<math> \left\| \mathbf{a \times b} \right\|^2 = \left\| \mathbf{a} \right\| ^2 \left\| \mathbf{b}\right \| ^2 \left(1-\cos^2 \theta \right) .</math> | ||

| + | |||

| + | Invoking the Pythagorean trigonometric identity one obtains: | ||

| + | :<math> \left\| \mathbf{a} \times \mathbf{b} \right\| = \left\| \mathbf{a} \right\| \left\| \mathbf{b} \right\| \left| \sin \theta \right| ,</math> | ||

| + | |||

| + | which is the magnitude of the cross product expressed in terms of ''θ'', equal to the area of the parallelogram defined by '''a''' and '''b'''. | ||

| + | |||

| + | The combination of this requirement and the property that the cross product be orthogonal to its constituents '''a''' and '''b''' provides an alternative definition of the cross product. | ||

| + | |||

| + | ===Lagrange's identity=== | ||

| + | The relation: | ||

| + | :<math> | ||

| + | \left\| \mathbf{a} \times \mathbf{b} \right\|^2 \equiv | ||

| + | \det \begin{bmatrix} | ||

| + | \mathbf{a} \cdot \mathbf{a} & \mathbf{a} \cdot \mathbf{b} \\ | ||

| + | \mathbf{a} \cdot \mathbf{b} & \mathbf{b} \cdot \mathbf{b}\\ | ||

| + | \end{bmatrix} \equiv | ||

| + | \left\| \mathbf{a} \right\| ^2 \left\| \mathbf{b} \right\| ^2 - (\mathbf{a} \cdot \mathbf{b})^2 . | ||

| + | </math> | ||

| + | |||

| + | can be compared with another relation involving the right-hand side, namely Lagrange's identity expressed as: | ||

| + | |||

| + | :<math> | ||

| + | \sum_{1 \le i < j \le n} \left( a_ib_j - a_jb_i \right)^2 \equiv | ||

| + | \left\| \mathbf a \right\|^2 \left\| \mathbf b \right\|^2 - ( \mathbf{a \cdot b } )^2\ , | ||

| + | </math> | ||

| + | |||

| + | where '''a''' and '''b''' may be ''n''-dimensional vectors. This also shows that the Riemannian volume form for surfaces is exactly the surface element from vector calculus. In the case where ''n'' = 3, combining these two equations results in the expression for the magnitude of the cross product in terms of its components: | ||

| + | |||

| + | :<math>\begin{align} | ||

| + | &\left\|\mathbf{a} \times \mathbf{b}\right\|^2 \equiv \sum_{1 \le i < j \le 3}\left(a_ib_j - a_jb_i \right)^2 \\ | ||

| + | \equiv{} &\left(a_1b_2 - b_1a_2\right)^2 + \left(a_2b_3 - a_3b_2\right)^2 + \left(a_3b_1 - a_1b_3\right)^2 \ . | ||

| + | \end{align}</math> | ||
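Lagrange's identity holds for ''n''-dimensional vectors, while the link to the cross product appears only for ''n'' = 3. The sketch below checks both cases with exact integer arithmetic. The function names lagrange_sum and gram are hypothetical labels for the two sides.

```python
from itertools import combinations

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def lagrange_sum(a, b):
    """Sum over i < j of (a_i b_j - a_j b_i)^2, for any dimension n."""
    return sum((a[i] * b[j] - a[j] * b[i]) ** 2
               for i, j in combinations(range(len(a)), 2))

def gram(a, b):
    """||a||^2 ||b||^2 - (a . b)^2."""
    return dot(a, a) * dot(b, b) - dot(a, b) ** 2

# n = 5: the identity holds, but there is no binary cross product to compare with.
a5, b5 = (1, -2, 0, 3, 4), (2, 1, -1, 0, 5)
print(lagrange_sum(a5, b5), gram(a5, b5))  # 530 530

# n = 3: both sides also equal ||a x b||^2.
a3, b3 = (1, 2, 3), (4, 5, 6)
n3 = cross(a3, b3)
print(dot(n3, n3), lagrange_sum(a3, b3), gram(a3, b3))  # 54 54 54
```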

| + | |||

| + | The same result is found directly using the components of the cross product found from: | ||

| + | :<math>\mathbf{a} \times \mathbf{b} \equiv \det \begin{bmatrix} | ||

| + | \hat\mathbf{i} & \hat\mathbf{j} & \hat\mathbf{k} \\ | ||

| + | a_1 & a_2 & a_3 \\ | ||

| + | b_1 & b_2 & b_3 \\ | ||

| + | \end{bmatrix}.</math> | ||

| + | |||

| + | In '''R'''<sup>3</sup>, Lagrange's identity is a special case of the multiplicativity |'''vw'''| = |'''v'''||'''w'''| of the norm in the quaternion algebra. | ||

| + | |||

| + | It is a special case of another formula, also sometimes called Lagrange's identity, which is the three-dimensional case of the Binet–Cauchy identity: | ||

| + | |||

| + | :<math> | ||

| + | (\mathbf{a} \times \mathbf{b}) \cdot (\mathbf{c} \times \mathbf{d}) \equiv | ||

| + | (\mathbf{a} \cdot \mathbf{c})(\mathbf{b} \cdot \mathbf{d}) - (\mathbf{a} \cdot \mathbf{d})(\mathbf{b} \cdot \mathbf{c}). | ||

| + | </math> | ||

| + | |||

| + | If '''a''' = '''c''' and '''b''' = '''d''' this simplifies to the formula above. | ||

| + | |||

| + | ===Infinitesimal generators of rotations=== | ||

| + | The cross product conveniently describes the infinitesimal generators of rotations in '''R'''<sup>3</sup>. Specifically, if '''n''' is a unit vector in '''R'''<sup>3</sup> and ''R''(''φ'', '''n''') denotes a rotation about the axis through the origin specified by '''n''', with angle φ (measured in radians, counterclockwise when viewed from the tip of '''n'''), then | ||

| + | :<math>\left.{d\over d\phi} \right|_{\phi=0} R(\phi,\boldsymbol{n}) \boldsymbol{x} = \boldsymbol{n} \times \boldsymbol{x}</math> | ||

| + | for every vector '''x''' in '''R'''<sup>3</sup>. The cross product with '''n''' therefore describes the infinitesimal generator of the rotations about '''n'''. These infinitesimal generators form the Lie algebra '''so'''(3) of the rotation group SO(3), and we obtain the result that the Lie algebra '''R'''<sup>3</sup> with cross product is isomorphic to the Lie algebra '''so'''(3). | ||
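To see the generator numerically, one can differentiate a rotation at ''φ'' = 0 by central differences and compare with '''n''' × '''x'''. The sketch below implements ''R''(''φ'', '''n''')'''x''' with Rodrigues' rotation formula, '''x''' cos ''φ'' + ('''n''' × '''x''') sin ''φ'' + '''n'''('''n''' ⋅ '''x''')(1 − cos ''φ''), which is an assumption brought in for illustration and not part of the text above. The axis, test vector and step size are arbitrary.

```python
import math

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def rotate(phi, n, x):
    """Rodrigues' rotation formula: rotate x by phi radians about the unit
    axis n, counterclockwise when viewed from the tip of n."""
    c, s = math.cos(phi), math.sin(phi)
    nx, nd = cross(n, x), dot(n, x)
    return tuple(x[i] * c + nx[i] * s + n[i] * nd * (1 - c) for i in range(3))

n = (0.0, 0.0, 1.0)   # unit axis
x = (1.0, 2.0, 3.0)
h = 1e-6

# Central difference of R(phi, n) x at phi = 0.
derivative = tuple((p - m) / (2 * h) for p, m in zip(rotate(h, n, x), rotate(-h, n, x)))
print(derivative)    # approximately (-2.0, 1.0, 0.0)
print(cross(n, x))   # (-2.0, 1.0, 0.0)
```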

| + | |||

| + | == Alternative ways to compute == | ||

| + | |||

| + | === Conversion to matrix multiplication === | ||

| + | The vector cross product can also be expressed as the product of a skew-symmetric matrix and a vector: | ||

| + | :<math>\begin{align} | ||

| + | \mathbf{a} \times \mathbf{b} = [\mathbf{a}]_{\times} \mathbf{b} | ||

| + | &= \begin{bmatrix}\,0&\!-a_3&\,\,a_2\\ \,\,a_3&0&\!-a_1\\-a_2&\,\,a_1&\,0\end{bmatrix}\begin{bmatrix}b_1\\b_2\\b_3\end{bmatrix} \\ | ||

| + | \mathbf{a} \times \mathbf{b} = {[\mathbf{b}]_\times}^\mathrm{\!\!T} \mathbf{a} | ||

| + | &= \begin{bmatrix}\,0&\,\,b_3&\!-b_2\\ -b_3&0&\,\,b_1\\\,\,b_2&\!-b_1&\,0\end{bmatrix}\begin{bmatrix}a_1\\a_2\\a_3\end{bmatrix}, | ||

| + | \end{align}</math> | ||

| + | |||

| + | where superscript <sup>T</sup> refers to the transpose operation, and ['''a''']<sub>×</sub> is defined by: | ||

| + | |||

| + | :<math>[\mathbf{a}]_{\times} \stackrel{\rm def}{=} \begin{bmatrix}\,\,0&\!-a_3&\,\,\,a_2\\\,\,\,a_3&0&\!-a_1\\\!-a_2&\,\,a_1&\,\,0\end{bmatrix}.</math> | ||

| + | |||

| + | The columns ['''a''']<sub>×,i</sub> of the skew-symmetric matrix for a vector '''a''' can also be obtained by calculating the cross product with unit vectors. That is, | ||

| + | |||

| + | :<math>[\mathbf{a}]_{\times, i} = \mathbf{a} \times \mathbf{\hat{e}_i}, \; i\in \{1,2,3\} </math> | ||

| + | |||

| + | or | ||

| + | |||

| + | :<math>[\mathbf{a}]_{\times} = \sum_{i=1}^3\left(\mathbf{a} \times \mathbf{\hat{e}_i}\right)\otimes\mathbf{\hat{e}_i},</math> | ||

| + | |||

| + | where <math>\otimes</math> is the outer product operator. | ||
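The matrix form of the cross product can be sketched in plain Python. This is an illustration under our own naming (`skew`, `matvec`, `transpose` are hypothetical helpers) and is not taken from the source. It checks ['''a''']<sub>×</sub>'''b''' = '''a''' × '''b''', the transposed form with ['''b''']<sub>×</sub>, and the column property '''a''' × '''ê'''<sub>i</sub>.

```python
def cross(a, b):
    """Component formula for a x b (lists of length 3)."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def skew(a):
    """[a]_x: the skew-symmetric matrix with skew(a) b == a x b."""
    a1, a2, a3 = a
    return [[0, -a3, a2],
            [a3, 0, -a1],
            [-a2, a1, 0]]


def matvec(m, v):
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def transpose(m):
    return [list(row) for row in zip(*m)]


a, b = [1, 2, 3], [4, 5, 6]
print(matvec(skew(a), b))             # [-3, 6, -3]
print(matvec(transpose(skew(b)), a))  # [-3, 6, -3]
print(cross(a, b))                    # [-3, 6, -3]

# Column i of [a]_x equals a x e_i.
basis = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
print(transpose(skew(a)) == [cross(a, e) for e in basis])  # True
```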

| + | |||

| + | Also, if '''a''' is itself expressed as a cross product: | ||

| + | |||

| + | :<math>\mathbf{a} = \mathbf{c} \times \mathbf{d}</math> | ||

| + | |||

| + | then | ||

| + | |||

| + | :<math>[\mathbf{a}]_{\times} = \mathbf{d}\mathbf{c}^\mathrm{T} - \mathbf{c}\mathbf{d}^\mathrm{T} .</math> | ||

| + | |||

| + | <blockquote style="background: white; border: 1px solid black; padding: 1em;"> | ||

| + | '''Proof by substitution''' | ||

| + | |||

| + | Evaluation of the cross product gives | ||

| + | :<math> \mathbf{a} = \mathbf{c} \times \mathbf{d} = \begin{pmatrix} | ||

| + | c_2 d_3 - c_3 d_2 \\ | ||

| + | c_3 d_1 - c_1 d_3 \\ | ||

| + | c_1 d_2 - c_2 d_1 \end{pmatrix} | ||

| + | </math> | ||

| + | Hence, the left hand side equals | ||

| + | :<math> [\mathbf{a}]_{\times} = \begin{bmatrix} | ||

| + | 0 & c_2 d_1 - c_1 d_2 & c_3 d_1 - c_1 d_3 \\ | ||

| + | c_1 d_2 - c_2 d_1 & 0 & c_3 d_2 - c_2 d_3 \\ | ||

| + | c_1 d_3 - c_3 d_1 & c_2 d_3 - c_3 d_2 & 0 \end{bmatrix} | ||

| + | </math> | ||

| + | Now, for the right hand side, | ||

| + | :<math> \mathbf{c} \mathbf{d}^{\mathrm T} = \begin{bmatrix} | ||

| + | c_1 d_1 & c_1 d_2 & c_1 d_3 \\ | ||

| + | c_2 d_1 & c_2 d_2 & c_2 d_3 \\ | ||

| + | c_3 d_1 & c_3 d_2 & c_3 d_3 \end{bmatrix} | ||

| + | </math> | ||

| + | And its transpose is | ||

| + | :<math> \mathbf{d} \mathbf{c}^{\mathrm T} = \begin{bmatrix} | ||

| + | c_1 d_1 & c_2 d_1 & c_3 d_1 \\ | ||

| + | c_1 d_2 & c_2 d_2 & c_3 d_2 \\ | ||

| + | c_1 d_3 & c_2 d_3 & c_3 d_3 \end{bmatrix} | ||

| + | </math> | ||

| + | Evaluation of the right hand side gives | ||

| + | :<math> \mathbf{d} \mathbf{c}^{\mathrm T} - | ||

| + | \mathbf{c} \mathbf{d}^{\mathrm T} = \begin{bmatrix} | ||

| + | 0 & c_2 d_1 - c_1 d_2 & c_3 d_1 - c_1 d_3 \\ | ||

| + | c_1 d_2 - c_2 d_1 & 0 & c_3 d_2 - c_2 d_3 \\ | ||

| + | c_1 d_3 - c_3 d_1 & c_2 d_3 - c_3 d_2 & 0 \end{bmatrix} | ||

| + | </math> | ||

| + | Comparison shows that the left hand side equals the right hand side. | ||

| + | </blockquote> | ||
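The substitution proof can also be spot-checked by machine. The following minimal sketch is our own and not from the source. It compares [c × d]<sub>×</sub> with d c<sup>T</sup> − c d<sup>T</sup> entry by entry. Integer inputs keep the comparison exact, and the helper names are hypothetical.

```python
def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def skew(a):
    """[a]_x as a nested list."""
    return [[0, -a[2], a[1]],
            [a[2], 0, -a[0]],
            [-a[1], a[0], 0]]


def outer(u, v):
    """Outer product u v^T as a 3x3 nested list."""
    return [[u[i] * v[j] for j in range(3)] for i in range(3)]


def subtract(m, n):
    return [[m[i][j] - n[i][j] for j in range(3)] for i in range(3)]


def check_identity(c, d):
    """True if [c x d]_x == d c^T - c d^T (exact for integer inputs)."""
    return skew(cross(c, d)) == subtract(outer(d, c), outer(c, d))


print(check_identity([1, -2, 3], [4, 0, -1]))  # True
```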

| + | |||

| + | This result can be generalized to higher dimensions using geometric algebra. In particular, in any dimension bivectors can be identified with skew-symmetric matrices, so the product between a skew-symmetric matrix and a vector is equivalent to the grade-1 part of the product of a bivector and a vector. In three dimensions bivectors are dual to vectors, so the product is equivalent to the cross product, with the bivector in place of its vector dual. In higher dimensions the product can still be calculated, but bivectors have more degrees of freedom and are not equivalent to vectors. | ||

| + | |||

| + | This notation is also often much easier to work with, for example, in epipolar geometry. | ||

| + | |||

| + | It follows immediately from the general properties of the cross product that | ||

| + | |||

| + | :<math>[\mathbf{a}]_{\times} \, \mathbf{a} = \mathbf{0}</math> and <math>\mathbf{a}^\mathrm T \, [\mathbf{a}]_{\times} = \mathbf{0}</math> | ||

| + | |||

| + | and from the fact that ['''a''']<sub>×</sub> is skew-symmetric it follows that | ||

| + | |||

| + | :<math>\mathbf{b}^\mathrm T \, [\mathbf{a}]_{\times} \, \mathbf{b} = 0. </math> | ||

| + | |||

| + | The above-mentioned triple product expansion (bac–cab rule) can be easily proven using this notation. | ||

| + | |||

| + | As mentioned above, the Lie algebra '''R'''<sup>3</sup> with cross product is isomorphic to the Lie algebra '''so(3)''', whose elements can be identified with the 3×3 skew-symmetric matrices. The map '''a''' → ['''a''']<sub>×</sub> provides an isomorphism between '''R'''<sup>3</sup> and '''so(3)'''. Under this map, the cross product of 3-vectors corresponds to the commutator of 3×3 skew-symmetric matrices. | ||
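The correspondence between the cross product and the matrix commutator can be checked directly. This is a small sketch and not part of the source. It verifies [a]<sub>×</sub>[b]<sub>×</sub> − [b]<sub>×</sub>[a]<sub>×</sub> = [a × b]<sub>×</sub> for integer vectors. The helper names are hypothetical.

```python
def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def skew(a):
    return [[0, -a[2], a[1]],
            [a[2], 0, -a[0]],
            [-a[1], a[0], 0]]


def matmul(m, n):
    return [[sum(m[i][k] * n[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def commutator(p, q):
    """Matrix commutator [P, Q] = PQ - QP."""
    pq, qp = matmul(p, q), matmul(q, p)
    return [[pq[i][j] - qp[i][j] for j in range(3)] for i in range(3)]


a, b = [1, 2, 3], [4, 5, 6]
print(commutator(skew(a), skew(b)) == skew(cross(a, b)))  # True
```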

| + | |||

| + | <blockquote style="background: white; border: 1px solid black; padding: 1em;"> | ||

| + | '''Matrix conversion for cross product with canonical base vectors''' | ||

| + | |||

| + | Denoting with <math>\mathbf{e}_i \in \mathbf{R}^{3 \times 1}</math> the <math>i</math>-th canonical base vector, the cross product of a generic vector <math>\mathbf{v} \in \mathbf{R}^{3 \times 1}</math> with <math>\mathbf{e}_i</math> is given by: <math>\mathbf{v} \times \mathbf{e}_i = \mathbf{C}_i \mathbf{v}</math>, where | ||

| + | |||

| + | <math> \mathbf{C}_1 = \begin{bmatrix} 0 & 0 & 0 \\ 0 & 0 & 1 \\ 0 & -1 & 0 \end{bmatrix}, \quad | ||

| + | \mathbf{C}_2 = \begin{bmatrix} 0 & 0 & -1 \\ 0 & 0 & 0 \\ 1 & 0 & 0 \end{bmatrix}, \quad | ||

| + | \mathbf{C}_3 = \begin{bmatrix} 0 & 1 & 0 \\ -1 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix} | ||

| + | </math> | ||

| + | |||

| + | These matrices share the following properties: | ||

| + | * <math>\mathbf{C}_i^\textrm{T} = -\mathbf{C}_i</math> (skew-symmetric); | ||

| + | * Both trace and determinant are zero; | ||

| + | * <math>\text{rank}(\mathbf{C}_i) = 2</math>; | ||

| + | * <math>\mathbf{C}_i \mathbf{C}_i^\textrm{T} = \mathbf{P}^{ ^\perp}_{\mathbf{e}_i}</math> (see below). | ||

| + | |||

| + | The orthogonal projection matrix onto a vector <math>\mathbf{v} \neq \mathbf{0}</math> is given by <math>\mathbf{P}_{\mathbf{v}} = \mathbf{v}\left(\mathbf{v}^\textrm{T} \mathbf{v}\right)^{-1} \mathbf{v}^\textrm{T}</math>. The projection matrix onto the orthogonal complement is given by <math>\mathbf{P}^{ ^\perp}_{\mathbf{v}} = \mathbf{I} - \mathbf{P}_{\mathbf{v}}</math>, where <math>\mathbf{I}</math> is the identity matrix. For the special case of <math>\mathbf{v} = \mathbf{e}_i</math>, it can be verified that | ||

| + | |||

| + | <math> \mathbf{P}^{^\perp}_{\mathbf{e}_1} = \begin{bmatrix} 0 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}, \quad | ||

| + | \mathbf{P}^{ ^\perp}_{\mathbf{e}_2} = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 1 \end{bmatrix}, \quad | ||

| + | \mathbf{P}^{ ^\perp}_{\mathbf{e}_3} = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 0 \end{bmatrix} | ||

| + | </math> | ||

| + | |||

| + | For other properties of orthogonal projection matrices, see projection (linear algebra). | ||

| + | </blockquote> | ||

| + | |||

| + | ===Index notation for tensors=== | ||

| + | The cross product can alternatively be defined in terms of the Levi-Civita tensor ''E<sub>ijk</sub>'' and a dot product ''η<sup>mi</sup>'', which are useful in converting vector notation for tensor applications: | ||

| + | |||

| + | :<math>\mathbf{c} = \mathbf{a \times b} \Leftrightarrow\ c^m = \sum_{i=1}^3 \sum_{j=1}^3 \sum_{k=1}^3 \eta^{mi} E_{ijk} a^j b^k</math> | ||

| + | |||

| + | where the indices <math>i,j,k</math> correspond to vector components. This characterization of the cross product is often expressed more compactly using the Einstein summation convention as | ||

| + | :<math>\mathbf{c} = \mathbf{a \times b} \Leftrightarrow\ c^m = \eta^{mi} E_{ijk} a^j b^k</math> | ||

| + | |||

| + | in which repeated indices are summed over the values 1 to 3. | ||

| + | |||

| + | In a positively oriented orthonormal basis ''η<sup>mi</sup>'' = δ<sup>''mi''</sup> (the Kronecker delta) and <math> E_{ijk} = \varepsilon_{ijk}</math> (the Levi-Civita symbol). In that case, this representation is another form of the skew-symmetric representation of the cross product: | ||

| + | |||

| + | :<math>[\varepsilon_{ijk} a^j] = [\mathbf{a}]_\times.</math> | ||
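In a positively oriented orthonormal basis the index formula reduces to explicit sums over the Levi-Civita symbol. The following sketch is an illustration and not taken from the source. It uses 0-based indices and the closed form ε<sub>ijk</sub> = (i − j)(j − k)(k − i)/2, which is valid for indices in {0, 1, 2}. The function names are hypothetical.

```python
def levi_civita(i, j, k):
    """Levi-Civita symbol for 0-based indices in {0, 1, 2}: +1, -1 or 0."""
    return (i - j) * (j - k) * (k - i) // 2


def cross_levi_civita(a, b):
    """c^m = sum over j, k of eps_{mjk} a^j b^k (eta^{mi} = delta^{mi} assumed)."""
    return [sum(levi_civita(m, j, k) * a[j] * b[k]
                for j in range(3) for k in range(3))
            for m in range(3)]


def skew_from_levi_civita(a):
    """Matrix whose (i, k) entry is sum over j of eps_{ijk} a^j."""
    return [[sum(levi_civita(i, j, k) * a[j] for j in range(3))
             for k in range(3)]
            for i in range(3)]


a, b = [1, 2, 3], [4, 5, 6]
print(cross_levi_civita(a, b))     # [-3, 6, -3]
print(skew_from_levi_civita(a))    # [[0, -3, 2], [3, 0, -1], [-2, 1, 0]]
```

The second result is the same matrix as ['''a''']<sub>×</sub>, which illustrates the equality <math>[\varepsilon_{ijk} a^j] = [\mathbf{a}]_\times</math>.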

| + | |||

| + | In classical mechanics, representing the cross product with the Levi-Civita symbol can make mechanical symmetries obvious when a physical system is isotropic. (For example, consider a particle in a Hooke's law potential in three-space, free to oscillate in three dimensions. None of these dimensions is "special" in any sense, so the symmetries lie in the angular momentum, which is represented by a cross product and made clear by the Levi-Civita representation above.) | ||

| + | |||

| + | === Mnemonic === | ||

| + | [[File:cross_product_mnemonic.svg|thumb|Mnemonic to calculate a cross product in vector form]] | ||

| + | |||

| + | The word "xyzzy" can be used to remember the definition of the cross product. | ||

| + | |||

| + | If | ||

| + | |||

| + | :<math>\mathbf{a} = \mathbf{b} \times \mathbf{c}</math> | ||

| + | |||

| + | where: | ||

| + | |||

| + | :<math> | ||

| + | \mathbf{a} = \begin{bmatrix}a_x\\a_y\\a_z\end{bmatrix},\ | ||

| + | \mathbf{b} = \begin{bmatrix}b_x\\b_y\\b_z\end{bmatrix},\ | ||

| + | \mathbf{c} = \begin{bmatrix}c_x\\c_y\\c_z\end{bmatrix} | ||

| + | </math> | ||

| + | |||

| + | then: | ||

| + | |||

| + | :<math>a_x = b_y c_z - b_z c_y </math> | ||

| + | :<math>a_y = b_z c_x - b_x c_z </math> | ||

| + | :<math>a_z = b_x c_y - b_y c_x. </math> | ||

| + | |||

| + | The second and third equations can be obtained from the first by simply vertically rotating the subscripts, ''x'' → ''y'' → ''z'' → ''x''. The problem, of course, is how to remember the first equation, and two options are available for this purpose: either to remember the relevant two diagonals of Sarrus's scheme (those containing '''''i'''''), or to remember the xyzzy sequence. | ||

| + | |||

| + | Since the first diagonal in Sarrus's scheme is just the main diagonal of the above-mentioned 3×3 matrix, the first three letters of the word xyzzy can be very easily remembered. | ||

| + | |||

| + | === Cross visualization === | ||

| + | Similarly to the mnemonic device above, a "cross" or X can be visualized between the two vectors in the equation. This may be helpful for remembering the correct cross product formula. | ||

| + | |||

| + | If | ||

| + | |||

| + | :<math>\mathbf{a} = \mathbf{b} \times \mathbf{c}</math> | ||

| + | |||

| + | then: | ||

| + | |||

| + | :<math> | ||

| + | \mathbf{a} = | ||

| + | \begin{bmatrix}b_x\\b_y\\b_z\end{bmatrix} \times | ||

| + | \begin{bmatrix}c_x\\c_y\\c_z\end{bmatrix}. | ||

| + | </math> | ||

| + | |||

| + | If we want to obtain the formula for <math>a_x</math> we simply drop the <math>b_x</math> and <math>c_x</math> from the formula, and take the next two components down: | ||

| + | |||

| + | :<math> | ||

| + | a_x = | ||

| + | \begin{bmatrix}b_y\\b_z\end{bmatrix} \times | ||

| + | \begin{bmatrix}c_y\\c_z\end{bmatrix}. | ||

| + | </math> | ||

| + | |||

| + | When doing this for <math>a_y</math>, the next two elements down should "wrap around" the matrix so that the x component comes after the z component. That is, for <math>a_y</math> the next two components are z and x (in that order), while for <math>a_z</math> they are x and y. | ||

| + | |||

| + | :<math> | ||

| + | a_y = | ||

| + | \begin{bmatrix}b_z\\b_x\end{bmatrix} \times | ||

| + | \begin{bmatrix}c_z\\c_x\end{bmatrix},\ | ||

| + | a_z = | ||

| + | \begin{bmatrix}b_x\\b_y\end{bmatrix} \times | ||

| + | \begin{bmatrix}c_x\\c_y\end{bmatrix} | ||

| + | </math> | ||

| + | |||

| + | For <math>a_x</math> then, if we visualize the cross operator as pointing from an element on the left to an element on the right, we can take the first element on the left and simply multiply by the element that the cross points to in the right hand matrix. We then subtract the next element down on the left, multiplied by the element that the cross points to here as well. This results in our <math>a_x</math> formula: | ||

| + | |||

| + | :<math>a_x = b_y c_z - b_z c_y.</math> | ||

| + | |||

| + | We can do this in the same way for <math>a_y</math> and <math>a_z</math> to construct their associated formulas. | ||
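Both mnemonics encode the same cyclic rule. You write the formula for <math>a_x</math> and then rotate the subscripts x → y → z → x. The following minimal sketch is our own illustration with a hypothetical function name. It computes each component from "the next two axes" with wrap-around.

```python
def cross_xyzzy(b, c):
    """a = b x c via the cyclic pattern: a_x = b_y c_z - b_z c_y, then x -> y -> z -> x."""
    a = []
    for i in range(3):                   # i = 0, 1, 2 stands for x, y, z
        j, k = (i + 1) % 3, (i + 2) % 3  # the next two axes, wrapping z -> x
        a.append(b[j] * c[k] - b[k] * c[j])
    return a


print(cross_xyzzy([1, 2, 3], [4, 5, 6]))  # [-3, 6, -3]
print(cross_xyzzy([1, 0, 0], [0, 1, 0]))  # [0, 0, 1]
```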

| + | |||

| + | == Applications == | ||

| + | The cross product has applications in various contexts. For example, it is used in computational geometry, physics and engineering. | ||

| + | A non-exhaustive list of examples follows. | ||

| + | |||

| + | === Computational geometry === | ||

| + | The cross product appears in the calculation of the distance of two skew lines (lines not in the same plane) from each other in three-dimensional space. | ||

| + | |||

| + | The cross product can be used to calculate the normal for a triangle or polygon, an operation frequently performed in computer graphics. For example, the winding of a polygon (clockwise or anticlockwise) about a point within the polygon can be calculated by triangulating the polygon (like spoking a wheel) and summing the angles (between the spokes) using the cross product to keep track of the sign of each angle. | ||
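Computing a triangle normal is the basic building block here. The following sketch is an assumption-labelled illustration and not code from the source. It returns the unit normal of a triangle and its area, which is half the length of the cross product. The function name is hypothetical, and the normal direction follows the right-hand rule for the vertex order given.

```python
import math


def subtract(p, q):
    return tuple(pi - qi for pi, qi in zip(p, q))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def triangle_normal_and_area(p1, p2, p3):
    """Unit normal (right-hand rule for the order p1, p2, p3) and area of a triangle."""
    n = cross(subtract(p2, p1), subtract(p3, p1))
    length = math.sqrt(sum(x * x for x in n))   # area of the spanned parallelogram
    if length == 0:
        raise ValueError("degenerate triangle: the points are collinear")
    return tuple(x / length for x in n), length / 2


print(triangle_normal_and_area((0, 0, 0), (1, 0, 0), (0, 1, 0)))
# ((0.0, 0.0, 1.0), 0.5)
```

Reversing the vertex order flips the normal. This sign is what the winding computation relies on.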

| + | |||

| + | In computational geometry of the plane, the cross product is used to determine the sign of the acute angle defined by three points <math> p_1=(x_1,y_1), p_2=(x_2,y_2)</math> and <math> p_3=(x_3,y_3)</math>. It corresponds to the direction (upward or downward) of the cross product of the two coplanar vectors defined by the two pairs of points <math>(p_1, p_2)</math> and <math>(p_1, p_3)</math>. The sign of the acute angle is the sign of the expression | ||

| + | :<math> P = (x_2-x_1)(y_3-y_1)-(y_2-y_1)(x_3-x_1),</math> | ||

| + | which is the signed length of the cross product of the two vectors. | ||

| + | |||

| + | In the "right-handed" coordinate system, if the result is 0, the points are collinear; if it is positive, the three points constitute a positive angle of rotation around <math> p_1</math> from <math> p_2</math> to <math> p_3</math>, otherwise a negative angle. From another point of view, the sign of <math>P</math> tells whether <math> p_3</math> lies to the left or to the right of line <math> p_1, p_2.</math> | ||
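The sign test for P fits in a few lines. This is a minimal sketch and not from the source. It assumes the usual axes with x to the right and y up, so a positive P means p<sub>3</sub> lies to the left of the directed line from p<sub>1</sub> to p<sub>2</sub>. The function names are hypothetical.

```python
def orientation(p1, p2, p3):
    """P = (x2 - x1)(y3 - y1) - (y2 - y1)(x3 - x1) for points in the plane."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    return (x2 - x1) * (y3 - y1) - (y2 - y1) * (x3 - x1)


def side_of_line(p1, p2, p3):
    """Where p3 lies relative to the directed line from p1 to p2 (x right, y up)."""
    P = orientation(p1, p2, p3)
    if P > 0:
        return "left"        # counterclockwise turn p1 -> p2 -> p3
    if P < 0:
        return "right"       # clockwise turn
    return "collinear"


print(side_of_line((0, 0), (1, 0), (0, 1)))    # left
print(side_of_line((0, 0), (1, 0), (0, -1)))   # right
print(side_of_line((0, 0), (1, 1), (2, 2)))    # collinear
```

With integer coordinates the test is exact. With floating-point coordinates, results near zero may need a tolerance.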

| + | |||

| + | The cross product is used in calculating the volume of a polyhedron such as a tetrahedron or parallelepiped. | ||

| + | |||

| + | ===Angular momentum and torque=== | ||

| + | |||

| + | The angular momentum {{math|'''L'''}} of a particle about a given origin is defined as: | ||

| + | |||

| + | : <math>\mathbf{L} = \mathbf{r} \times \mathbf{p},</math> | ||

| + | |||

| + | where {{math|'''r'''}} is the position vector of the particle relative to the origin and {{math|'''p'''}} is the linear momentum of the particle. | ||

| + | |||

| + | In the same way, the moment {{math|'''M'''}} of a force {{math|'''F'''<sub>B</sub>}} applied at point B around point A is given as: | ||

| + | |||

| + | : <math> \mathbf{M}_\mathrm{A} = \mathbf{r}_\mathrm{AB} \times \mathbf{F}_\mathrm{B}\,</math> | ||

| + | |||

| + | In mechanics the ''moment of a force'' is also called ''torque'' and written as <math>\boldsymbol{\tau}</math>. | ||

| + | |||

| + | Since position {{math|'''r'''}}, linear momentum {{math|'''p'''}} and force {{math|'''F'''}} are all ''true'' vectors, both the angular momentum {{math|'''L'''}} and the moment of a force {{math|'''M'''}} are ''pseudovectors'' or ''axial vectors''. | ||
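Both formulas can be evaluated directly. The following is a small numeric sketch that is not from the source. The vectors and SI units are illustrative example values only.

```python
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


# Moment about A of a force applied at B: M_A = r_AB x F_B (example values).
r_AB = (0.5, 0.0, 0.0)    # lever arm from A to B, in metres
F_B = (0.0, 20.0, 0.0)    # force applied at B, in newtons
print(cross(r_AB, F_B))   # (0.0, 0.0, 10.0), in N·m

# Angular momentum of a particle about the origin: L = r x p (example values).
r = (1.0, 0.0, 0.0)       # position, in metres
p = (0.0, 2.0, 0.0)       # linear momentum, in kg·m/s
print(cross(r, p))        # (0.0, 0.0, 2.0), in kg·m²/s
```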

| + | |||

| + | ===Rigid body=== | ||

| + | The cross product frequently appears in the description of rigid motions. Two points ''P'' and ''Q'' on a rigid body can be related by: | ||

| + | |||

| + | : <math>\mathbf{v}_P - \mathbf{v}_Q = \boldsymbol\omega \times \left( \mathbf{r}_P - \mathbf{r}_Q \right)\,</math> | ||

| + | |||

| + | where <math>\mathbf{r}</math> is the point's position, <math>\mathbf{v}</math> is its velocity and <math>\boldsymbol\omega</math> is the body's angular velocity. | ||

| + | |||

| + | Since position <math>\mathbf{r}</math> and velocity <math>\mathbf{v}</math> are ''true'' vectors, the angular velocity <math>\boldsymbol\omega</math> is a ''pseudovector'' or ''axial vector''. | ||
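The rigid-body relation gives the velocity of any point from one known point and the angular velocity. This is a minimal sketch and not from the source. The function name and the example values are hypothetical.

```python
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def point_velocity(v_Q, omega, r_P, r_Q):
    """Velocity of point P on a rigid body: v_P = v_Q + omega x (r_P - r_Q)."""
    offset = tuple(p - q for p, q in zip(r_P, r_Q))
    spin = cross(omega, offset)
    return tuple(v + s for v, s in zip(v_Q, spin))


# Example: a disc turning at 2 rad/s about the z axis, centre Q at rest at the origin.
print(point_velocity((0, 0, 0), (0, 0, 2), (1, 0, 0), (0, 0, 0)))  # (0, 2, 0)
```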

| + | |||

| + | ===Lorentz force=== | ||

| + | The cross product is used to describe the Lorentz force experienced by a moving electric charge {{math|''q<sub>e</sub>''}}: | ||

| + | : <math>\mathbf{F} = q_e \left( \mathbf{E}+ \mathbf{v} \times \mathbf{B} \right)</math> | ||

| + | |||

| + | Since velocity {{math|'''v'''}}, force {{math|'''F'''}} and electric field {{math|'''E'''}} are all ''true'' vectors, the magnetic field {{math|'''B'''}} is a ''pseudovector''. | ||
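The Lorentz force formula translates directly into code. The following sketch is an illustration and not from the source. The function name and the unitless example values are assumptions.

```python
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def lorentz_force(q, E, v, B):
    """F = q (E + v x B) for a point charge q."""
    v_cross_B = cross(v, B)
    return tuple(q * (e + w) for e, w in zip(E, v_cross_B))


# A positive charge moving along +x through a magnetic field along +z (illustrative units).
print(lorentz_force(2, (0, 0, 0), (1, 0, 0), (0, 0, 1)))  # (0, -2, 0)
```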

| + | |||

| + | === Other === | ||

| + | In vector calculus, the cross product is used to define the formula for the vector operator curl. | ||

| + | |||

| + | The trick of rewriting a cross product in terms of a matrix multiplication appears frequently in epipolar and multi-view geometry, in particular when deriving matching constraints. | ||

| + | |||

| + | == As an external product == | ||

| + | [[File:Exterior calc cross product.svg|right|thumb|The cross product in relation to the exterior product. In red are the orthogonal unit vector, and the "parallel" unit bivector.]] | ||

| + | The cross product can be defined in terms of the exterior product. It can be generalized to an external product in other than three dimensions. This view allows for a natural geometric interpretation of the cross product. In exterior algebra the exterior product of two vectors is a bivector. A bivector is an oriented plane element, in much the same way that a vector is an oriented line element. Given two vectors ''a'' and ''b'', one can view the bivector ''a'' ∧ ''b'' as the oriented parallelogram spanned by ''a'' and ''b''. The cross product is then obtained by taking the Hodge star of the bivector ''a'' ∧ ''b'', mapping 2-vectors to vectors: | ||

| + | |||

| + | :<math>a \times b = \star (a \wedge b) \,.</math> | ||

| + | |||

| + | This can be thought of as the oriented multi-dimensional element "perpendicular" to the bivector. Only in three dimensions is the result an oriented one-dimensional element – a vector – whereas, for example, in four dimensions the Hodge dual of a bivector is two-dimensional – a bivector. So, only in three dimensions can a vector cross product of ''a'' and ''b'' be defined as the vector dual to the bivector ''a'' ∧ ''b'': it is perpendicular to the bivector, with orientation dependent on the coordinate system's handedness, and has the same magnitude relative to the unit normal vector as ''a'' ∧ ''b'' has relative to the unit bivector; precisely the properties described above. | ||

| + | |||

| + | == Handedness == | ||

| + | |||

| + | === Consistency === | ||

| + | When physics laws are written as equations, it is possible to make an arbitrary choice of the coordinate system, including handedness. One should be careful to never write down an equation where the two sides do not behave equally under all transformations that need to be considered. For example, if one side of the equation is a cross product of two polar vectors, one must take into account that the result is an axial vector. Therefore, for consistency, the other side must also be an axial vector. More generally, the result of a cross product may be either a polar vector or an axial vector, depending on the type of its operands (polar vectors or axial vectors). Namely, polar vectors and axial vectors are interrelated in the following ways under application of the cross product: | ||

| + | |||

| + | * polar vector × polar vector = axial vector | ||

| + | * axial vector × axial vector = axial vector | ||

| + | * polar vector × axial vector = polar vector | ||

| + | * axial vector × polar vector = polar vector | ||


| + | |||

| + | Because the cross product may also be a polar vector, it may not change direction with a mirror image transformation. This happens, according to the above relationships, if one of the operands is a polar vector and the other one is an axial vector (e.g., one that is itself the cross product of two polar vectors). For instance, a vector triple product involving three polar vectors is a polar vector. | ||

| + | |||

| + | A handedness-free approach is possible using exterior algebra. | ||

| + | |||

| + | === The paradox of the orthonormal basis === | ||

| + | |||

| + | Let ('''i''', '''j''', '''k''') be an orthonormal basis. The vectors '''i''', '''j''' and '''k''' don't depend on the orientation of the space. They can even be defined in the absence of any orientation. They therefore cannot be axial vectors. But if '''i''' and '''j''' are polar vectors, then '''k''' is an axial vector, since '''i''' × '''j''' = '''k''' or '''j''' × '''i''' = '''k'''. This is a paradox. | ||

| + | |||

| + | "Axial" and "polar" are ''physical'' qualifiers for ''physical'' vectors; that is, vectors which represent ''physical'' quantities such as the velocity or the magnetic field. The vectors '''i''', '''j''' and '''k''' are mathematical vectors, neither axial nor polar. In mathematics, the cross-product of two vectors is a vector. There is no contradiction. | ||

==Resources== | ==Resources== | ||

* [https://openstax.org/books/calculus-volume-3/pages/2-4-the-cross-product The Cross Product], OpenStax | * [https://openstax.org/books/calculus-volume-3/pages/2-4-the-cross-product The Cross Product], OpenStax | ||

| + | * [https://omega0.xyz/omega8008/calc3/cross-product-dir/cornell-lecture.html Cross Product], Cornell University | ||

| + | |||

| + | == Licensing == | ||

| + | Content obtained and/or adapted from: | ||

| + | * [https://en.wikipedia.org/wiki/Cross_product Cross product, Wikipedia] under a CC BY-SA license | ||

Latest revision as of 13:57, 20 January 2022

In mathematics, the cross product or vector product (occasionally directed area product, to emphasize its geometric significance) is a binary operation on two vectors in a three-dimensional oriented Euclidean vector space (named here E), and is denoted by the symbol ×. Given two linearly independent vectors a and b, the cross product, a × b (read "a cross b"), is a vector that is perpendicular to both a and b, and thus normal to the plane containing them. It has many applications in mathematics, physics, engineering, and computer programming. It should not be confused with the dot product (projection product).

If two vectors have the same direction or have the exact opposite direction from each other (that is, they are not linearly independent), or if either one has zero length, then their cross product is zero. More generally, the magnitude of the product equals the area of a parallelogram with the vectors for sides; in particular, the magnitude of the product of two perpendicular vectors is the product of their lengths.

The cross product is anticommutative (that is, a × b = − b × a) and is distributive over addition (that is, a × (b + c) = a × b + a × c). The space E together with the cross product is an algebra over the real numbers, which is neither commutative nor associative, but is a Lie algebra with the cross product being the Lie bracket.

Like the dot product, it depends on the metric of Euclidean space, but unlike the dot product, it also depends on a choice of orientation (or "handedness") of the space (which is why an oriented space is needed). In connection with the cross product, the exterior product of vectors can be used in arbitrary dimensions (with a bivector or 2-form result) and is independent of the orientation of the space.

The product can be generalized in various ways. Using the orientation and metric structure just as for the traditional 3-dimensional cross product, one can, in n dimensions, take the product of n − 1 vectors to produce a vector perpendicular to all of them. But if the product is limited to non-trivial binary products with vector results, it exists only in three and seven dimensions.


Definition

The cross product of two vectors a and b is defined only in three-dimensional space and is denoted by a × b. In physics and applied mathematics, the wedge notation a ∧ b is often used (in conjunction with the name vector product), although in pure mathematics such notation is usually reserved for just the exterior product, an abstraction of the vector product to n dimensions.

The cross product a × b is defined as a vector c that is perpendicular (orthogonal) to both a and b, with a direction given by the right-hand rule and a magnitude equal to the area of the parallelogram that the vectors span.

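The cross product is defined by the formula

:<math>\mathbf{a}\times\mathbf{b} = \left\|\mathbf{a}\right\| \left\|\mathbf{b}\right\| \sin(\theta)\, \mathbf{n},</math>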

where:

- θ is the angle between a and b in the plane containing them (hence, it is between 0° and 180°)

- ‖a‖ and ‖b‖ are the magnitudes of vectors a and b

- and n is a unit vector perpendicular to the plane containing a and b, in the direction given by the right-hand rule (illustrated).

If the vectors a and b are parallel (that is, the angle θ between them is either 0° or 180°), by the above formula, the cross product of a and b is the zero vector 0.

Direction

By convention, the direction of the vector n is given by the right-hand rule, where one simply points the forefinger of the right hand in the direction of a and the middle finger in the direction of b. Then, the vector n is coming out of the thumb (see the adjacent picture). Using this rule implies that the cross product is anti-commutative; that is, b × a = −(a × b). By pointing the forefinger toward b first, and then pointing the middle finger toward a, the thumb will be forced in the opposite direction, reversing the sign of the product vector.

As the cross product operator depends on the orientation of the space (as is explicit in the definition above), the cross product of two vectors is not a "true" vector, but a pseudovector.

Names

In 1881, Josiah Willard Gibbs, and independently Oliver Heaviside, introduced both the dot product and the cross product using a period (a . b) and an "x" (a x b), respectively, to denote them.

In 1877, to emphasize the fact that the result of a dot product is a scalar while the result of a cross product is a vector, William Kingdon Clifford coined the alternative names scalar product and vector product for the two operations. These alternative names are still widely used in the literature.

Both the cross notation (a × b) and the name cross product were possibly inspired by the fact that each scalar component of a × b is computed by multiplying non-corresponding components of a and b. Conversely, a dot product a ⋅ b involves multiplications between corresponding components of a and b. As explained below, the cross product can be expressed in the form of a determinant of a special 3 × 3 matrix. According to Sarrus's rule, this involves multiplications between matrix elements identified by crossed diagonals.

Computing

Coordinate notation

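If (i, j, k) is a positively oriented orthonormal basis, the basis vectors satisfy the following equalities

:<math>\mathbf{i}\times\mathbf{j} = \mathbf{k}, \quad \mathbf{j}\times\mathbf{k} = \mathbf{i}, \quad \mathbf{k}\times\mathbf{i} = \mathbf{j},</math>

which imply, by the anticommutativity of the cross product, that

:<math>\mathbf{j}\times\mathbf{i} = -\mathbf{k}, \quad \mathbf{k}\times\mathbf{j} = -\mathbf{i}, \quad \mathbf{i}\times\mathbf{k} = -\mathbf{j}.</math>

The anticommutativity of the cross product (and the obvious lack of linear independence) also implies that

- <math>\mathbf{i}\times\mathbf{i} = \mathbf{j}\times\mathbf{j} = \mathbf{k}\times\mathbf{k} = \mathbf{0}</math> (the zero vector).

These equalities, together with the distributivity and linearity of the cross product (though neither follows easily from the definition given above), are sufficient to determine the cross product of any two vectors a and b. Each vector can be defined as the sum of three orthogonal components parallel to the standard basis vectors:

:<math>\mathbf{a} = a_1\mathbf{i} + a_2\mathbf{j} + a_3\mathbf{k}, \quad \mathbf{b} = b_1\mathbf{i} + b_2\mathbf{j} + b_3\mathbf{k}.</math>

Their cross product a × b can be expanded using distributivity:

:<math>\begin{align}
\mathbf{a}\times\mathbf{b} = {} & (a_1\mathbf{i} + a_2\mathbf{j} + a_3\mathbf{k}) \times (b_1\mathbf{i} + b_2\mathbf{j} + b_3\mathbf{k}) \\
= {} & a_1b_1(\mathbf{i}\times\mathbf{i}) + a_1b_2(\mathbf{i}\times\mathbf{j}) + a_1b_3(\mathbf{i}\times\mathbf{k}) \\
& + a_2b_1(\mathbf{j}\times\mathbf{i}) + a_2b_2(\mathbf{j}\times\mathbf{j}) + a_2b_3(\mathbf{j}\times\mathbf{k}) \\
& + a_3b_1(\mathbf{k}\times\mathbf{i}) + a_3b_2(\mathbf{k}\times\mathbf{j}) + a_3b_3(\mathbf{k}\times\mathbf{k}).
\end{align}</math>

This can be interpreted as the decomposition of a × b into the sum of nine simpler cross products involving vectors aligned with i, j, or k. Each one of these nine cross products operates on two vectors that are easy to handle as they are either parallel or orthogonal to each other. From this decomposition, by using the above-mentioned equalities and collecting similar terms, we obtain:

:<math>\mathbf{a}\times\mathbf{b} = (a_2b_3 - a_3b_2)\mathbf{i} + (a_3b_1 - a_1b_3)\mathbf{j} + (a_1b_2 - a_2b_1)\mathbf{k},</math>

meaning that the three scalar components of the resulting vector <math>\mathbf{s} = s_1\mathbf{i} + s_2\mathbf{j} + s_3\mathbf{k} = \mathbf{a}\times\mathbf{b}</math> are

:<math>s_1 = a_2b_3 - a_3b_2, \quad s_2 = a_3b_1 - a_1b_3, \quad s_3 = a_1b_2 - a_2b_1.</math>

Using column vectors, we can represent the same result as follows:

:<math>\begin{bmatrix}s_1\\s_2\\s_3\end{bmatrix} = \begin{bmatrix}a_2b_3 - a_3b_2\\a_3b_1 - a_1b_3\\a_1b_2 - a_2b_1\end{bmatrix}</math>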

Matrix notation

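The cross product can also be expressed as the formal determinant:

:<math>\mathbf{a}\times\mathbf{b} = \begin{vmatrix}\mathbf{i}&\mathbf{j}&\mathbf{k}\\a_1&a_2&a_3\\b_1&b_2&b_3\end{vmatrix}</math>

This determinant can be computed using Sarrus's rule or cofactor expansion. Using Sarrus's rule, it expands to

:<math>\mathbf{a}\times\mathbf{b} = (a_2b_3\mathbf{i} + a_3b_1\mathbf{j} + a_1b_2\mathbf{k}) - (a_3b_2\mathbf{i} + a_1b_3\mathbf{j} + a_2b_1\mathbf{k}).</math>

Using cofactor expansion along the first row instead, it expands to

:<math>\mathbf{a}\times\mathbf{b} = \begin{vmatrix}a_2&a_3\\b_2&b_3\end{vmatrix}\mathbf{i} - \begin{vmatrix}a_1&a_3\\b_1&b_3\end{vmatrix}\mathbf{j} + \begin{vmatrix}a_1&a_2\\b_1&b_2\end{vmatrix}\mathbf{k} = (a_2b_3 - a_3b_2)\mathbf{i} - (a_1b_3 - a_3b_1)\mathbf{j} + (a_1b_2 - a_2b_1)\mathbf{k},</math>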

which gives the components of the resulting vector directly.

Using Levi-Civita tensors

- In any basis, the cross product a × b is given by the tensorial formula <math>(\mathbf{a}\times\mathbf{b})_k = E_{ijk}a^ib^j</math>, where <math>E_{ijk}</math> is the covariant Levi-Civita tensor (note the position of the indices). That corresponds to the intrinsic formula given here.

- In an orthonormal basis having the same orientation as the space, a × b is given by the pseudo-tensorial formula <math>(\mathbf{a}\times\mathbf{b})_k = \varepsilon_{ijk}a^ib^j</math>, where <math>\varepsilon_{ijk}</math> is the Levi-Civita symbol (which is a pseudo-tensor). That’s the formula used for everyday physics, but it works only for this special choice of basis.

- In any orthonormal basis, a × b is given by the pseudo-tensorial formula <math>(\mathbf{a}\times\mathbf{b})_k = \pm\,\varepsilon_{ijk}a^ib^j</math>, where the sign (+1 or −1) indicates whether the basis has the same orientation as the space or not.

The latter formula avoids having to change the orientation of the space when we switch to an orthonormal basis of the opposite orientation.

Properties

Geometric meaning

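The magnitude of the cross product can be interpreted as the positive area of the parallelogram having a and b as sides (see Figure 1):

:<math>\left\|\mathbf{a}\times\mathbf{b}\right\| = \left\|\mathbf{a}\right\| \left\|\mathbf{b}\right\| \left|\sin\theta\right|.</math>

Indeed, one can also compute the volume V of a parallelepiped having a, b and c as edges by using a combination of a cross product and a dot product, called scalar triple product (see Figure 2):

:<math>\mathbf{a}\cdot(\mathbf{b}\times\mathbf{c}) = \mathbf{b}\cdot(\mathbf{c}\times\mathbf{a}) = \mathbf{c}\cdot(\mathbf{a}\times\mathbf{b}).</math>

Since the result of the scalar triple product may be negative, the volume of the parallelepiped is given by its absolute value:

:<math>V = \left|\mathbf{a}\cdot(\mathbf{b}\times\mathbf{c})\right|.</math>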

Because the magnitude of the cross product goes by the sine of the angle between its arguments, the cross product can be thought of as a measure of perpendicularity in the same way that the dot product is a measure of parallelism. Given two unit vectors, their cross product has a magnitude of 1 if the two are perpendicular and a magnitude of zero if the two are parallel. The dot product of two unit vectors behaves just oppositely: it is zero when the unit vectors are perpendicular and 1 if the unit vectors are parallel.

Unit vectors enable two convenient identities. The dot product of two unit vectors yields the cosine (which may be positive or negative) of the angle between them, and the magnitude of their cross product yields the sine (which is never negative, since the angle lies between 0° and 180°).
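The area and volume interpretations above can be sketched numerically. This is an illustration under our own naming and is not taken from the source. The parallelogram area is the length of a × b, and the parallelepiped volume is the absolute value of the scalar triple product. An odd permutation of the edges flips the sign of the triple product but not the volume.

```python
import math


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def parallelogram_area(a, b):
    """||a x b||: area of the parallelogram with sides a and b."""
    return math.sqrt(dot(cross(a, b), cross(a, b)))


def scalar_triple(a, b, c):
    """a . (b x c): signed volume of the parallelepiped with edges a, b, c."""
    return dot(a, cross(b, c))


def parallelepiped_volume(a, b, c):
    return abs(scalar_triple(a, b, c))


a, b, c = (1, 0, 0), (0, 2, 0), (0, 0, 3)
print(parallelogram_area(a, b))         # 2.0
print(scalar_triple(a, b, c))           # 6
print(scalar_triple(b, a, c))           # -6 (odd permutation flips the sign)
print(parallelepiped_volume(b, a, c))   # 6
```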

Algebraic properties

If the cross product of two vectors is the zero vector (that is, a × b = 0), then either one or both of the inputs is the zero vector, (a = 0 or b = 0) or else they are parallel or antiparallel (a ∥ b) so that the sine of the angle between them is zero (θ = 0° or θ = 180° and sin θ = 0).

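The self cross product of a vector is the zero vector:

:<math>\mathbf{a}\times\mathbf{a} = \mathbf{0}.</math>

The cross product is anticommutative,

:<math>\mathbf{a}\times\mathbf{b} = -(\mathbf{b}\times\mathbf{a}),</math>

distributive over addition,

:<math>\mathbf{a}\times(\mathbf{b}+\mathbf{c}) = (\mathbf{a}\times\mathbf{b}) + (\mathbf{a}\times\mathbf{c}),</math>

and compatible with scalar multiplication so that

:<math>(r\,\mathbf{a})\times\mathbf{b} = \mathbf{a}\times(r\,\mathbf{b}) = r\,(\mathbf{a}\times\mathbf{b}).</math>

It is not associative, but satisfies the Jacobi identity:

:<math>\mathbf{a}\times(\mathbf{b}\times\mathbf{c}) + \mathbf{b}\times(\mathbf{c}\times\mathbf{a}) + \mathbf{c}\times(\mathbf{a}\times\mathbf{b}) = \mathbf{0}.</math>

Distributivity, linearity and the Jacobi identity show that the R3 vector space together with vector addition and the cross product forms a Lie algebra, the Lie algebra of the real orthogonal group in 3 dimensions, SO(3). The cross product does not obey the cancellation law; that is, a × b = a × c with a ≠ 0 does not imply b = c, but only that:

:<math>\mathbf{0} = (\mathbf{a}\times\mathbf{b}) - (\mathbf{a}\times\mathbf{c}) = \mathbf{a}\times(\mathbf{b}-\mathbf{c}).</math>

This can be the case where b and c cancel, but additionally where a and b − c are parallel; that is, they are related by a scale factor t, leading to:

:<math>\mathbf{c} = \mathbf{b} + t\,\mathbf{a},</math>

for some scalar t.

If, in addition to a × b = a × c and a ≠ 0 as above, it is the case that a ⋅ b = a ⋅ c then

:<math>\mathbf{a}\times(\mathbf{b}-\mathbf{c}) = \mathbf{0}, \qquad \mathbf{a}\cdot(\mathbf{b}-\mathbf{c}) = 0.</math>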

As b − c cannot be simultaneously parallel (for the cross product to be 0) and perpendicular (for the dot product to be 0) to a, it must be the case that b and c cancel: b = c.
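A concrete counterexample shows why cancellation fails without the dot-product condition. The following small sketch is not from the source, and the numbers are arbitrary example values. Choosing c = b + t a with t = −2 gives a × b = a × c although b ≠ c, and the dot products then differ.

```python
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


a = (1, 2, 3)
b = (4, 5, 6)
t = -2
c = tuple(bi + t * ai for ai, bi in zip(a, b))   # c = b + t a = (2, 1, 0)

print(c != b)                       # True: b and c differ
print(cross(a, b) == cross(a, c))   # True: a x b = a x c anyway
print(dot(a, b), dot(a, c))         # 32 4: a . b = a . c does not hold
```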

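From the geometrical definition, the cross product is invariant under proper rotations about the axis defined by a × b. More generally, applying the same proper rotation to both arguments rotates their cross product in the same way. In formulae:

- <math>(R\mathbf{a}) \times (R\mathbf{b}) = R(\mathbf{a} \times \mathbf{b})</math>, where <math>R</math> is a rotation matrix with <math>\det(R) = 1</math>.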

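More generally, the cross product obeys the following identity under matrix transformations:

<math>(M\mathbf{a}) \times (M\mathbf{b}) = (\det M) \left(M^{-1}\right)^\mathrm{T} (\mathbf{a} \times \mathbf{b}) = \operatorname{cof}(M)\,(\mathbf{a} \times \mathbf{b}),</math>

where <math>M</math> is a 3-by-3 matrix, <math>\left(M^{-1}\right)^\mathrm{T}</math> is the transpose of its inverse (the middle expression requires <math>M</math> to be invertible), and <math>\operatorname{cof}(M)</math> is the cofactor matrix. It is easy to see how this formula reduces to the former one when <math>M</math> is a rotation matrix.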
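The identity (Ma) × (Mb) = cof(M)(a × b) can be spot-checked with integer arithmetic. This sketch makes the following assumptions:
- Matrices are tuples of row tuples.
- `cofactor` is a hypothetical helper that builds the cofactor matrix entry by entry from 2×2 minors.
- The test matrix is arbitrary.

```python
def cross(a, b):
    """Cross product of two 3-vectors stored as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def matvec(m, v):
    """Product of a 3x3 matrix (tuple of rows) and a 3-vector."""
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))

def cofactor(m):
    """Cofactor matrix: entry (i, j) is (-1)**(i + j) times the (i, j) minor."""
    rows = []
    for i in range(3):
        r = [k for k in range(3) if k != i]
        row = []
        for j in range(3):
            c = [k for k in range(3) if k != j]
            minor = m[r[0]][c[0]] * m[r[1]][c[1]] - m[r[0]][c[1]] * m[r[1]][c[0]]
            row.append((-1) ** (i + j) * minor)
        rows.append(tuple(row))
    return tuple(rows)

M = ((2, 1, 0), (0, 3, -1), (4, 0, 1))  # arbitrary integer matrix
a, b = (1, -2, 3), (0, 4, 1)

# (M a) x (M b) = cof(M) (a x b)
assert cross(matvec(M, a), matvec(M, b)) == matvec(cofactor(M), cross(a, b))

# A 90-degree rotation about z: cof(R) = R, giving (R a) x (R b) = R (a x b).
R = ((0, -1, 0), (1, 0, 0), (0, 0, 1))
assert cofactor(R) == R
assert cross(matvec(R, a), matvec(R, b)) == matvec(R, cross(a, b))
```

For the rotation about the z-axis, the cofactor matrix equals the matrix itself. This is the rotation case above.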

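The cross product of two vectors lies in the null space of the 2 × 3 matrix with the vectors as rows:

<math>\begin{pmatrix} \mathbf{a}^\mathrm{T} \\ \mathbf{b}^\mathrm{T} \end{pmatrix} (\mathbf{a} \times \mathbf{b}) = \mathbf{0}.</math>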

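For the sum of two cross products, the following identity holds:

<math>(\mathbf{a} \times \mathbf{b}) + (\mathbf{c} \times \mathbf{d}) = (\mathbf{a} - \mathbf{c}) \times (\mathbf{b} - \mathbf{d}) + (\mathbf{a} \times \mathbf{d}) + (\mathbf{c} \times \mathbf{b}).</math>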

Differentiation

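The product rule of differential calculus applies to any bilinear operation, and therefore also to the cross product:

<math>\frac{d}{dt}(\mathbf{a} \times \mathbf{b}) = \frac{d\mathbf{a}}{dt} \times \mathbf{b} + \mathbf{a} \times \frac{d\mathbf{b}}{dt},</math>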

where a and b are vectors that depend on the real variable t.
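The following Python sketch is a quick numerical sanity check of the product rule d/dt(a × b) = a′ × b + a × b′. The vector functions `a_of` and `b_of` are arbitrary examples chosen for illustration. Their derivatives are written out by hand. The left-hand side is estimated with a central difference:

```python
import math

def cross(a, b):
    """Cross product of two 3-vectors stored as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def add(a, b):
    return tuple(x + y for x, y in zip(a, b))

# Hypothetical example functions a(t), b(t) and their hand-computed derivatives.
def a_of(t):
    return (t, t * t, 1.0)

def da_dt(t):
    return (1.0, 2.0 * t, 0.0)

def b_of(t):
    return (math.cos(t), math.sin(t), t)

def db_dt(t):
    return (-math.sin(t), math.cos(t), 1.0)

t, h = 0.7, 1e-5
# Central-difference estimate of d/dt (a x b) at t
plus = cross(a_of(t + h), b_of(t + h))
minus = cross(a_of(t - h), b_of(t - h))
numeric = tuple((p - m) / (2 * h) for p, m in zip(plus, minus))
# Product rule: a' x b + a x b'
product_rule = add(cross(da_dt(t), b_of(t)), cross(a_of(t), db_dt(t)))
print(numeric)
print(product_rule)
```

The two printed triples should agree to roughly nine or ten significant digits. The central difference has an error of order h².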

Triple product expansion

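The cross product is used in both forms of the triple product. The scalar triple product of three vectors is defined as

<math>\mathbf{a} \cdot (\mathbf{b} \times \mathbf{c}).</math>

It is the signed volume of the parallelepiped with edges a, b and c. As such, the vectors can be used in any order that is an even permutation of the above ordering. The following are therefore equal:

<math>\mathbf{a} \cdot (\mathbf{b} \times \mathbf{c}) = \mathbf{b} \cdot (\mathbf{c} \times \mathbf{a}) = \mathbf{c} \cdot (\mathbf{a} \times \mathbf{b}).</math>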

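The vector triple product is the cross product of a vector with the result of another cross product. It is related to the dot product by the following formula:

<math>\mathbf{a} \times (\mathbf{b} \times \mathbf{c}) = \mathbf{b}(\mathbf{a} \cdot \mathbf{c}) - \mathbf{c}(\mathbf{a} \cdot \mathbf{b}).</math>

The mnemonic "BAC minus CAB" is used to remember the order of the vectors on the right-hand side. This formula is used in physics to simplify vector calculations. A special case involving gradients, useful in vector calculus, is

<math>\nabla \times (\nabla \times \mathbf{f}) = \nabla(\nabla \cdot \mathbf{f}) - (\nabla \cdot \nabla)\,\mathbf{f} = \nabla(\nabla \cdot \mathbf{f}) - \nabla^2 \mathbf{f},</math>

where ∇² is the vector Laplacian operator.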

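Other identities relate the cross product to the scalar triple product:

<math>(\mathbf{a} \times \mathbf{b}) \times (\mathbf{a} \times \mathbf{c}) = \big(\mathbf{a} \cdot (\mathbf{b} \times \mathbf{c})\big)\,\mathbf{a},</math>

<math>(\mathbf{a} \times \mathbf{b}) \cdot (\mathbf{c} \times \mathbf{d}) = \mathbf{b}^\mathrm{T} \left( \left(\mathbf{c}^\mathrm{T} \mathbf{a}\right) I - \mathbf{c}\,\mathbf{a}^\mathrm{T} \right) \mathbf{d} = (\mathbf{a} \cdot \mathbf{c})(\mathbf{b} \cdot \mathbf{d}) - (\mathbf{a} \cdot \mathbf{d})(\mathbf{b} \cdot \mathbf{c}),</math>

where I is the identity matrix.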
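The following minimal Python sketch verifies three triple-product identities on integer vectors:
- the cyclic symmetry a · (b × c) = b · (c × a) = c · (a × b);
- the "BAC minus CAB" rule a × (b × c) = b(a · c) − c(a · b);
- (a × b) × (a × c) = (a · (b × c)) a.

`triple` is a hypothetical helper that computes u · (v × w):

```python
def cross(a, b):
    """Cross product of two 3-vectors stored as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def scale(t, a):
    return tuple(t * x for x in a)

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def triple(u, v, w):
    """Scalar triple product u . (v x w): the signed volume spanned by u, v, w."""
    return dot(u, cross(v, w))

a, b, c = (1, 2, 3), (-2, 0, 5), (4, -1, 2)

# Even (cyclic) permutations keep the value; an odd one flips its sign.
assert triple(a, b, c) == triple(b, c, a) == triple(c, a, b)
assert triple(b, a, c) == -triple(a, b, c)

# "BAC minus CAB": a x (b x c) = b (a . c) - c (a . b)
assert cross(a, cross(b, c)) == sub(scale(dot(a, c), b), scale(dot(a, b), c))

# (a x b) x (a x c) = (a . (b x c)) a
assert cross(cross(a, b), cross(a, c)) == scale(triple(a, b, c), a)
```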

Alternative formulation

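The cross product and the dot product are related by:

<math>\|\mathbf{a} \times \mathbf{b}\|^2 = \det\begin{pmatrix} \mathbf{a} \cdot \mathbf{a} & \mathbf{a} \cdot \mathbf{b} \\ \mathbf{a} \cdot \mathbf{b} & \mathbf{b} \cdot \mathbf{b} \end{pmatrix} = \|\mathbf{a}\|^2 \|\mathbf{b}\|^2 - (\mathbf{a} \cdot \mathbf{b})^2.</math>

The right-hand side is the Gram determinant of a and b, the square of the area of the parallelogram defined by the vectors. This condition determines the magnitude of the cross product. Namely, since the dot product is defined, in terms of the angle θ between the two vectors, as:

<math>\mathbf{a} \cdot \mathbf{b} = \|\mathbf{a}\| \, \|\mathbf{b}\| \cos\theta,</math>

the above relationship can be rewritten as follows:

<math>\|\mathbf{a} \times \mathbf{b}\|^2 = \|\mathbf{a}\|^2 \|\mathbf{b}\|^2 \left(1 - \cos^2\theta\right).</math>

Invoking the Pythagorean trigonometric identity, one obtains:

<math>\|\mathbf{a} \times \mathbf{b}\| = \|\mathbf{a}\| \, \|\mathbf{b}\| \, \left|\sin\theta\right|,</math>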

which is the magnitude of the cross product expressed in terms of θ, equal to the area of the parallelogram defined by a and b.

The combination of this requirement and the property that the cross product be orthogonal to its constituents a and b provides an alternative definition of the cross product.
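This Python sketch illustrates the relationship |a × b|² = |a|²|b|² − (a · b)² = |a|²|b|² sin²θ. It uses integer vectors so that the Gram-determinant comparison is exact. The angle-based magnitude is then compared in floating point. The sketch also checks that a × b is orthogonal to both of its arguments:

```python
import math

def cross(a, b):
    """Cross product of two 3-vectors stored as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

a, b = (3, -1, 2), (1, 4, -2)
axb = cross(a, b)

# |a x b|^2 equals the Gram determinant |a|^2 |b|^2 - (a . b)^2 (exact for integers)
gram = dot(a, a) * dot(b, b) - dot(a, b) ** 2
assert dot(axb, axb) == gram

# a x b is orthogonal to both of its arguments
assert dot(axb, a) == 0 and dot(axb, b) == 0

# The same magnitude from |a| |b| sin(theta)
theta = math.acos(dot(a, b) / math.sqrt(dot(a, a) * dot(b, b)))
print(math.sqrt(gram), math.sqrt(dot(a, a) * dot(b, b)) * math.sin(theta))
```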

Lagrange's identity

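The relation:

<math>\|\mathbf{a} \times \mathbf{b}\|^2 = \|\mathbf{a}\|^2 \|\mathbf{b}\|^2 - (\mathbf{a} \cdot \mathbf{b})^2</math>

can be compared with another relation involving the right-hand side, namely Lagrange's identity expressed as:

<math>\|\mathbf{a}\|^2 \|\mathbf{b}\|^2 - (\mathbf{a} \cdot \mathbf{b})^2 = \sum_{1 \le i < j \le n} \left(a_i b_j - a_j b_i\right)^2,</math>

where a and b may be n-dimensional vectors. This also shows that the Riemannian volume form for surfaces is exactly the surface element from vector calculus. In the case where n = 3, combining these two equations gives the magnitude of the cross product in terms of its components:

<math>\|\mathbf{a} \times \mathbf{b}\|^2 = (a_1 b_2 - a_2 b_1)^2 + (a_2 b_3 - a_3 b_2)^2 + (a_3 b_1 - a_1 b_3)^2.</math>

The same result follows directly from the components of the cross product, which are found from:

<math>\mathbf{a} \times \mathbf{b} = \det\begin{pmatrix} \mathbf{i} & \mathbf{j} & \mathbf{k} \\ a_1 & a_2 & a_3 \\ b_1 & b_2 & b_3 \end{pmatrix}.</math>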

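In R3, Lagrange's identity is a special case of the multiplicativity |vw| = |v||w| of the norm in the quaternion algebra.

It is also a special case of another formula, sometimes called Lagrange's identity as well, which is the three-dimensional case of the Binet–Cauchy identity:

<math>(\mathbf{a} \times \mathbf{b}) \cdot (\mathbf{c} \times \mathbf{d}) = (\mathbf{a} \cdot \mathbf{c})(\mathbf{b} \cdot \mathbf{d}) - (\mathbf{a} \cdot \mathbf{d})(\mathbf{b} \cdot \mathbf{c}).</math>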

If a = c and b = d this simplifies to the formula above.
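Both forms of Lagrange's identity can be exercised in Python. The first is |a|²|b|² − (a · b)² = Σ_{i<j} (a_i b_j − a_j b_i)². The second is the Binet–Cauchy form (a × b) · (c × d) = (a · c)(b · d) − (a · d)(b · c). In this sketch, `lagrange_rhs` is an illustrative helper for the sum over i < j. It works for vectors of any length, whereas `cross` is only defined for three components:

```python
from itertools import combinations

def cross(a, b):
    """Cross product of two 3-vectors stored as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def lagrange_rhs(a, b):
    """Sum over i < j of (a_i b_j - a_j b_i)^2, for vectors of any length n."""
    return sum((a[i] * b[j] - a[j] * b[i]) ** 2
               for i, j in combinations(range(len(a)), 2))

# n = 5: Lagrange's identity needs no cross product
a5, b5 = (1, -2, 0, 3, 1), (2, 1, -1, 0, 4)
assert dot(a5, a5) * dot(b5, b5) - dot(a5, b5) ** 2 == lagrange_rhs(a5, b5)

# n = 3: the same sum equals |a x b|^2
a, b = (3, -1, 2), (1, 4, -2)
assert lagrange_rhs(a, b) == dot(cross(a, b), cross(a, b))

# Binet-Cauchy: (a x b) . (c x d) = (a . c)(b . d) - (a . d)(b . c)
c, d = (0, 2, 5), (-1, 1, 1)
assert dot(cross(a, b), cross(c, d)) == dot(a, c) * dot(b, d) - dot(a, d) * dot(b, c)
```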

Infinitesimal generators of rotations

The cross product conveniently describes the infinitesimal generators of rotations in R3. Specifically, let n be a unit vector in R3 and let R(φ, n) denote a rotation about the axis through the origin specified by n, with angle φ (measured in radians, counterclockwise when viewed from the tip of n). Then

<math>\left.\frac{d}{d\varphi}\right|_{\varphi=0} R(\varphi, \mathbf{n})\,\mathbf{x} = \mathbf{n} \times \mathbf{x}</math>

for every vector x in R3. The cross product with n therefore describes the infinitesimal generator of the rotations about n. These infinitesimal generators form the Lie algebra so(3) of the rotation group SO(3), and we obtain the result that the Lie algebra R3 with cross product is isomorphic to the Lie algebra so(3).
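The statement that the derivative of R(φ, n)x at φ = 0 equals n × x can be checked numerically. The sketch below builds R(φ, n)x with Rodrigues' rotation formula, a standard closed form that this section does not itself state. It then compares a central difference at φ = 0 with n × x. The axis and test vector are arbitrary:

```python
import math

def cross(a, b):
    """Cross product of two 3-vectors stored as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def rotate(x, n, phi):
    """Rotate x by angle phi about the unit axis n (Rodrigues' rotation formula)."""
    c, s = math.cos(phi), math.sin(phi)
    n_cross_x = cross(n, x)
    k = dot(n, x) * (1.0 - c)
    return tuple(xi * c + wi * s + ni * k for xi, wi, ni in zip(x, n_cross_x, n))

n = (0.0, 0.6, 0.8)   # unit axis (0.36 + 0.64 = 1)
x = (1.0, 2.0, -0.5)
h = 1e-5
# Central difference of R(phi, n) x at phi = 0
generator = tuple((p - m) / (2 * h) for p, m in zip(rotate(x, n, h), rotate(x, n, -h)))
print(generator)
print(cross(n, x))
```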

Alternative ways to compute

Conversion to matrix multiplication

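The vector cross product can also be expressed as the product of a skew-symmetric matrix and a vector:

<math>\mathbf{a} \times \mathbf{b} = [\mathbf{a}]_\times \mathbf{b} = [\mathbf{b}]_\times^\mathrm{T} \mathbf{a},</math>

where superscript T refers to the transpose operation, and [a]× is defined by:

<math>[\mathbf{a}]_\times = \begin{pmatrix} 0 & -a_3 & a_2 \\ a_3 & 0 & -a_1 \\ -a_2 & a_1 & 0 \end{pmatrix}.</math>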

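The columns [a]×,i of the skew-symmetric matrix for a vector a can also be obtained by calculating the cross product with unit vectors. That is,

<math>[\mathbf{a}]_{\times,i} = \mathbf{a} \times \hat{\mathbf{e}}_i, \quad i \in \{1, 2, 3\},</math>

or

<math>[\mathbf{a}]_\times = \sum_{i=1}^{3} \left(\mathbf{a} \times \hat{\mathbf{e}}_i\right) \otimes \hat{\mathbf{e}}_i,</math>

where ⊗ is the outer product operator.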
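In Python, [a]× = ((0, −a3, a2), (a3, 0, −a1), (−a2, a1, 0)) can be built directly. It can also be built column by column from a × e_i. This is a sketch: `skew`, `matvec` and `transpose` are illustrative helpers, and matrices are tuples of row tuples:

```python
def cross(a, b):
    """Cross product of two 3-vectors stored as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def skew(a):
    """[a]_x: the skew-symmetric matrix (tuple of rows) with [a]_x b = a x b."""
    return ((0, -a[2], a[1]),
            (a[2], 0, -a[0]),
            (-a[1], a[0], 0))

def matvec(m, v):
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))

def transpose(m):
    return tuple(zip(*m))

a, b = (1, 2, 3), (-2, 0, 5)
assert matvec(skew(a), b) == cross(a, b)             # a x b = [a]_x b
assert matvec(transpose(skew(b)), a) == cross(a, b)  # a x b = [b]_x^T a

# Column i of [a]_x is a x e_i; stacking those columns rebuilds the matrix.
E = ((1, 0, 0), (0, 1, 0), (0, 0, 1))
columns = tuple(cross(a, e) for e in E)
assert transpose(columns) == skew(a)
```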

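Also, suppose a is itself expressed as a cross product:

<math>\mathbf{a} = \mathbf{c} \times \mathbf{d}.</math>

Then

<math>[\mathbf{a}]_\times = \mathbf{d}\mathbf{c}^\mathrm{T} - \mathbf{c}\mathbf{d}^\mathrm{T}.</math>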

Proof by substitution

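Evaluating the cross product gives

<math>\mathbf{a} = \mathbf{c} \times \mathbf{d} = \begin{pmatrix} c_2 d_3 - c_3 d_2 \\ c_3 d_1 - c_1 d_3 \\ c_1 d_2 - c_2 d_1 \end{pmatrix}.</math>

Hence, the left-hand side equals

<math>[\mathbf{a}]_\times = \begin{pmatrix} 0 & c_2 d_1 - c_1 d_2 & c_3 d_1 - c_1 d_3 \\ c_1 d_2 - c_2 d_1 & 0 & c_3 d_2 - c_2 d_3 \\ c_1 d_3 - c_3 d_1 & c_2 d_3 - c_3 d_2 & 0 \end{pmatrix}.</math>

Now, for the right-hand side,

<math>\mathbf{c}\mathbf{d}^\mathrm{T} = \begin{pmatrix} c_1 d_1 & c_1 d_2 & c_1 d_3 \\ c_2 d_1 & c_2 d_2 & c_2 d_3 \\ c_3 d_1 & c_3 d_2 & c_3 d_3 \end{pmatrix}.</math>

Its transpose is

<math>\mathbf{d}\mathbf{c}^\mathrm{T} = \begin{pmatrix} c_1 d_1 & c_2 d_1 & c_3 d_1 \\ c_1 d_2 & c_2 d_2 & c_3 d_2 \\ c_1 d_3 & c_2 d_3 & c_3 d_3 \end{pmatrix}.</math>

Evaluating the right-hand side gives

<math>\mathbf{d}\mathbf{c}^\mathrm{T} - \mathbf{c}\mathbf{d}^\mathrm{T} = \begin{pmatrix} 0 & c_2 d_1 - c_1 d_2 & c_3 d_1 - c_1 d_3 \\ c_1 d_2 - c_2 d_1 & 0 & c_3 d_2 - c_2 d_3 \\ c_1 d_3 - c_3 d_1 & c_2 d_3 - c_3 d_2 & 0 \end{pmatrix}.</math>

Comparison shows that the left-hand side equals the right-hand side.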

This result can be generalized to higher dimensions using geometric algebra. In particular in any dimension bivectors can be identified with skew-symmetric matrices, so the product between a skew-symmetric matrix and vector is equivalent to the grade-1 part of the product of a bivector and vector. In three dimensions bivectors are dual to vectors so the product is equivalent to the cross product, with the bivector instead of its vector dual. In higher dimensions the product can still be calculated but bivectors have more degrees of freedom and are not equivalent to vectors.

This notation is also often much easier to work with, for example, in epipolar geometry.

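It follows immediately from the general properties of the cross product that

<math>[\mathbf{a}]_\times \mathbf{a} = \mathbf{0} \quad\text{and}\quad \mathbf{a}^\mathrm{T} [\mathbf{a}]_\times = \mathbf{0}.</math>

Because [a]× is skew-symmetric, it also follows that

<math>\mathbf{b}^\mathrm{T} [\mathbf{a}]_\times \mathbf{b} = 0.</math>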

The above-mentioned triple product expansion (bac–cab rule) can be easily proven using this notation.

As mentioned above, the Lie algebra R3 with cross product is isomorphic to the Lie algebra so(3), whose elements can be identified with the 3×3 skew-symmetric matrices. The map a → [a]× provides an isomorphism between R3 and so(3). Under this map, the cross product of 3-vectors corresponds to the commutator of 3×3 skew-symmetric matrices.
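This correspondence can be spot-checked: the commutator [a]×[b]× − [b]×[a]× should equal [a × b]×. The following is a minimal Python sketch. The helpers `skew`, `matmul` and `matsub` are hypothetical names, and matrices are tuples of rows:

```python
def cross(a, b):
    """Cross product of two 3-vectors stored as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def skew(a):
    """[a]_x as a tuple of rows."""
    return ((0, -a[2], a[1]),
            (a[2], 0, -a[0]),
            (-a[1], a[0], 0))

def matmul(m, n):
    """Product of two 3x3 matrices stored as tuples of rows."""
    return tuple(tuple(sum(m[i][k] * n[k][j] for k in range(3)) for j in range(3))
                 for i in range(3))

def matsub(m, n):
    return tuple(tuple(x - y for x, y in zip(r, s)) for r, s in zip(m, n))

a, b = (1, 2, 3), (-2, 0, 5)
A, B = skew(a), skew(b)
commutator = matsub(matmul(A, B), matmul(B, A))
# The Lie bracket in so(3) matches the cross product in R^3.
assert commutator == skew(cross(a, b))
print(commutator)
```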

Matrix conversion for cross product with canonical base vectors

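Denote by <math>\mathbf{e}_n</math> the <math>n</math>-th canonical base vector. The cross product of a generic vector <math>\mathbf{v}</math> with <math>\mathbf{e}_n</math> is then given by <math>\mathbf{v} \times \mathbf{e}_n = C_n \mathbf{v}</math>, where

<math>C_1 = \begin{pmatrix} 0 & 0 & 0 \\ 0 & 0 & 1 \\ 0 & -1 & 0 \end{pmatrix}, \quad C_2 = \begin{pmatrix} 0 & 0 & -1 \\ 0 & 0 & 0 \\ 1 & 0 & 0 \end{pmatrix}, \quad C_3 = \begin{pmatrix} 0 & 1 & 0 \\ -1 & 0 & 0 \\ 0 & 0 & 0 \end{pmatrix}.</math>

These matrices share the following properties:

- <math>C_n^\mathrm{T} = -C_n</math> (skew-symmetric);

- Both trace and determinant are zero;

- <math>\operatorname{rank}(C_n) = 2</math>;

- <math>C_n C_n^\mathrm{T} = P^\perp_{\mathbf{e}_n}</math> (see below);

The orthogonal projection matrix of a vector <math>\mathbf{v} \neq \mathbf{0}</math> is given by <math>P_\mathbf{v} = \mathbf{v} \left(\mathbf{v}^\mathrm{T} \mathbf{v}\right)^{-1} \mathbf{v}^\mathrm{T}</math>. The projection matrix onto the orthogonal complement is given by <math>P^\perp_\mathbf{v} = I - P_\mathbf{v}</math>, where <math>I</math> is the identity matrix. For the special case of <math>\mathbf{v} = \mathbf{e}_n</math>, it can be verified that

<math>P^\perp_{\mathbf{e}_1} = \begin{pmatrix} 0 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix}, \quad P^\perp_{\mathbf{e}_2} = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 1 \end{pmatrix}, \quad P^\perp_{\mathbf{e}_3} = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 0 \end{pmatrix}.</math>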

For other properties of orthogonal projection matrices, see projection (linear algebra).
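The matrices C_n, defined by v × e_n = C_n v, can be built column by column. Column j is e_j × e_n, since v × e_n = Σ_j v_j (e_j × e_n). The Python sketch below does this and then checks the properties listed above. Indices are zero-based here, so `C(0)` is C_1. All helper names are illustrative:

```python
def cross(a, b):
    """Cross product of two 3-vectors stored as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def transpose(m):
    return tuple(zip(*m))

def matvec(m, v):
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))

def matmul(m, n):
    return tuple(tuple(sum(m[i][k] * n[k][j] for k in range(3)) for j in range(3))
                 for i in range(3))

E = ((1, 0, 0), (0, 1, 0), (0, 0, 1))  # canonical basis; also the identity matrix

def C(n):
    """Matrix with C(n) v = v x e_n; column j is e_j x e_n (n zero-based)."""
    return transpose(tuple(cross(E[j], E[n]) for j in range(3)))

v = (4, -1, 7)
for n in range(3):
    Cn = C(n)
    assert matvec(Cn, v) == cross(v, E[n])                                # v x e_n = C_n v
    assert transpose(Cn) == tuple(tuple(-x for x in row) for row in Cn)  # skew-symmetric
    assert sum(Cn[k][k] for k in range(3)) == 0                          # zero trace
    # C_n C_n^T equals the projector onto the orthogonal complement of e_n
    p_perp = tuple(tuple(E[r][s] - E[n][r] * E[n][s] for s in range(3)) for r in range(3))
    assert matmul(Cn, transpose(Cn)) == p_perp

print(C(0))  # ((0, 0, 0), (0, 0, 1), (0, -1, 0)), i.e. C_1
```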

Index notation for tensors

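The cross product can alternatively be defined in terms of the Levi-Civita tensor <math>E_{ijk}</math> and a dot product <math>\eta^{mi}</math>, which are useful in converting vector notation for tensor applications:

<math>\mathbf{c} = \mathbf{a} \times \mathbf{b} \Leftrightarrow c^m = \sum_{i=1}^3 \sum_{j=1}^3 \sum_{k=1}^3 \eta^{mi} E_{ijk} a^j b^k,</math>

where the indices <math>i, j, k</math> correspond to vector components. This characterization of the cross product is often expressed more compactly using the Einstein summation convention as

<math>\mathbf{c} = \mathbf{a} \times \mathbf{b} \Leftrightarrow c^m = \eta^{mi} E_{ijk} a^j b^k,</math>

in which repeated indices are summed over the values 1 to 3.

In a positively oriented orthonormal basis, <math>\eta^{mi} = \delta^{mi}</math> (the Kronecker delta) and <math>E_{ijk} = \varepsilon_{ijk}</math> (the Levi-Civita symbol). In that case, this representation is another form of the skew-symmetric representation of the cross product:

<math>c^i = \varepsilon_{ijk} a^j b^k = \left([\mathbf{a}]_\times\right)_{ik} b^k, \quad \left([\mathbf{a}]_\times\right)_{ik} = \varepsilon_{ijk} a^j.</math>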

In classical mechanics, representing the cross product with the Levi-Civita symbol can make mechanical symmetries obvious when physical systems are isotropic. (An example: consider a particle in a Hooke's law potential in three-space, free to oscillate in three dimensions. None of these dimensions is "special" in any sense, so symmetries lie in the cross-product-represented angular momentum, and the Levi-Civita representation above makes them clear.)
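Written out with explicit index sums, the Levi-Civita form c^i = ε_ijk a^j b^k translates almost literally into code. This sketch makes the following assumptions:
- The basis is orthonormal and positively oriented, so η is the identity.
- Indices run from 0 to 2.
- `levi_civita` uses the closed form (i − j)(j − k)(k − i)/2, which is valid for three indices.

```python
from itertools import product

def levi_civita(i, j, k):
    """epsilon_ijk for indices in {0, 1, 2}: +1 even permutation, -1 odd, 0 repeated index."""
    return (i - j) * (j - k) * (k - i) // 2

def cross_levi_civita(a, b):
    """c^i = epsilon_ijk a^j b^k, summing over j and k explicitly."""
    return tuple(sum(levi_civita(i, j, k) * a[j] * b[k]
                     for j, k in product(range(3), repeat=2))
                 for i in range(3))

a, b = (1, 2, 3), (-2, 0, 5)
print(cross_levi_civita(a, b))  # (10, -11, 4)

# The matrix with entries (i, k) = epsilon_ijk a^j is the skew-symmetric [a]_x.
skew_a = tuple(tuple(sum(levi_civita(i, j, k) * a[j] for j in range(3)) for k in range(3))
               for i in range(3))
assert skew_a == ((0, -a[2], a[1]), (a[2], 0, -a[0]), (-a[1], a[0], 0))
```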

Mnemonic

The word "xyzzy" can be used to remember the definition of the cross product.

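Suppose that

<math>\mathbf{a} = \mathbf{b} \times \mathbf{c},</math>

where:

<math>\mathbf{a} = \begin{pmatrix} a_x \\ a_y \\ a_z \end{pmatrix}, \quad \mathbf{b} = \begin{pmatrix} b_x \\ b_y \\ b_z \end{pmatrix}, \quad \mathbf{c} = \begin{pmatrix} c_x \\ c_y \\ c_z \end{pmatrix}.</math>

Then:

<math>\begin{align} a_x &= b_y c_z - b_z c_y \\ a_y &= b_z c_x - b_x c_z \\ a_z &= b_x c_y - b_y c_x \end{align}</math>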

The second and third equations can be obtained from the first by simply vertically rotating the subscripts, x → y → z → x. The problem, of course, is how to remember the first equation, and two options are available for this purpose: either to remember the relevant two diagonals of Sarrus's scheme (those containing i), or to remember the xyzzy sequence.

Since the first diagonal in Sarrus's scheme is just the main diagonal of the above-mentioned 3×3 matrix, the first three letters of the word xyzzy can be very easily remembered.
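The subscript rotation can be expressed directly in code. Start from the xyzzy equation a_x = b_y c_z − b_z c_y and shift every index cyclically. The following is a minimal Python sketch; `cross_xyzzy` is an illustrative name:

```python
def cross_xyzzy(b, c):
    """a = b x c from the single rule a_x = b_y c_z - b_z c_y, with subscripts
    rotated cyclically x -> y -> z -> x (indices 0, 1, 2) for a_y and a_z."""
    a = []
    for i in range(3):
        j, k = (i + 1) % 3, (i + 2) % 3  # x: (y, z), y: (z, x), z: (x, y)
        a.append(b[j] * c[k] - b[k] * c[j])
    return tuple(a)

print(cross_xyzzy((1, 2, 3), (-2, 0, 5)))  # (10, -11, 4)
```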

Cross visualization

Similarly to the mnemonic device above, a "cross" or X can be visualized between the two vectors in the equation. This may be helpful for remembering the correct cross product formula.

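Suppose that

<math>\mathbf{a} = \mathbf{b} \times \mathbf{c}.</math>

Then:

<math>\mathbf{a} = \begin{pmatrix} b_x \\ b_y \\ b_z \end{pmatrix} \times \begin{pmatrix} c_x \\ c_y \\ c_z \end{pmatrix}.</math>

To obtain the formula for <math>a_x</math>, we simply drop <math>b_x</math> and <math>c_x</math> from the formula and take the next two components down:

<math>a_x = \begin{pmatrix} b_y \\ b_z \end{pmatrix} \times \begin{pmatrix} c_y \\ c_z \end{pmatrix}.</math>

When doing this for <math>a_y</math>, the next two elements down should "wrap around" the matrix, so that the x component comes after the z component. For <math>a_y</math>, then, the next two components are z and x (in that order). For <math>a_z</math>, the next two components are x and y.

<math>a_y = \begin{pmatrix} b_z \\ b_x \end{pmatrix} \times \begin{pmatrix} c_z \\ c_x \end{pmatrix}, \quad a_z = \begin{pmatrix} b_x \\ b_y \end{pmatrix} \times \begin{pmatrix} c_x \\ c_y \end{pmatrix}</math>

Now consider <math>a_x</math> and visualize the cross operator as pointing from an element on the left to an element on the right. We take the first element on the left and multiply it by the element that the cross points to in the right-hand matrix. We then subtract the next element down on the left, multiplied by the element that the cross points to from there. This gives the formula for <math>a_x</math>:

<math>a_x = b_y c_z - b_z c_y.</math>

We can do the same for <math>a_y</math> and <math>a_z</math> to construct their formulas.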

Applications

The cross product has applications in various contexts. For example, it is used in computational geometry, physics and engineering. A non-exhaustive list of examples follows.

Computational geometry

The cross product appears in the calculation of the distance of two skew lines (lines not in the same plane) from each other in three-dimensional space.

The cross product can be used to calculate the normal for a triangle or polygon, an operation frequently performed in computer graphics. For example, the winding of a polygon (clockwise or anticlockwise) about a point within the polygon can be calculated by triangulating the polygon (like spoking a wheel) and summing the angles (between the spokes) using the cross product to keep track of the sign of each angle.

In computational geometry of the plane, the cross product is used to determine the sign of the acute angle defined by three points <math>p_1 = (x_1, y_1)</math>, <math>p_2 = (x_2, y_2)</math> and <math>p_3 = (x_3, y_3)</math>. It corresponds to the direction (upward or downward) of the cross product of the two coplanar vectors defined by the two pairs of points <math>(p_1, p_2)</math> and <math>(p_1, p_3)</math>. The sign of the acute angle is the sign of the expression

<math>P = (x_2 - x_1)(y_3 - y_1) - (y_2 - y_1)(x_3 - x_1),</math>

which is the signed length of the cross product of the two vectors.

In the "right-handed" coordinate system, a result of 0 means the points are collinear. A positive result means the three points constitute a positive angle of rotation around <math>p_1</math> from <math>p_2</math> to <math>p_3</math>; a negative result means a negative angle. From another point of view, the sign of <math>P</math> tells whether <math>p_3</math> lies to the left or to the right of the line <math>p_1 p_2</math>.
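The orientation test P = (x2 − x1)(y3 − y1) − (y2 − y1)(x3 − x1) can be sketched in Python as follows. `orientation` is a hypothetical helper name, and points are (x, y) tuples in a right-handed frame (x to the right, y up):

```python
def orientation(p1, p2, p3):
    """Signed z-component of (p2 - p1) x (p3 - p1) for 2D points (x, y)."""
    return (p2[0] - p1[0]) * (p3[1] - p1[1]) - (p2[1] - p1[1]) * (p3[0] - p1[0])

p1, p2 = (0, 0), (4, 0)
print(orientation(p1, p2, (2, 3)))   # 12: positive, (2, 3) lies to the left of p1 -> p2
print(orientation(p1, p2, (2, -3)))  # -12: negative, to the right
print(orientation(p1, p2, (8, 0)))   # 0: collinear
```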

The cross product is used in calculating the volume of a polyhedron such as a tetrahedron or parallelepiped.
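For a tetrahedron, the volume is one sixth of the absolute scalar triple product of three edge vectors from a common vertex. The parallelepiped those edges span has six times the tetrahedron's volume. The Python sketch below assumes integer coordinates and uses `fractions.Fraction` to keep the result exact. `tetra_volume` is an illustrative name:

```python
from fractions import Fraction

def cross(a, b):
    """Cross product of two 3-vectors stored as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def tetra_volume(p0, p1, p2, p3):
    """Volume of tetrahedron p0 p1 p2 p3 as |(e1 x e2) . e3| / 6, edges taken from p0."""
    e1, e2, e3 = sub(p1, p0), sub(p2, p0), sub(p3, p0)
    return Fraction(abs(dot(cross(e1, e2), e3)), 6)

print(tetra_volume((0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)))  # 1/6
```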

Angular momentum and torque

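The angular momentum L of a particle about a given origin is defined as:

<math>\mathbf{L} = \mathbf{r} \times \mathbf{p},</math>

where r is the position vector of the particle relative to the origin and p is the linear momentum of the particle.

In the same way, the moment M of a force F_B applied at point B around point A is given as:

<math>\mathbf{M}_\mathrm{A} = \mathbf{r}_\mathrm{AB} \times \mathbf{F}_\mathrm{B},</math>

where r_AB is the position of B relative to A.

In mechanics the moment of a force is also called torque and written as

<math>\boldsymbol{\tau} = \mathbf{r} \times \mathbf{F}.</math>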

Since position r, linear momentum p and force F are all true vectors, both the angular momentum L and the moment of a force M are pseudovectors or axial vectors.

Rigid body

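The cross product frequently appears in the description of rigid motions. Two points P and Q on a rigid body can be related by:

<math>\mathbf{v}_P - \mathbf{v}_Q = \boldsymbol\omega \times \left(\mathbf{r}_P - \mathbf{r}_Q\right),</math>

where <math>\mathbf{r}</math> is the point's position, <math>\mathbf{v}</math> is its velocity and <math>\boldsymbol\omega</math> is the body's angular velocity.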

Since position and velocity are true vectors, the angular velocity is a pseudovector or axial vector.

Lorentz force

The cross product is used to describe the Lorentz force experienced by a moving electric charge q_e:

<math>\mathbf{F} = q_e \left(\mathbf{E} + \mathbf{v} \times \mathbf{B}\right).</math>

Since velocity v, force F and electric field E are all true vectors, the magnetic field B is a pseudovector.
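The following small Python sketch evaluates the Lorentz force F = q_e(E + v × B). It assumes SI units and made-up values for the velocity and field; `lorentz_force` is an illustrative helper. With E = 0 the force is perpendicular to v, because v × B is always orthogonal to v:

```python
def cross(a, b):
    """Cross product of two 3-vectors stored as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def lorentz_force(q, E, v, B):
    """F = q (E + v x B); SI units assumed (C, V/m, m/s, T -> N)."""
    v_cross_B = cross(v, B)
    return tuple(q * (e + m) for e, m in zip(E, v_cross_B))

q = 1.602176634e-19    # elementary charge in coulombs (e.g. a proton)
v = (2.0e5, 0.0, 0.0)  # velocity in m/s (illustrative value)
B = (0.0, 0.0, 0.5)    # magnetic field in tesla (illustrative value)
E = (0.0, 0.0, 0.0)    # no electric field

F = lorentz_force(q, E, v, B)
print(F)
# With E = 0 the force is perpendicular to v.
assert sum(f * vi for f, vi in zip(F, v)) == 0.0
```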

Other